    <management-interfaces>
      [...]
      <http-interface security-realm="ManagementRealm">
        <socket-binding http="management-http"/>
      </http-interface>
    </management-interfaces>

WildFly offers three different approaches to configure and manage servers: a web interface, a command line client and a set of XML configuration files. Regardless of the approach you choose, the configuration is always synchronized across the different views and finally persisted to the XML files.

Web Management Interface

The web interface is a GWT application that uses the HTTP management API to configure a management domain or standalone server.

HTTP Management Endpoint

The HTTP API endpoint is the entry point for management clients that rely on the HTTP protocol to integrate with the management layer. It uses a JSON encoded protocol and a de-typed, RPC style API to describe and execute management operations against a managed domain or standalone server. It's used by the web console, but offers integration capabilities for a wide range of other clients too.

The HTTP API endpoint is co-located with either the domain controller or a standalone server. By default, it runs on port 9990 (see standalone/configuration/standalone.xml or domain/configuration/host.xml).

The HTTP API endpoint serves two different contexts, one for executing management operations and another for accessing the web interface:

- Domain API: http://<host>:9990/management
- Web Console: http://<host>:9990/console
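As a rough illustration of the JSON encoded, de-typed protocol, the sketch below builds the body of a single management operation in Python. The helper name management_request is hypothetical, and the flat address list (alternating node type and node name) is an assumption based on common usage of the API rather than something stated in this guide.

```python
import json


def management_request(operation, address=(), **params):
    """Build the JSON body for one de-typed management operation.

    address is assumed to be a flat sequence of alternating node types
    and node names, e.g. ("subsystem", "logging").
    """
    body = {"operation": operation, "address": list(address)}
    body.update(params)
    return json.dumps(body)


# Read the server-state attribute of the root resource.
payload = management_request("read-attribute", name="server-state")
print(payload)
```

A body like this would typically be sent as an HTTP POST with a Content-Type of application/json to http://<host>:9990/management, using HTTP Digest credentials. The request itself is left out because it needs a running server.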

Accessing the web console

The web console is served through the same port as the HTTP management API. It can be accessed by pointing your browser to:

- http://<host>:9990/console

By default the web interface can be accessed here: http://localhost:9990/console.

Default HTTP Management Interface Security

WildFly is distributed secured by default. The default security mechanism is username/password based and uses HTTP Digest for authentication.

The server is secured by default so that authentication is still required if the management interfaces are accidentally exposed on a public IP address. For the same reason, the distribution contains no default user.

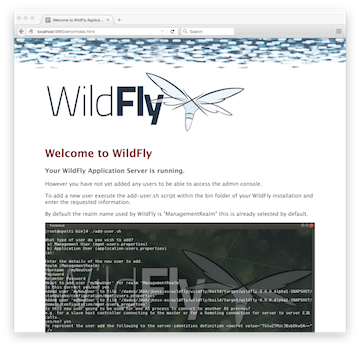

If you attempt to connect to the admin console before you have added a user to the server you will be presented with the following screen.

Users are stored in a properties file called mgmt-users.properties under standalone/configuration or domain/configuration, depending on the running mode of the server. These files contain each user's username along with a pre-prepared hash of the username, the name of the realm and the user's password.

Although the properties files do not contain plain-text passwords, they should still be guarded: the pre-prepared hashes could be used to gain access to any server with the same realm if the same user has used the same password.
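To see why the hashes deserve the same care as passwords, here is a minimal Python sketch of how such a pre-prepared hash can be computed. It assumes the format HEX(MD5(username ':' realm ':' password)), which is the format used by HTTP Digest authentication. The function names are hypothetical, and the add-user utility remains the supported way to maintain the file.

```python
import hashlib


def mgmt_user_hash(username, realm, password):
    """Return the hex MD5 digest of 'username:realm:password' (assumed format)."""
    token = f"{username}:{realm}:{password}".encode("utf-8")
    return hashlib.md5(token).hexdigest()


def properties_line(username, realm, password):
    """Format one 'username=hash' entry as it might appear in the properties file."""
    return f"{username}={mgmt_user_hash(username, realm, password)}"


# The same user, realm and password always produce the same entry,
# which is why a leaked hash is usable on any server sharing that realm.
print(properties_line("admin", "ManagementRealm", "example-password"))
```

Changing only the realm name produces a different hash, which is also why the realm entered in add-user must match the realm in the configuration.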

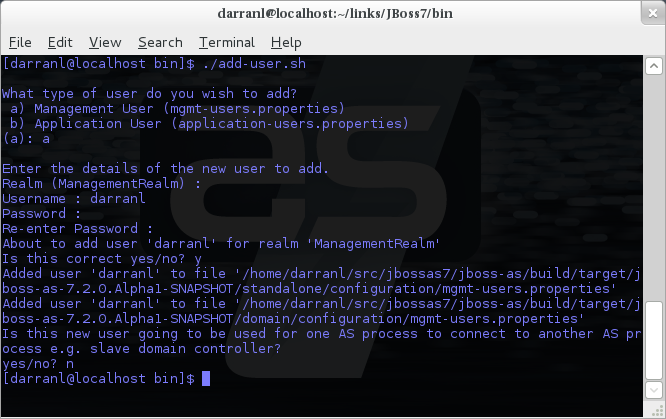

To manipulate the files and add users we provide a utility add-user.sh and add-user.bat to add the users and generate the hashes, to add a user you should execute the script and follow the guided process.

The full details of the add-user utility are described later, but for the purpose of accessing the management interface you need to enter the following values:

- Type of user - This will be a 'Management User', so select option a.
- Realm - This MUST match the realm name used in the configuration, so unless you have changed the configuration to use a different realm name, leave this set as 'ManagementRealm'.
- Username - The username of the user you are adding.
- Password - The user's password.

Provided the validation passes you will then be asked to confirm you want to add the user and the properties files will be updated.

For the final question, just answer 'n', since this user is only going to access the admin console. That option is used when adding slave host controllers that authenticate against a master domain controller, which is a later topic.

Updates to the properties file are picked up in real time, so either click 'Try Again' on the error page that was displayed in the browser or navigate to the console again. You should then be prompted to enter the username and password to connect to the server.

Command Line Interface

The Command Line Interface (CLI) is a management tool for a managed domain or standalone server. It allows a user to connect to the domain controller or a standalone server and execute management operations available through the de-typed management model.

Running the CLI

Depending on the operating system, the CLI is launched using jboss-cli.sh or jboss-cli.bat located in the WildFly bin directory. For further information on the default directory structure, please consult the "Getting Started Guide".

The first thing to do after the CLI has started is to connect to a managed WildFly instance. This is done using the command connect, e.g.

    ./bin/jboss-cli.sh
    You are disconnected at the moment. Type 'connect' to connect to the server or 'help' for the list of supported commands.
    [disconnected /]
    [disconnected /] connect
    [domain@localhost:9990 /]
    [domain@localhost:9990 /] quit
    Closed connection to localhost:9990

localhost:9990 is the default host and port combination for the WildFly CLI client.

The host and the port of the server can be provided as an optional parameter, if the server is not listening on localhost:9990.

    ./bin/jboss-cli.sh
    You are disconnected at the moment. Type 'connect' to connect to the server
    [disconnected /] connect 192.168.0.10:9990
    Connected to standalone controller at 192.168.0.10:9990

The :9990 is not required as the CLI will use port 9990 by default. The port needs to be provided if the server is listening on some other port.

To terminate the session type quit.

The jboss-cli script accepts a --connect parameter: ./jboss-cli.sh --connect

The --controller parameter can be used to specify the host and port of the server: ./jboss-cli.sh --connect --controller=192.168.0.1:9990

Help is also available:

    [domain@localhost:9990 /] help --commands
    Commands available in the current context:
    batch               connection-factory  deployment-overlay  if                  patch               reload              try
    cd                  connection-info     echo                jdbc-driver-info    pwd                 rollout-plan        undeploy
    clear               data-source         echo-dmr            jms-queue           quit                run-batch           unset
    command             deploy              help                jms-topic           read-attribute      set                 version
    connect             deployment-info     history             ls                  read-operation      shutdown            xa-data-source
    To read a description of a specific command execute 'command_name --help'.

Non-interactive Mode

The CLI can also be run in non-interactive mode to support scripts and other types of command line or batch processing. The --command and --commands arguments can be used to pass a command or a list of commands to execute. Additionally a --file argument is supported which enables CLI commands to be provided from a text file.

For example, the following command can be used to list all the current deployments:

    $ ./bin/jboss-cli.sh --connect --commands=ls\ deployment
    sample.war
    osgi-bundle.jar

The output can be combined with other shell commands for further processing, for example to find out what .war files are deployed:

    $ ./bin/jboss-cli.sh --connect --commands=ls\ deployment | grep war
    sample.war
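The same non-interactive invocation can be driven from a script. The Python sketch below assembles the argument list and filters the output, mirroring the grep example above. The function names are hypothetical, the comma used to join several commands is an assumption about the --commands list format, and list_deployments only works against a running server.

```python
import subprocess


def cli_argv(commands, controller=None, script="./bin/jboss-cli.sh"):
    """Build the argument list for a non-interactive CLI run."""
    argv = [script, "--connect"]
    if controller:
        argv.append(f"--controller={controller}")
    # Assumption: multiple commands are passed as one comma-separated list.
    argv.append("--commands=" + ",".join(commands))
    return argv


def list_deployments(suffix=".war", **kwargs):
    """Run 'ls deployment' and keep the names ending in suffix."""
    result = subprocess.run(
        cli_argv(["ls deployment"], **kwargs),
        capture_output=True, text=True, check=True,
    )
    return [name for name in result.stdout.split() if name.endswith(suffix)]


print(cli_argv(["ls deployment"]))
```

Passing the arguments as a list avoids the shell escaping that the command line version needs for the space in "ls deployment".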

Default Native Management Interface Security

The native interface shares the same security configuration as the HTTP interface. In addition, a local authentication mechanism is supported, which means that the CLI can authenticate against a local WildFly instance without prompting the user for a username and password. This mechanism only works if the user running the CLI has read access to the standalone/tmp/auth or domain/tmp/auth folder under the respective WildFly installation. If the local mechanism fails, the CLI falls back to prompting for the username and password of a user configured as described in Default HTTP Management Interface Security.

Establishing a CLI connection to a remote server will require a username and password by default.

Operation Requests

Operation requests allow for low level interaction with the management model. They are different from the high level commands (e.g. create-jms-queue) in that they allow you to read and modify the server configuration as if you were editing the XML configuration files directly. The configuration is represented as a tree of addressable resources, where each node in the tree (aka resource) offers a set of operations to execute.

An operation request consists of three parts: the address, an operation name and an optional set of parameters.

The formal specification for an operation request is:

[/node-type=node-name (/node-type=node-name)*] : operation-name [( [parameter-name=parameter-value (,parameter-name=parameter-value)*] )]

For example:

/subsystem=logging/root-logger=ROOT:change-root-log-level(level=WARN)
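To make the three parts concrete, the following Python sketch assembles an operation request string from an address, an operation name and optional parameters. The helper format_operation is hypothetical and deliberately simple: it does not escape special characters such as ':' or '/' inside node names.

```python
def format_operation(address, name, params=None):
    """Assemble an operation request string from its three parts.

    address is a list of (node_type, node_name) pairs; params is an
    optional dict of parameter names to values.
    """
    path = "".join(f"/{node_type}={node_name}" for node_type, node_name in address)
    request = f"{path}:{name}"
    if params:
        args = ",".join(f"{key}={value}" for key, value in params.items())
        request += f"({args})"
    return request


print(format_operation(
    [("subsystem", "logging"), ("root-logger", "ROOT")],
    "change-root-log-level",
    {"level": "WARN"},
))
```

With an empty address and no parameters the helper produces a bare operation such as :read-resource.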

Tab-completion is supported for all commands and options, i.e. node-types and node-names, operation names and parameter names. We are also considering adding aliases that are less verbose for the user, and will translate into the corresponding operation requests in the background.

Whitespace between the separators in the operation request strings is not significant.

Addressing resources

Operation requests might not always have the address part or the parameters. E.g.

:read-resource

which will list all the node types for the current node.

To syntactically disambiguate between the commands and operations, operations require one of the following prefixes:

Prefix ":" executes an operation against the current node, e.g.

    cd subsystem=logging
    :read-resource(recursive="true")

Prefix "./" executes an operation against a child node of the current node, e.g.

    cd subsystem=logging
    ./root-logger=ROOT:change-root-log-level(level=WARN)

Prefix "/" executes an operation against the root node, e.g.

    /:read-resource

Available Operation Types and Descriptions

Operations fall into two groups: common operations that exist on any node and specific operations that belong to a particular configuration resource (e.g. a subsystem). The common operations are:

- add
- read-attribute
- read-children-names
- read-children-resources
- read-children-types
- read-operation-description
- read-operation-names
- read-resource
- read-resource-description
- remove
- validate-address
- write-attribute

For a list of specific operations (e.g. operations that relate to the logging subsystem) you can always query the model itself. For example, to read the operations supported by the logging subsystem resource on a standalone server:

    [standalone@localhost:9990 /] /subsystem=logging:read-operation-names
    {
        "outcome" => "success",
        "result" => [
            "add",
            "change-root-log-level",
            "read-attribute",
            "read-children-names",
            "read-children-resources",
            "read-children-types",
            "read-operation-description",
            "read-operation-names",
            "read-resource",
            "read-resource-description",
            "remove-root-logger",
            "root-logger-assign-handler",
            "root-logger-unassign-handler",
            "set-root-logger",
            "validate-address",
            "write-attribute"
        ]
    }

As you can see, the logging resource offers five operations beyond the common ones, namely change-root-log-level, remove-root-logger, root-logger-assign-handler, root-logger-unassign-handler and set-root-logger.
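One way to pick out the resource-specific operations is to subtract the common operations from the names returned by read-operation-names. The Python sketch below does this for the output shown above. The names COMMON_OPERATIONS and specific_operations are hypothetical helpers, not part of WildFly.

```python
# The common operations listed earlier in this section.
COMMON_OPERATIONS = {
    "add", "read-attribute", "read-children-names", "read-children-resources",
    "read-children-types", "read-operation-description", "read-operation-names",
    "read-resource", "read-resource-description", "remove", "validate-address",
    "write-attribute",
}


def specific_operations(operation_names):
    """Return the operations that are not common to every resource, sorted."""
    return sorted(set(operation_names) - COMMON_OPERATIONS)


# The "result" list from the read-operation-names output above.
logging_operations = [
    "add", "change-root-log-level", "read-attribute", "read-children-names",
    "read-children-resources", "read-children-types",
    "read-operation-description", "read-operation-names", "read-resource",
    "read-resource-description", "remove-root-logger",
    "root-logger-assign-handler", "root-logger-unassign-handler",
    "set-root-logger", "validate-address", "write-attribute",
]

for operation in specific_operations(logging_operations):
    print(operation)
```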

Further documentation about a resource or operation can be retrieved through the description:

    [standalone@localhost:9990 /] /subsystem=logging:read-operation-description(name=change-root-log-level)
    {
        "outcome" => "success",
        "result" => {
            "operation-name" => "change-root-log-level",
            "description" => "Change the root logger level.",
            "request-properties" => {"level" => {
                "type" => STRING,
                "description" => "The log level specifying which message levels will be logged by this logger.
                                 Message levels lower than this value will be discarded.",
                "required" => true
            }}
        }
    }

To see the full model enter :read-resource(recursive=true).

Command History

Command (and operation request) history is enabled by default. The history is kept both in-memory and in a file on the disk, i.e. it is preserved between command line sessions. The history file name is .jboss-cli-history and is automatically created in the user's home directory. When the command line interface is launched this file is read and the in-memory history is initialized with its content.

While in the command line session, you can use the arrow keys to go back and forth in the history of commands and operations.

To manipulate the history you can use the history command. If executed without any arguments, it will print all the recorded commands and operations (up to the configured maximum, which defaults to 500) from the in-memory history.

history supports three optional arguments:

- disable - will disable history expansion (but will not clear the previously recorded history);
- enable - will re-enable history expansion (starting from the last recorded command before the history expansion was disabled);
- clear - will clear the in-memory history (but not the file one).
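Because the history is also kept in a plain file, it can be inspected outside the CLI. The Python sketch below returns the most recent entries from .jboss-cli-history in the user's home directory. It assumes the file holds one command or operation per line, which this guide does not state, and recent_history is a hypothetical helper.

```python
from pathlib import Path


def recent_history(limit=10, history_file=None):
    """Return up to `limit` of the most recent non-empty history lines."""
    path = Path(history_file) if history_file else Path.home() / ".jboss-cli-history"
    if limit <= 0 or not path.exists():
        return []
    lines = path.read_text(encoding="utf-8", errors="replace").splitlines()
    entries = [line for line in lines if line.strip()]
    return entries[-limit:]


for entry in recent_history(5):
    print(entry)
```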

Batch Processing

The batch mode allows one to group commands and operations and execute them together as an atomic unit. If at least one of the commands or operations fails, all the other successfully executed commands and operations in the batch are rolled back.

Not all commands are allowed in a batch. Only commands that translate into operation requests can be included, so commands like cd, ls and help are not allowed. In fact, the batch is executed as a composite operation request.

The batch mode is entered by executing the batch command.

    [standalone@localhost:9990 /] batch
    [standalone@localhost:9990 / #] /subsystem=datasources/data-source="java\:\/H2DS":enable
    [standalone@localhost:9990 / #] /subsystem=messaging-activemq/server=default/jms-queue=newQueue:add

You can execute a batch using the run-batch command:

    [standalone@localhost:9990 / #] run-batch
    The batch executed successfully.
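Since a batch is executed as a composite operation request, the two batched steps above can be pictured as a single request carrying a list of steps. The Python sketch below builds such a request in the JSON form used by the HTTP management API. The operation name "composite" and its "steps" parameter are part of the management model, while the helper name and the flat address lists are assumptions made for illustration.

```python
import json


def composite(steps):
    """Wrap individual operations into one composite operation request."""
    return {"operation": "composite", "address": [], "steps": list(steps)}


# The two operations from the batch example, as de-typed requests.
steps = [
    {
        "operation": "enable",
        "address": ["subsystem", "datasources", "data-source", "java:/H2DS"],
    },
    {
        "operation": "add",
        "address": ["subsystem", "messaging-activemq", "server", "default",
                    "jms-queue", "newQueue"],
    },
]

request = composite(steps)
print(json.dumps(request, indent=2))
```

Grouping the steps in one request is what allows the server to roll back all of them if any single step fails.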

Exit the batch edit mode without losing your changes:

    [standalone@localhost:9990 / #] holdback-batch
    [standalone@localhost:9990 /]

Then activate it later on again:

    [standalone@localhost:9990 /] batch
    Re-activated batch
    #1 /subsystem=datasources/data-source="java\:\/H2DS":enable

There are several other notable batch commands available as well (tab complete to see the list):

- clear-batch
- edit-batch-line (e.g. edit-batch-line 3 create-jms-topic name=mytopic)
- remove-batch-line (e.g. remove-batch-line 3)
- move-batch-line (e.g. move-batch-line 3 1)
- discard-batch

Configuration Files

WildFly stores its configuration in centralized XML configuration files, one per server for standalone servers and, for managed domains, one per host with an additional domain wide policy controlled by the master host. These files are meant to be human-readable and human editable.

The XML configuration files act as a central, authoritative source of configuration. Any configuration changes made via the web interface or the CLI are persisted back to the XML configuration files. If a domain or standalone server is offline, the XML configuration files can be hand edited as well, and any changes will be picked up when the domain or standalone server is next started. However, users are encouraged to use the web interface or the CLI in preference to making offline edits to the configuration files. External changes made to the configuration files while processes are running will not be detected, and may be overwritten.

Standalone Server Configuration File

The XML configuration for a standalone server can be found in the standalone/configuration directory. The default configuration file is standalone/configuration/standalone.xml.

The standalone/configuration directory includes a number of other standard configuration files, e.g. standalone-full.xml, standalone-ha.xml and standalone-full-ha.xml, each of which is similar to the default standalone.xml file but includes additional subsystems not present in the default configuration. If you prefer to use one of these files as your server configuration, you can specify it with the -c or --server-config command line argument:

- bin/standalone.sh -c=standalone-full.xml
- bin/standalone.sh --server-config=standalone-ha.xml

Managed Domain Configuration Files

In a managed domain, the XML files are found in the domain/configuration directory. There are two types of configuration files – one per host, and then a single domain-wide file managed by the master host, aka the Domain Controller. (For more on the types of processes in a managed domain, see Operating modes.)

Host Specific Configuration – host.xml

When you start a managed domain process, a Host Controller instance is launched, and it parses its own configuration file to determine its own configuration, how it should integrate with the rest of the domain, any host-specific values for settings in the domain wide configuration (e.g. IP addresses) and what servers it should launch. This information is contained in the host-specific configuration file, the default version of which is domain/configuration/host.xml.

Each host will have its own variant host.xml, with settings appropriate for its role in the domain. WildFly ships with three standard variants:

| Variant | Description |
| --- | --- |
| host-master.xml | A configuration that specifies the Host Controller should become the master, aka the Domain Controller. No servers will be started by this Host Controller, which is a recommended setup for a production master. |
| host-slave.xml | A configuration that specifies the Host Controller should not become master and instead should register with a remote master and be controlled by it. This configuration launches servers, although a user will likely wish to modify how many servers are launched and what server groups they belong to. |
| host.xml | The default host configuration, tailored for an easy out of the box experience experimenting with a managed domain. This configuration specifies the Host Controller should become the master, aka the Domain Controller, but it also launches a couple of servers. |

Which host-specific configuration should be used can be controlled via the _--host-config_ command line argument:

    $ bin/domain.sh --host-config=host-master.xml

Domain Wide Configuration – domain.xml

Once a Host Controller has processed its host-specific configuration, it knows whether it is configured to act as the master Domain Controller. If it is, it must parse the domain wide configuration file, by default located at domain/configuration/domain.xml. This file contains the bulk of the settings that should be applied to the servers in the domain when they are launched – among other things, what subsystems they should run with what settings, what sockets should be used, and what deployments should be deployed.

Which domain-wide configuration should be used can be controlled via the _--domain-config_ command line argument:

    $ bin/domain.sh --domain-config=domain-production.xml

That argument is only relevant for hosts configured to act as the master.

A slave Host Controller does not usually parse the domain wide configuration file. A slave gets the domain wide configuration from the remote master Domain Controller when it registers with it. A slave also will not persist changes to a domain.xml file if one is present on the filesystem. For that reason it is recommended that no domain.xml be kept on the filesystem of hosts that will only run as slaves.

A slave can be configured to keep a locally persisted copy of the domain wide configuration and then use it on boot in case the master is not available. See --backup and --cached-dc under Command line parameters.