Preface

Full text search engines like Apache Lucene are very powerful technologies to add efficient free text search capabilities to applications. However, Lucene suffers several mismatches when dealing with object domain models. Amongst other things indexes have to be kept up to date and mismatches between index structure and domain model as well as query mismatches have to be avoided.

Hibernate Search addresses these shortcomings - it indexes your domain model with the help of a few annotations, takes care of database/index synchronization and brings back regular managed objects from free text queries. To achieve this Hibernate Search is combining the power of Hibernate ORM and Apache Lucene/Elasticsearch.

1. Getting started

This section will guide you through the initial steps required to integrate Hibernate Search into your application.

1.1. Compatibility

Java Runtime |

Java 8 or greater. |

|---|---|

Hibernate ORM (for the ORM mapper) |

Hibernate ORM 5.4.32.Final. |

JPA (for the ORM mapper) |

JPA 2.2. |

Apache Lucene (for the Lucene backend) |

Lucene 8.7.0. |

Elasticsearch server (for the Elasticsearch backend) |

Elasticsearch 5.6, 6.8 or 7.10. Other minor versions (e.g. 6.0 or 7.0) may work but are not given priority for bugfixes and new features. |

|

Find more information for all versions of Hibernate Search on our compatibility matrix. The compatibility policy may also be of interest. |

1.2. Migration notes

If you are upgrading an existing application from an earlier version of Hibernate Search to the latest release, make sure to check out the migration guide.

|

To Hibernate Search 5 users If you pull our artifacts from a Maven repository and you come from Hibernate Search 5, be aware that just bumping the version number will not be enough. In particular, the group IDs changed from Additionally, be aware that a lot of APIs changed, some only because of a package change, others because of more fundamental changes (like moving away from using Lucene types in Hibernate Search APIs). |

1.3. Framework support

1.3.1. Quarkus

Quarkus has an official extension for Hibernate Search with Elasticsearch. We recommend you follow Quarkus’s Hibernate Search Guide: it is a great hands-on introduction to Hibernate Search, and it covers the specifics of Quarkus (different dependencies, different configuration properties, …).

1.3.2. Spring Boot

Hibernate Search can easily be integrated into a Spring Boot application. Just read about Spring Boot’s specifics below, then follow the getting started guide.

Configuration properties

application.properties/application.yaml are Spring Boot configuration files,

not JPA or Hibernate Search configuration files.

Adding Hibernate Search properties starting with hibernate.search. directly in that file will not work.

Instead, prefix your Hibernate Search properties with

spring.jpa.properties.,

so that Spring Boot passes along the properties to Hibernate ORM, which will pass them along to Hibernate Search.

For example:

spring.jpa.properties.hibernate.search.backend.hosts = elasticsearch.mycompany.comDependency versions

Spring Boot automatically sets the version of dependencies without your knowledge. While this is ordinarily a good thing, from time to time Spring Boot dependencies will be a little out of date. Thus, it is recommended to override Spring Boot’s defaults at least for some key dependencies.

With Maven, add this to your POM’s <properties>:

<properties>

<hibernate.version>5.4.32.Final</hibernate.version>

<elasticsearch.version>7.10.0</elasticsearch.version>

<!-- ... plus any other properties of yours ... -->

</properties>|

If, after setting the properties above,

you still have problems (e.g. |

Application hanging on startup

Spring Boot 2.3.x and above is affected by a bug that causes the application to hang on startup when using Hibernate Search, particularly when using custom components (custom bridges, analysis configurers, …).

The problem, which is not limited to just Hibernate Search, has been reported, but hasn’t been fixed yet in Spring Boot 2.5.1.

As a workaround, you can set the property spring.data.jpa.repositories.bootstrap-mode to deferred or,

if that doesn’t work, default.

Interestingly, using @EnableJpaRepositories(bootstrapMode = BootstrapMode.DEFERRED) has been reported to work

even in situations where setting spring.data.jpa.repositories.bootstrap-mode to deferred didn’t work.

Alternatively, if you do not need dependency injection in your custom components,

you can refer to those components with the prefix constructor:

so that Hibernate Search doesn’t even try to use Spring to retrieve the components,

and thus avoids the deadlock in Spring.

See this section for more information.

1.4. Dependencies

The Hibernate Search artifacts can be found in Maven’s Central Repository.

If you do not want to, or cannot, fetch the JARs from a Maven repository, you can get them from the distribution bundle hosted at Sourceforge.

In order to use Hibernate Search, you will need at least two direct dependencies:

-

a dependency to the "mapper", which extracts data from your domain model and maps it to indexable documents;

-

and a dependency to the "backend", which allows to index and search these documents.

Below are the most common setups and matching dependencies for a quick start; read Architecture for more information.

- Hibernate ORM + Lucene

-

Allows indexing of ORM entities in a single application node, storing the index on the local filesystem.

If you get Hibernate Search from Maven, use these dependencies:

<dependency> <groupId>org.hibernate.search</groupId> <artifactId>hibernate-search-mapper-orm</artifactId> <version>6.0.11.Final</version> </dependency> <dependency> <groupId>org.hibernate.search</groupId> <artifactId>hibernate-search-backend-lucene</artifactId> <version>6.0.11.Final</version> </dependency>If you get Hibernate Search from the distribution bundle, copy the JARs from

dist/engine,dist/mapper/orm,dist/backend/lucene, and their respectivelibsubdirectories. - Hibernate ORM + Elasticsearch

-

Allows indexing of ORM entities on multiple application nodes, storing the index on a remote Elasticsearch cluster (to be configured separately).

If you get Hibernate Search from Maven, use these dependencies:

<dependency> <groupId>org.hibernate.search</groupId> <artifactId>hibernate-search-mapper-orm</artifactId> <version>6.0.11.Final</version> </dependency> <dependency> <groupId>org.hibernate.search</groupId> <artifactId>hibernate-search-backend-elasticsearch</artifactId> <version>6.0.11.Final</version> </dependency>If you get Hibernate Search from the distribution bundle, copy the JARs from

dist/engine,dist/mapper/orm,dist/backend/elasticsearch, and their respectivelibsubdirectories.

1.5. Configuration

Once you have added all required dependencies to your application, it’s time to have a look at the configuration file.

|

If you are new to Hibernate ORM, we recommend you start there to implement entity persistence in your application, and only then come back here to add Hibernate Search indexing. |

The configuration properties of Hibernate Search are sourced from Hibernate ORM, so they can be added to any file from which Hibernate ORM takes its configuration:

-

A

hibernate.propertiesfile in your classpath. -

The

hibernate.cfg.xmlfile in your classpath, if using Hibernate ORM native bootstrapping. -

The

persistence.xmlfile in your classpath, if using Hibernate ORM JPA bootstrapping.

Hibernate Search provides sensible defaults for all configuration properties, but depending on your setup you might want to set the following:

persistence.xml for a "Hibernate ORM + Lucene" setup<property name="hibernate.search.backend.directory.root"

value="some/filesystem/path"/> (1)| 1 | Set the location of indexes in the filesystem. By default, the backend will store indexes in the current working directory. |

persistence.xml for a "Hibernate ORM + Elasticsearch" setup<property name="hibernate.search.backend.hosts"

value="elasticsearch.mycompany.com"/> (1)

<property name="hibernate.search.backend.protocol"

value="https"/> (2)

<property name="hibernate.search.backend.username"

value="ironman"/> (3)

<property name="hibernate.search.backend.password"

value="j@rV1s"/>| 1 | Set the Elasticsearch hosts to connect to.

By default, the backend will attempt to connect to localhost:9200. |

| 2 | Set the protocol. The default is http, but you may need to use https. |

| 3 | Set the username and password for basic HTTP authentication. You may also be interested in AWS IAM authentication. |

1.6. Mapping

Let’s assume that your application contains the Hibernate ORM managed classes Book and Author

and you want to index them in order to search the books contained in your database.

import java.util.HashSet;

import java.util.Set;

import javax.persistence.Entity;

import javax.persistence.GeneratedValue;

import javax.persistence.Id;

import javax.persistence.ManyToMany;

@Entity

public class Book {

@Id

@GeneratedValue

private Integer id;

private String title;

private String isbn;

private int pageCount;

@ManyToMany

private Set<Author> authors = new HashSet<>();

public Book() {

}

// Getters and setters

// ...

}import java.util.HashSet;

import java.util.Set;

import javax.persistence.Entity;

import javax.persistence.GeneratedValue;

import javax.persistence.Id;

import javax.persistence.ManyToMany;

@Entity

public class Author {

@Id

@GeneratedValue

private Integer id;

private String name;

@ManyToMany(mappedBy = "authors")

private Set<Book> books = new HashSet<>();

public Author() {

}

// Getters and setters

// ...

}To make these entities searchable, you will need to map them to an index structure. The mapping can be defined using annotations, or using a programmatic API; this getting started guide will show you a simple annotation mapping. For more details, refer to Mapping Hibernate ORM entities to indexes.

Below is an example of how the model above can be mapped.

import java.util.HashSet;

import java.util.Set;

import javax.persistence.Entity;

import javax.persistence.GeneratedValue;

import javax.persistence.Id;

import javax.persistence.ManyToMany;

import org.hibernate.search.mapper.pojo.mapping.definition.annotation.FullTextField;

import org.hibernate.search.mapper.pojo.mapping.definition.annotation.GenericField;

import org.hibernate.search.mapper.pojo.mapping.definition.annotation.Indexed;

import org.hibernate.search.mapper.pojo.mapping.definition.annotation.IndexedEmbedded;

import org.hibernate.search.mapper.pojo.mapping.definition.annotation.KeywordField;

@Entity

@Indexed (1)

public class Book {

@Id (2)

@GeneratedValue

private Integer id;

@FullTextField (3)

private String title;

@KeywordField (4)

private String isbn;

@GenericField (5)

private int pageCount;

@ManyToMany

@IndexedEmbedded (6)

private Set<Author> authors = new HashSet<>();

public Book() {

}

// Getters and setters

// ...

}import java.util.HashSet;

import java.util.Set;

import javax.persistence.Entity;

import javax.persistence.GeneratedValue;

import javax.persistence.Id;

import javax.persistence.ManyToMany;

import org.hibernate.search.mapper.pojo.mapping.definition.annotation.FullTextField;

@Entity (7)

public class Author {

@Id

@GeneratedValue

private Integer id;

@FullTextField (3)

private String name;

@ManyToMany(mappedBy = "authors")

private Set<Book> books = new HashSet<>();

public Author() {

}

// Getters and setters

// ...

}| 1 | @Indexed marks Book as indexed, i.e. an index will be created for that entity, and that index will be kept up to date. |

| 2 | By default, the JPA @Id is used to generate a document identifier. |

| 3 | @FullTextField maps a property to a full-text index field with the same name and type.

Full-text fields are broken down into tokens and normalized (lowercased, …).

Here we’re relying on default analysis configuration,

but most applications need to customize it;

this will be addressed further down. |

| 4 | @KeywordField maps a property to a non-analyzed index field.

Useful for identifiers, for example. |

| 5 | Hibernate Search is not just for full-text search: you can index non-String types with the @GenericField annotation,

A broad range of property types are supported out-of-the-box,

such as primitive types (int, double, …) and their boxed counterpart (Integer, Double, …),

enums, date/time types, BigInteger/BigDecimal, etc. |

| 6 | @IndexedEmbedded "embeds" the indexed form of associated objects (entities or embeddables)

into the indexed form of the embedding entity.

Here, the |

| 7 | Entities that are only @IndexedEmbedded in other entities,

but do not require to be searchable by themselves, do not need to be annotated with @Indexed. |

This is a very simple example, but is enough to get started. Just remember that Hibernate Search allows more complex mappings:

-

Multiple

@*Fieldannotations exist, some of them allowing full-text search, some of them allowing finer-grained configuration for field of a certain type. You can find out more about@*Fieldannotations in Mapping a property to an index field with@GenericField,@FullTextField, …. -

Properties, or even types, can be mapped with finer-grained control using "bridges". This allows the mapping of types that are not supported out-of-the-box. See Bridges for more information.

1.7. Initialization

Before the application is started for the first time, some initialization may be required:

-

The indexes and their schema need to be created.

-

Data already present in the database (if any) needs to be indexed.

1.7.1. Schema management

Before indexing can take place, indexes and their schema need to be created, either on disk (Lucene) or through REST API calls (Elasticsearch).

Fortunately, by default, Hibernate Search will take care of creating indexes on the first startup: you don’t have to do anything.

The next time the application is started, existing indexes will be re-used.

|

Any change to your mapping (adding new fields, changing the type of existing fields, …) between two restarts of the application will require an update to the index schema. This will require some special handling, though it can easily be solved by dropping and re-creating the index. See Changing the mapping of an existing application for more information. |

1.7.2. Initial indexing

As we’ll see later, Hibernate Search takes care of triggering indexing every time an entity changes in the application.

However, data already present in the database when you add the Hibernate Search integration is unknown to Hibernate Search, and thus has to be indexed through a batch process. To that end, you can use the mass indexer API, as shown in the following code:

SearchSession searchSession = Search.session( entityManager ); (1)

MassIndexer indexer = searchSession.massIndexer( Book.class ) (2)

.threadsToLoadObjects( 7 ); (3)

indexer.startAndWait(); (4)| 1 | Get a Hibernate Search session, called SearchSession, from the EntityManager. |

| 2 | Create an "indexer", passing the entity types you want to index.

To index all entity types, call massIndexer() without any argument. |

| 3 | It is possible to set the number of threads to be used. For the complete list of options see Reindexing large volumes of data with the MassIndexer. |

| 4 | Invoke the batch indexing process. |

| If no data is initially present in the database, mass indexing is not necessary. |

1.8. Indexing

Hibernate Search will transparently index every entity persisted, updated or removed through Hibernate ORM. Thus this code would transparently populate your index:

// Not shown: get the entity manager and open a transaction

Author author = new Author();

author.setName( "John Doe" );

Book book = new Book();

book.setTitle( "Refactoring: Improving the Design of Existing Code" );

book.setIsbn( "978-0-58-600835-5" );

book.setPageCount( 200 );

book.getAuthors().add( author );

author.getBooks().add( book );

entityManager.persist( author );

entityManager.persist( book );

// Not shown: commit the transaction and close the entity manager|

By default, in particular when using the Elasticsearch backend, changes will not be visible right after the transaction is committed. A slight delay (by default one second) will be necessary for Elasticsearch to process the changes. For that reason, if you modify entities in a transaction, and then a execute search query right after that transaction, the search results may not be consistent with the changes you just performed. See Synchronization with the indexes for more information about this behavior and how to tune it. |

1.9. Searching

Once the data is indexed, you can perform search queries.

The following code will prepare a search query targeting the index for the Book entity,

filtering the results so that at least one field among title and authors.name

contains the string refactoring.

The matches are implicitly on words ("tokens") instead of the full string,

and are case-insensitive: that’s because the targeted fields are full-text fields.

// Not shown: get the entity manager and open a transaction

SearchSession searchSession = Search.session( entityManager ); (1)

SearchResult<Book> result = searchSession.search( Book.class ) (2)

.where( f -> f.match() (3)

.fields( "title", "authors.name" )

.matching( "refactoring" ) )

.fetch( 20 ); (4)

long totalHitCount = result.total().hitCount(); (5)

List<Book> hits = result.hits(); (6)

List<Book> hits2 =

/* ... same DSL calls as above... */

.fetchHits( 20 ); (7)

// Not shown: commit the transaction and close the entity manager| 1 | Get a Hibernate Search session, called SearchSession, from the EntityManager. |

| 2 | Initiate a search query on the index mapped to the Book entity. |

| 3 | Define that only documents matching the given predicate should be returned.

The predicate is created using a factory f passed as an argument to the lambda expression. |

| 4 | Build the query and fetch the results, limiting to the top 20 hits. |

| 5 | Retrieve the total number of matching entities. |

| 6 | Retrieve matching entities. |

| 7 | In case you’re not interested in the whole result, but only in the hits,

you can also call fetchHits() directly. |

If for some reason you don’t want to use lambdas, you can use an alternative, object-based syntax, but it will be a bit more verbose:

// Not shown: get the entity manager and open a transaction

SearchSession searchSession = Search.session( entityManager ); (1)

SearchScope<Book> scope = searchSession.scope( Book.class ); (2)

SearchResult<Book> result = searchSession.search( scope ) (3)

.where( scope.predicate().match() (4)

.fields( "title", "authors.name" )

.matching( "refactoring" )

.toPredicate() )

.fetch( 20 ); (5)

long totalHitCount = result.total().hitCount(); (6)

List<Book> hits = result.hits(); (7)

List<Book> hits2 =

/* ... same DSL calls as above... */

.fetchHits( 20 ); (8)

// Not shown: commit the transaction and close the entity manager| 1 | Get a Hibernate Search session, called SearchSession, from the EntityManager. |

| 2 | Create a "search scope", representing the indexed types that will be queried. |

| 3 | Initiate a search query targeting the search scope. |

| 4 | Define that only documents matching the given predicate should be returned. The predicate is created using the same search scope as the query. |

| 5 | Build the query and fetch the results, limiting to the top 20 hits. |

| 6 | Retrieve the total number of matching entities. |

| 7 | Retrieve matching entities. |

| 8 | In case you’re not interested in the whole result, but only in the hits,

you can also call fetchHits() directly. |

It is possible to get just the total hit count, using fetchTotalHitCount().

// Not shown: get the entity manager and open a transaction

SearchSession searchSession = Search.session( entityManager );

long totalHitCount = searchSession.search( Book.class )

.where( f -> f.match()

.fields( "title", "authors.name" )

.matching( "refactoring" ) )

.fetchTotalHitCount(); (1)

// Not shown: commit the transaction and close the entity manager| 1 | Fetch the total hit count. |

Note that, while the examples above retrieved hits as managed entities, it is just one of the possible hit types. See Projection DSL for more information.

1.10. Analysis

Full-text search allows fast matches on words in a case-insensitive way, which is one step further than substring search in a relational database. But it can get much better: what if we want a search with the term "refactored" to match our book whose title contains "refactoring"? That’s possible with custom analysis.

Analysis is how text is supposed to be processed when indexing and searching. This involves analyzers, which are made up of three types of components, applied one after the other:

-

zero or (rarely) more character filters, to clean up the input text:

A <strong>GREAT</strong> résume⇒A GREAT résume. -

a tokenizer, to split the input text into words, called "tokens":

A GREAT résume⇒[A, GREAT, résume]. -

zero or more token filters, to normalize the tokens and remove meaningless tokens.

[A, GREAT, résume]⇒[great, resume].

There are built-in analyzers, in particular the default one, which will:

-

tokenize (split) the input according to the Word Break rules of the Unicode Text Segmentation algorithm;

-

filter (normalize) tokens by turning uppercase letters to lowercase.

The default analyzer is a good fit for most language, but is not very advanced. To get the most of analysis, you will need to define a custom analyzer by picking the tokenizer and filters most suited to your specific needs.

The following paragraphs will explain how to configure and use a simple yet reasonably useful analyzer. For more information about analysis and how to configure it, refer to the Analysis section.

Each custom analyzer needs to be given a name in Hibernate Search. This is done through analysis configurers, which are defined per backend:

-

First, you need to implement an analysis configurer, a Java class that implements a backend-specific interface:

LuceneAnalysisConfigurerorElasticsearchAnalysisConfigurer. -

Second, you need to alter the configuration of your backend to actually use your analysis configurer.

As an example, let’s assume that one of your indexed Book entities has the title

"Refactoring: Improving the Design of Existing Code",

and you want to get hits for any of the following search terms:

"Refactor", "refactors", "refactored" and "refactoring".

One way to achieve this is to use an analyzer with the following components:

-

A "standard" tokenizer, which splits words at whitespaces, punctuation characters and hyphens. It is a good general-purpose tokenizer.

-

A "lowercase" filter, which converts every character to lowercase.

-

A "snowball" filter, which applies language-specific stemming.

-

Finally, an "ascii-folding" filter, which replaces characters with diacritics ("é", "à", …) with their ASCII equivalent ("e", "a", …).

The examples below show how to define an analyzer with these components, depending on the backend you picked.

persistence.xml for a "Hibernate ORM + Lucene" setuppackage org.hibernate.search.documentation.gettingstarted.withhsearch.customanalysis;

import org.hibernate.search.backend.lucene.analysis.LuceneAnalysisConfigurationContext;

import org.hibernate.search.backend.lucene.analysis.LuceneAnalysisConfigurer;

import org.apache.lucene.analysis.core.LowerCaseFilterFactory;

import org.apache.lucene.analysis.miscellaneous.ASCIIFoldingFilterFactory;

import org.apache.lucene.analysis.snowball.SnowballPorterFilterFactory;

import org.apache.lucene.analysis.standard.StandardTokenizerFactory;

public class MyLuceneAnalysisConfigurer implements LuceneAnalysisConfigurer {

@Override

public void configure(LuceneAnalysisConfigurationContext context) {

context.analyzer( "english" ).custom() (1)

.tokenizer( StandardTokenizerFactory.class ) (2)

.tokenFilter( LowerCaseFilterFactory.class ) (3)

.tokenFilter( SnowballPorterFilterFactory.class ) (3)

.param( "language", "English" ) (4)

.tokenFilter( ASCIIFoldingFilterFactory.class );

context.analyzer( "name" ).custom() (5)

.tokenizer( StandardTokenizerFactory.class )

.tokenFilter( LowerCaseFilterFactory.class )

.tokenFilter( ASCIIFoldingFilterFactory.class );

}

}<property name="hibernate.search.backend.analysis.configurer"

value="class:org.hibernate.search.documentation.gettingstarted.withhsearch.customanalysis.MyLuceneAnalysisConfigurer"/> (6)| 1 | Define a custom analyzer named "english", to analyze English text such as book titles. |

| 2 | Set the tokenizer to a standard tokenizer. You need to pass factory classes to refer to components. |

| 3 | Set the token filters. Token filters are applied in the order they are given. |

| 4 | Set the value of a parameter for the last added char filter/tokenizer/token filter. |

| 5 | Define another custom analyzer, named "name", to analyze author names. On contrary to the first one, do not use enable stemming, as it is unlikely to lead to useful results on proper nouns. |

| 6 | Assign the configurer to the backend in the Hibernate Search configuration (here in persistence.xml).

For more information about the format of bean references, see Parsing of bean references. |

persistence.xml for a "Hibernate ORM + Elasticsearch" setuppackage org.hibernate.search.documentation.gettingstarted.withhsearch.customanalysis;

import org.hibernate.search.backend.elasticsearch.analysis.ElasticsearchAnalysisConfigurationContext;

import org.hibernate.search.backend.elasticsearch.analysis.ElasticsearchAnalysisConfigurer;

public class MyElasticsearchAnalysisConfigurer implements ElasticsearchAnalysisConfigurer {

@Override

public void configure(ElasticsearchAnalysisConfigurationContext context) {

context.analyzer( "english" ).custom() (1)

.tokenizer( "standard" ) (2)

.tokenFilters( "lowercase", "snowball_english", "asciifolding" ); (3)

context.tokenFilter( "snowball_english" ) (4)

.type( "snowball" )

.param( "language", "English" ); (5)

context.analyzer( "name" ).custom() (6)

.tokenizer( "standard" )

.tokenFilters( "lowercase", "asciifolding" );

}

}<property name="hibernate.search.backend.analysis.configurer"

value="class:org.hibernate.search.documentation.gettingstarted.withhsearch.customanalysis.MyElasticsearchAnalysisConfigurer"/> (7)| 1 | Define a custom analyzer named "english", to analyze English text such as book titles. |

| 2 | Set the tokenizer to a standard tokenizer. |

| 3 | Set the token filters. Token filters are applied in the order they are given. |

| 4 | Note that, for Elasticsearch, any parameterized char filter, tokenizer or token filter must be defined separately and assigned a name. |

| 5 | Set the value of a parameter for the char filter/tokenizer/token filter being defined. |

| 6 | Define another custom analyzer, named "name", to analyze author names. On contrary to the first one, do not use enable stemming, as it is unlikely to lead to useful results on proper nouns. |

| 7 | Assign the configurer to the backend in the Hibernate Search configuration (here in persistence.xml).

For more information about the format of bean references, see Parsing of bean references. |

Once analysis is configured, the mapping must be adapted to assign the relevant analyzer to each field:

import java.util.HashSet;

import java.util.Set;

import javax.persistence.Entity;

import javax.persistence.GeneratedValue;

import javax.persistence.Id;

import javax.persistence.ManyToMany;

import org.hibernate.search.mapper.pojo.mapping.definition.annotation.FullTextField;

import org.hibernate.search.mapper.pojo.mapping.definition.annotation.GenericField;

import org.hibernate.search.mapper.pojo.mapping.definition.annotation.Indexed;

import org.hibernate.search.mapper.pojo.mapping.definition.annotation.IndexedEmbedded;

import org.hibernate.search.mapper.pojo.mapping.definition.annotation.KeywordField;

@Entity

@Indexed

public class Book {

@Id

@GeneratedValue

private Integer id;

@FullTextField(analyzer = "english") (1)

private String title;

@KeywordField

private String isbn;

@GenericField

private int pageCount;

@ManyToMany

@IndexedEmbedded

private Set<Author> authors = new HashSet<>();

public Book() {

}

// Getters and setters

// ...

}import java.util.HashSet;

import java.util.Set;

import javax.persistence.Entity;

import javax.persistence.GeneratedValue;

import javax.persistence.Id;

import javax.persistence.ManyToMany;

import org.hibernate.search.mapper.pojo.mapping.definition.annotation.FullTextField;

@Entity

public class Author {

@Id

@GeneratedValue

private Integer id;

@FullTextField(analyzer = "name") (1)

private String name;

@ManyToMany(mappedBy = "authors")

private Set<Book> books = new HashSet<>();

public Author() {

}

// Getters and setters

// ...

}| 1 | Replace the @GenericField annotation with @FullTextField,

and set the analyzer parameter to the name of the custom analyzer configured earlier. |

That’s it! Now, once the entities will be reindexed, you will be able to search for the terms "Refactor", "refactors", "refactored" or "refactoring", and the book entitled "Refactoring: Improving the Design of Existing Code" will show up in the results.

|

Mapping changes are not auto-magically applied to already-indexed data. Unless you know what you are doing, you should remember to reindex your data after you changed the Hibernate Search mapping of your entities. |

// Not shown: get the entity manager and open a transaction

SearchSession searchSession = Search.session( entityManager );

SearchResult<Book> result = searchSession.search( Book.class )

.where( f -> f.match()

.fields( "title", "authors.name" )

.matching( "refactored" ) )

.fetch( 20 );

// Not shown: commit the transaction and close the entity manager1.11. What’s next

The above paragraphs gave you an overview of Hibernate Search. The next step after this tutorial is to get more familiar with the overall architecture of Hibernate Search ( Architecture) and explore the basic features in more detail.

Two topics which were only briefly touched in this tutorial were analysis configuration ( Analysis) and bridges ( Bridges). Both are important features required for more fine-grained indexing.

When it comes to initializing your index, you will be interested in schema management and mass indexing.

When querying, you will probably want to know more about predicates, sorts, projections, aggregations.

You can also have a look at sample applications:

-

The Quarkus quickstart, a sample application using Hibernate Search with the Quarkus framework.

-

The "Library" showcase, a sample application using Hibernate Search with the Spring Boot framework.

2. Concepts

2.1. Full-text search

Full-text search is a set of techniques for searching, in a corpus of text documents, the documents that best match a given query.

The main difference with traditional search — for example in an SQL database — is that the stored text is not considered as a single block of text, but as a collection of tokens (words).

Hibernate Search relies on either Apache Lucene or Elasticsearch to implement full-text search. Since Elasticsearch uses Lucene internally, they share a lot of characteristics and their general approach to full-text search.

To simplify, these search engines are based on the concept of inverted indexes: a dictionary where the key is a token (word) found in a document, and the value is the list of identifiers of every document containing this token.

Still simplifying, once all documents are indexed, searching for documents involves three steps:

-

extracting tokens (words) from the query;

-

looking up these tokens in the index to find matching documents;

-

aggregating the results of the lookups to produce a list of matching documents.

|

Lucene and Elasticsearch are not limited to just text search: numeric data is also supported, enabling support for integers, doubles, longs, dates, etc. These types are indexed and queried using a slightly different approach, which obviously does not involve text processing. |

2.2. Mapping

Applications targeted by Hibernate search generally use an entity-based model to represent data.

In this model, each entity is a single object with a few properties of atomic type

(String, Integer, LocalDate, …).

Each entity can have multiple associations to one or even many other entities.

Entities are thus organized as a graph, where each node is an entity and each association is an edge.

By contrast, Lucene and Elasticsearch work with documents. Each document is a collection of "fields", each field being assigned a name — a unique string — and a value — which can be text, but also numeric data such as an integer or a date. Fields also have a type, which not only determines the type of values (text/numeric), but more importantly the way this value will be stored: indexed, stored, with doc values, etc. It is possible to introduce nested documents, but not real associations.

Documents are thus organized, at best, as a collection of trees, where each tree is a document, optionally with nested documents.

There are multiple mismatches between the entity model and the document model: properties vs. fields, associations vs. nested documents, graph vs. collection of trees.

The goal of mapping, in Hibernate search, is to resolve these mismatches by defining how to transform one or more entities into a document, and how to resolve a search hit back into the original entity. This is the main added value of Hibernate Search, the basis for everything else from automatic indexing to the various search DSLs.

Mapping is usually configured using annotations in the entity model, but this can also be achieved using a programmatic API. To learn more about how to configure mapping, see Mapping Hibernate ORM entities to indexes.

To learn how to index the resulting documents, see Indexing Hibernate ORM entities (hint: it’s automatic).

To learn how to search with an API that takes advantage of the mapping to be closer to the entity model, in particular by returning hits as entities instead of just document identifiers, see Searching.

2.3. Analysis

As mentioned in Full-text search, the full-text engine works on tokens, which means text has to be processed both when indexing (document processing, to build the token → document index) and when searching (query processing, to generate a list of tokens to look up).

However, the processing is not just about "tokenizing".

Index lookups are exact lookups,

which means that looking up Great (capitalized) will not return documents containing only great (all lowercase).

An extra step is performed when processing text to address this caveat:

token filtering, which normalizes tokens.

Thanks to that "normalization",

Great will be indexed as great,

so that an index lookup for the query great will match as expected.

In the Lucene world (Lucene, Elasticsearch, Solr, …), text processing during both the indexing and searching phases is called "analysis" and is performed by an "analyzer".

The analyzer is made up of three types of components, which will each process the text successively in the following order:

-

Character filter: transforms the input characters. Replaces, adds or removes characters.

-

Tokenizer: splits the text into several words, called "tokens".

-

Token filter: transforms the tokens. Replaces, add or removes characters in a token, derives new tokens from the existing ones, removes tokens based on some condition, …

The tokenizer usually splits on whitespaces (though there are other options).

Token filters are usually where customization takes place.

They can remove accented characters,

remove meaningless suffixes (-ing, -s, …)

or tokens (a, the, …),

replace tokens with a chosen spelling (wi-fi ⇒ wifi),

etc.

|

Character filters, though useful, are rarely used, because they have no knowledge of token boundaries. Unless you know what you are doing, you should generally favor token filters. |

In some cases, it is necessary to index text in one block, without any tokenization:

-

For some types of text, such as SKUs or other business codes, tokenization simply does not make sense: the text is a single "keyword".

-

For sorts by field value, tokenization is not necessary. It is also forbidden in Hibernate Search due to performance issues; only non-tokenized fields can be sorted on.

To address these use cases, a special type of analyzer, called "normalizer", is available. Normalizers are simply analyzers that are guaranteed not to use a tokenizer: they can only use character filters and token filters.

In Hibernate Search, analyzers and normalizers are referenced by their name, for example when defining a full-text field. Analyzers and normalizers have two separate namespaces.

Some names are already assigned to built-in analyzers (in Elasticsearch in particular), but it is possible (and recommended) to assign names to custom analyzers and normalizers, assembled using built-in components (tokenizers, filters) to address your specific needs.

Each backend exposes its own APIs to define analyzers and normalizers, and generally to configure analysis. See the documentation of each backend for more information:

2.4. Commit and refresh

In order to get the best throughput when indexing and when searching, both Elasticsearch and Lucene rely on "buffers" when writing to and reading from the index:

-

When writing, changes are not directly written to the index, but to an "index writer" that buffers changes in-memory or in temporary files.

The changes are "pushed" to the actual index when the writer is committed. Until the commit happens, uncommitted changes are in an "unsafe" state: if the application crashes or if the server suffers from a power loss, uncommitted changes will be lost.

-

When reading, e.g. when executing a search query, data is not read directly from the index, but from an "index reader" that exposes a view of the index as it was at some point in the past.

The view is updated when the reader is refreshed. Until the refresh happens, results of search queries might be slightly out of date: documents added since the last refresh will be missing, documents delete since the last refresh will still be there, etc.

Unsafe changes and out-of-date indexes are obviously undesirable, but they are a trade-off that improves performance.

Different factors influence when refreshes and commit happen:

-

Automatic indexing will, by default, require that a commit of the index writer is performed after each set of changes, meaning the changes are safe after the Hibernate ORM transaction commit returns. However, no refresh is requested by default, meaning the changes may only be visible at a later time, when the backend decides to refresh the index reader. This behavior can be customized by setting a different synchronization strategy.

-

The mass indexer will not require any commit or refresh until the very end of mass indexing, so as to maximize indexing throughput.

-

Whenever there are no particular commit or refresh requirements, backend defaults will apply:

-

See here for Lucene.

-

A commit may be forced explicitly through the

flush()API. -

A refresh may be forced explicitly though the

refresh()API.

|

Even though we use the word "commit", this is not the same concept as a commit in relational database transactions: there is no transaction and no "rollback" is possible. There is no concept of isolation, either. After a refresh, all changes to the index are taken into account: those committed to the index, but also those that are still buffered in the index writer. For this reason, commits and refreshes can be treated as completely orthogonal concepts: certain setups will occasionally lead to committed changes not being be visible in search queries, while others will allow even uncommitted changes to be visible in search queries. |

2.5. Sharding and routing

Sharding consists in splitting index data into multiple "smaller indexes", called shards, in order to improve performance when dealing with large amounts of data.

In Hibernate Search, similarly to Elasticsearch, another concept is closely related to sharding: routing. Routing consists in resolving a document identifier, or generally any string called a "routing key", into the corresponding shard.

When indexing:

-

A document identifier and optionally a routing key are generated from the indexed entity.

-

The document, along with its identifier and optionally its routing key, is passed to the backend.

-

The backend "routes" the document to the correct shard, and adds the routing key (if any) to a special field in the document (so that it’s indexed).

-

The document is indexed in that shard.

When searching:

-

The search query can optionally be passed one or more routing keys.

-

If no routing key is passed, the query will be executed on all shards.

-

If one or more routing keys are passed:

-

The backend resolves these routing keys into a set of shards, and the query will only be executed on all shards, ignoring the other shards.

-

A filter is added to the query so that only documents indexed with one of the given routing keys are matched.

-

Sharding, then, can be leveraged to boost performance in two ways:

-

When indexing: a sharded index can spread the "stress" onto multiple shards, which can be located on different disks (Lucene) or different servers (Elasticsearch).

-

When searching: if one property, let’s call it

category, is often used to select a subset of documents, this property can be defined as a routing key in the mapping, so that it’s used to route documents instead of the document ID. As a result, documents with the same value forcategorywill be indexed in the same shard. Then when searching, if a query already filters documents so that it is known that the hits will all have the same value forcategory, the query can be manually routed to the shards containing documents with this value, and the other shards can be ignored.

To enable sharding, some configuration is required:

-

The backends require explicit configuration: see here for Lucene and here for Elasticsearch.

-

In most cases, document IDs are used to route documents to shards by default. This does not allow taking advantage of routing when searching, which requires multiple documents to share the same routing key. Applying routing to a search query in that case will return at most one result. To explicitly define the routing key to assign to each document, assign routing bridges to your entities.

|

Sharding is static by nature: each index is expected to have the same shards, with the same identifiers, from one boot to the other. Changing the number of shards or their identifiers will require full reindexing. |

3. Architecture

3.1. Components of Hibernate Search

From the user’s perspective, Hibernate Search consists of two components:

- Mapper

-

The mapper "maps" the user model to an index model, and provide APIs consistent with the user model to perform indexing and searching.

Most applications rely on the ORM mapper, which offers the ability to index properties of Hibernate ORM entities.

The mapper is configured partly through annotations on the domain model, and partly through configuration properties.

- Backend

-

The backend is the abstraction over the full-text engines, where "things get done". It implements generic indexing and searching interfaces for use by the mapper through "index managers", each providing access to one index.

For instance the Lucene backend delegates to the Lucene library, and the Elasticsearch backend delegates to a remote Elasticsearch cluster.

The backend is configured partly by the mapper, which tells the backend which indexes must exist and what fields they must have, and partly through configuration properties.

The mapper and backend work together to provide three main features:

- Mass indexing

-

This is how Hibernate Search rebuilds indexes from zero based on the content of a database.

The mapper queries the database to retrieve the identifier of every entity, then processes these identifiers in batches, loading the entities then processing them to generate documents that are sent to the backend for indexing. The backend puts the document in an internal queue, and will index documents in batches, in background processes, notifying the mapper when it’s done.

See Reindexing large volumes of data with the

MassIndexerfor details. - Automatic indexing

-

This is how Hibernate Search keeps indexes in sync with a database.

When an entity changes, the mapper detects the change and stores the information in an indexing plan. Upon transaction commit, entities are processed to generate documents that are sent to the backend for indexing. The backend puts the document in an internal queue, and will index documents in batches, in background processes, notifying the mapper when it’s done.

See Automatic indexing for details.

- Searching

-

This is how Hibernate Search provides ways to query an index.

The mapper exposes entry points to the search DSL, allowing selection of entity types to query. When one or more entity types are selected, the mapper delegates to the corresponding index managers to provide a Search DSL and ultimately create the search query. Upon query execution, the backend submits a list of entity references to the mapper, which loads the corresponding entities. The entities are then returned by the query.

See Searching for details.

3.2. Examples of architectures

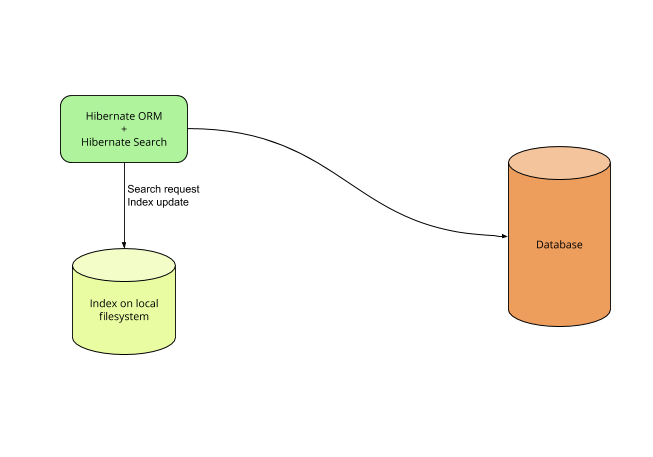

3.2.1. Single-node application with the Lucene backend

With the Lucene backend, indexes are local to a given node (JVM). They are accessed through direct calls to the Lucene library, without going through the network.

This mode is only relevant to single-node applications.

Pros:

-

Simplicity. No external services are required, everything lives on the same server.

-

Immediate (~milliseconds) visibility of indexes updates. While other backends can perform comparably well for most use cases, a single-node, Lucene backend is the best way to implement indexing if you need changes to be visible immediately after the database changes.

Cons:

-

No horizontal scalability: there can only be one application node, and all indexes need to live on the same server.

-

Not so easy to extend: experienced developers can access a lot of Lucene features, even those that are not exposed by Hibernate Search, by providing native Lucene objects; however, Lucene APIs are not very easy to figure out for developers unfamiliar with Lucene. If you’re interested, see for example

Query-based predicates.

To implement this architecture, use the following Maven dependencies:

<dependency>

<groupId>org.hibernate.search</groupId>

<artifactId>hibernate-search-mapper-orm</artifactId>

<version>6.0.11.Final</version>

</dependency>

<dependency>

<groupId>org.hibernate.search</groupId>

<artifactId>hibernate-search-backend-lucene</artifactId>

<version>6.0.11.Final</version>

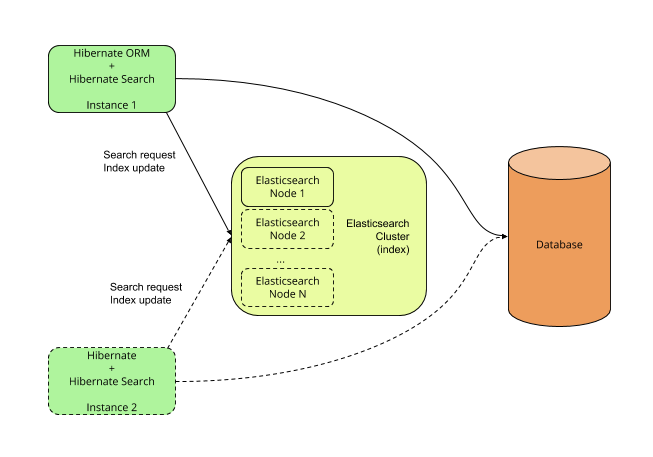

</dependency>3.2.2. Single-node or multi-node application with the Elasticsearch backend

With the Elasticsearch backend, indexes are not tied to the application. They are managed by a separate cluster of Elasticsearch nodes, and accessed through calls to REST APIs.

| The Elasticsearch cluster may be a single node living on the same server as the application. |

Pros:

-

Horizontal scalability of the indexes: you can size the Elasticsearch cluster according to your needs. See "Scalability and resilience" in the Elasticsearch documentation.

-

Horizontal scalability of the application: you can have as many instances of the application as you need.

-

Easy to extend: you can easily access most Elasticsearch features, even those that are not exposed by Hibernate Search, by providing your own JSON. See for example JSON-defined predicates, or JSON-defined aggregations, or leveraging advanced features with JSON manipulation.

Cons:

-

Need to manage an additional service: the Elasticsearch cluster.

-

Delayed (~1 second) visibility of indexes updates (near-real-time). While changes can be made visible as soon as possible after the database changes, Elasticsearch is near-real-time by nature, and won’t perform very well if you need changes to be visible immediately after the database changes.

|

In multi-node applications, there is a possibility for the indexes to get out of sync in very specific scenarios where two transactions simultaneously trigger reindexing of the same entity instance. + HSEARCH-3281 will restore full support for clustered applications. |

To implement this architecture, use the following Maven dependencies:

<dependency>

<groupId>org.hibernate.search</groupId>

<artifactId>hibernate-search-mapper-orm</artifactId>

<version>6.0.11.Final</version>

</dependency>

<dependency>

<groupId>org.hibernate.search</groupId>

<artifactId>hibernate-search-backend-elasticsearch</artifactId>

<version>6.0.11.Final</version>

</dependency>4. Limitations

4.1. In rare cases, automatic indexing involving @IndexedEmbedded may lead to out-of sync indexes

4.1.1. Description

If two entity instances are indexed-embedded in the same "index-embedding" entity, and these two entity instance are updated in parallel transactions, there is a small risk that the transaction commits happen in just the wrong way, leading to the index-embedding entity being reindexed with only part of the updates.

For example, consider indexed entity A, which index-embeds B and C. The following course of events involving two parallel transactions (T1 and T2) will lead to an out of date index:

-

T1: Load B.

-

T1: Change B in a way that will require reindexing A.

-

T2: Load C.

-

T2: Change C in a way that will require reindexing A.

-

T2: Request the transaction commit. Hibernate Search builds the document for A. While doing so, it automatically loads B. B appears unmodified, as T1 wasn’t committed yet.

-

T1: Request the transaction commit. Hibernate Search builds documents to index. While doing so, it automatically loads C. C appears unmodified, as T2 wasn’t committed yet.

-

T1: Transaction is committed. Hibernate Search automatically sends the updated A to the index. In this version, B is updated, but C is not.

-

T2: Transaction is committed. Hibernate Search automatically sends the updated A to the index. In this version, C is updated, but B is not.

This chain of events ends with the index containing a version of A where C is updated, but B is not.

4.1.2. Workaround

The following solutions can help circumvent this limitation:

-

Avoid parallel updates to entities that are indexed-embedded in the same indexed entity. This is only possible in very specific setups.

-

OR schedule a full reindexing of your database periodically (e.g. every night) to get the index back in sync with the database.

4.1.3. Roadmap

This problem cannot be solved using Elasticsearch’s optimistic concurrency control: when two conflicting versions of the same documents are created due to this issue, neither version is completely right.

We plan to address this limitation in Hibernate Search 6.1 by offering fully asynchronous indexing, from entity loading to index updates. To track progress of this feature, see HSEARCH-3281.

In short, entities will no longer be indexed directly in the ORM session where the entity change occurred. Instead, "change events" will be sent to a queue, then consumed by a background process, which will load the entity from a separate session and perform the indexing.

As long as events are sent to the queue after the original transaction is committed, and background indexing processes avoid concurrent reindexing of the same entity, the limitation will no longer apply: indexing will always get data from the latest committed state of the database, and out-of-date documents will never "overwrite" up-to-date documents.

This feature will be opt-in, as it has both upsides and downsides.

- Upsides

-

-

It will provide opportunities for scaling out indexing, by sharding the event queue and distributing the process across multiple nodes.

-

It may even clear the way for new features such as HSEARCH-1937.

-

- Downsides

-

-

It may require additional infrastructure to store the indexing queue. However, we intend to provide a basic solution relying on the database exclusively.

-

It will most likely prevent any form of synchronous indexing (waiting for indexing to finish before releasing the application thread).

-

4.2. Automatic indexing is not compatible with Session serialization

4.2.1. Description

When automatic indexing is enabled,

Hibernate Search collects entity change events

to build an "indexing plan" inside the ORM EntityManager/Session.

The indexing plan holds information relative to which entities need to be reindexed,

and sometimes documents that have not been indexed yet.

The indexing plan cannot be serialized.

If the ORM Session gets serialized,

all collected change events will be lost upon deserializing the session,

and Hibernate Search will likely "forget" to reindex some entities.

This is fine in most applications, since they do not rely on serializing the session, but it might be a problem with some JEE applications relying on Bean Passivation.

5. Configuration

5.1. Configuration sources

When using Hibernate Search within Hibernate ORM, configuration properties are retrieved from Hibernate ORM.

This means that wherever you set Hibernate ORM properties, you can set Hibernate Search properties:

-

In a

hibernate.propertiesfile at the root of your classpath. -

In

persistence.xml, if you bootstrap Hibernate ORM with the JPA APIs -

In JVM system properties (

-DmyProperty=myValuepassed to thejavacommand) -

In the configuration file of your framework, for example

application.yaml/application.properties.

|

When setting properties through the configuration file of your framework, the keys of configuration properties will likely be different from the keys mentioned in this documentation. For example See Framework support for more information. |

5.2. Structure of configuration properties

Configuration properties are all grouped under a common root.

In the ORM integration, this root is hibernate.search,

but other integrations (Infinispan, …) may use a different one.

This documentation will use hibernate.search in all examples.

Under that root, we can distinguish between three categories of properties.

- Global properties

-

These properties potentially affect all Hibernate Search. They are generally located just under the

hibernate.searchroot.Global properties are explained in the relevant parts of this documentation:

- Backend properties

-

These properties affect a single backend. They are grouped under a common root:

-

hibernate.search.backendfor the default backend (most common usage). -

hibernate.search.backends.<backend name>for a named backend (advanced usage).

Backend properties are explained in the relevant parts of this documentation:

-

- Index properties

-

These properties affect either one or multiple indexes, depending on the root.

With the root

hibernate.search.backend, they set defaults for all indexes of the backend.With the root

hibernate.search.backend.indexes.<index name>, they set the value for a specific index, overriding the defaults (if any). The backend and index names must match the names defined in the mapping. For ORM entities, the default index name is the name of the indexed class, without the package:org.mycompany.Bookwill haveBookas its default index name. Index names can be customized in the mapping.Alternatively, the backend can also be referenced by name, i.e. the roots above can also be

hibernate.search.backends.<backend name>orhibernate.search.backends.<backend name>.indexes.<index name>.Examples:

-

hibernate.search.backend.io.commit_interval = 500sets theio.commit_intervalproperty for all indexes of the default backend. -

hibernate.search.backend.indexes.Product.io.commit_interval = 2000sets theio.commit_intervalproperty for theProductindex of the default backend. -

hibernate.search.backends.myBackend.io.commit_interval = 500sets theio.commit_intervalproperty for all indexes of backendmyBackend. -

hibernate.search.backends.myBackend.indexes.Product.io.commit_interval = 2000sets theio.commit_intervalproperty for theProductindex of backendmyBackend.

Other index properties are explained in the relevant parts of this documentation:

-

5.3. Type of configuration properties

Property values can be set programmatically as Java objects, or through a configuration file as a string that will have to be parsed.

Each configuration property in Hibernate Search has an assigned type, and this type defines the accepted values in both cases.

Here are the definitions of all property types.

| Designation | Accepted Java objects | Accepted String format |

|---|---|---|

String |

|

Any string |

Boolean |

|

|

Integer |

|

Any string that can be parsed by |

Long |

|

Any string that can be parsed by |

Bean reference of type T |

An instance of |

|

Multi-valued bean reference of type T |

A |

Comma-separated string containing bean references (see above) |

5.3.1. Configuration Builders

Both BackendSettings and IndexSettings provide tools to help build the configuration property keys.

- BackendSettings

-

BackendSettings.backendKey(ElasticsearchBackendSettings.HOSTS)is equivalent tohibernate.search.backend.hosts.BackendSettings.backendKey("myBackend", ElasticsearchBackendSettings.HOSTS)is equivalent tohibernate.search.backends.myBackend.hosts.For a list of available property keys, see ElasticsearchBackendSettings or LuceneBackendSettings

- IndexSettings

-

IndexSettings.indexKey("myIndex", ElasticsearchIndexSettings.INDEXING_QUEUE_SIZE)is equivalent tohibernate.search.backend.indexes.myIndex.indexing.queue_size.IndexSettings.indexKey("myBackend", "myIndex", ElasticsearchIndexSettings.INDEXING_QUEUE_SIZE)is equivalent tohibernate.search.backends.myBackend.indexes.myIndex.indexing.queue_size.For a list of available property keys, see ElasticsearchIndexSettings or LuceneIndexSettings

private Properties buildHibernateConfiguration() {

Properties config = new Properties();

// backend configuration

config.put( BackendSettings.backendKey( ElasticsearchBackendSettings.HOSTS ), "127.0.0.1:9200" );

config.put( BackendSettings.backendKey( ElasticsearchBackendSettings.PROTOCOL ), "http" );

// index configuration

config.put(

IndexSettings.indexKey( "myIndex", ElasticsearchIndexSettings.INDEXING_MAX_BULK_SIZE ),

20

);

// orm configuration

config.put(

HibernateOrmMapperSettings.AUTOMATIC_INDEXING_SYNCHRONIZATION_STRATEGY,

AutomaticIndexingSynchronizationStrategyNames.ASYNC

);

// engine configuration

config.put( EngineSettings.BACKGROUND_FAILURE_HANDLER, "myFailureHandler" );

return config;

}5.4. Configuration property checking

Hibernate Search will track the parts of the provided configuration that are actually used and will log a warning if any configuration property starting with "hibernate.search." is never used, because that might indicate a configuration issue.

To disable this warning, set the hibernate.search.configuration_property_checking.strategy

property to ignore.

5.5. Beans

Hibernate Search allows to plug in references to custom beans in various places: configuration properties, mapping annotations, arguments to APIs, …

This section describes the supported frameworks, how to reference beans, how the beans are resolved and how the beans can get injected with other beans.

5.5.1. Supported frameworks

When using the Hibernate Search integration into Hibernate ORM, all dependency injection frameworks supported by Hibernate ORM are supported.

This includes, but may not be limited to:

When the framework is not supported, or when using Hibernate Search without Hibernate ORM, beans can only be retrieved using reflection by calling the public, no-arg constructor of the referenced type.

5.6. Bean references

Bean references are composed of two parts:

-

The type, i.e. a

java.lang.Class. -

Optionally, the name, as a

String.

When referencing beans using a string value in configuration properties, the type is implicitly set to whatever interface Hibernate Search expects for that configuration property.

|

For experienced users, Hibernate Search also provides the |

5.6.1. Parsing of bean references

When referencing beans using a string value in configuration properties, that string is parsed.

Here are the most common formats:

-

bean:followed by the name of a Spring or CDI bean. For examplebean:myBean. -

class:followed by the fully-qualified name of a class, to be instantiated through Spring/CDI if available, or through its public, no-argument constructor otherwise. For exampleclass:com.mycompany.MyClass. -

An arbitrary string that doesn’t contain a colon: it will be interpreted as explained in Bean resolution. In short:

-

first, look for a built-in bean with the given name;

-

then try to retrieve a bean with the given name from Spring/CDI (if available);

-

then try to interpret the string as a fully-qualified class name and to retrieve the corresponding bean from Spring/CDI (if available);

-

then try to interpret the string as a fully-qualified class name and to instantiate it through its public, no-argument constructor.

-

The following formats are also accepted, but are only useful for advanced use cases:

-

any:followed by an arbitrary string. Equivalent to leaving out the prefix in most cases. Only useful if the arbitrary string contains a colon. -

builtin:followed by the name of a built-in bean, e.g.simplefor the Elasticsearch index layout strategies. This will not fall back to Spring/CDI or a direct constructor call. -

constructor:followed by the fully-qualified name of a class, to be instantiated through its public, no-argument constructor. This will ignore built-in beans and will not try to instantiate the class through Spring/CDI.

5.6.2. Bean resolution

Bean resolution (i.e. the process of turning this reference into an object instance) happens as follows by default:

-

If the given reference matches a built-in bean, that bean is used.

Example: the name

simple, when used as the value of the propertyhibernate.search.backend.layout.strategyto configure the Elasticsearch index layout strategy, resolves to the built-insimplestrategy. -

Otherwise, if a dependency injection framework is integrated into Hibernate ORM, the reference is resolved using the DI framework (see Supported frameworks).

-

If a managed bean with the given type (and if provided, name) exists, that bean is used.

Example: the name

myLayoutStrategy, when used as the value of the propertyhibernate.search.backend.layout.strategyto configure the Elasticsearch index layout strategy, resolves to any bean known from CDI/Spring of typeIndexLayoutStrategyand annotated with@Named("myShardinStrategy"). -

Otherwise, if a name is given, and that name is a fully-qualified class name, and a managed bean of that type exists, that bean is used.

Example: the name

com.mycompany.MyLayoutStrategy, when used as the value of the propertyhibernate.search.backend.layout.strategyto configure the Elasticsearch index layout strategy, resolves to any bean known from CDI/Spring and extendingcom.mycompany.MyLayoutStrategy.

-

-

Otherwise, reflection is used to resolve the bean.

-

If a name is given, and that name is a fully-qualified class name, and that class extends the type reference, an instance is created by invoking the public, no-argument constructor of that class.

Example: the name

com.mycompany.MyLayoutStrategy, when used as the value of the propertyhibernate.search.backend.layout.strategyto configure the Elasticsearch index layout strategy, resolves to an instance ofcom.mycompany.MyLayoutStrategy. -

If no name is given, an instance is created by invoking the public, no-argument constructor of the referenced type.

Example: the class

com.mycompany.MyLayoutStrategy.class(ajava.lang.Class, not aString), when used as the value of the propertyhibernate.search.backend.layout.strategyto configure the Elasticsearch index layout strategy, resolves to an instance ofcom.mycompany.MyLayoutStrategy.

-

|

It is possible to control bean retrieval more finely by selecting a |

5.6.3. Bean injection

All beans resolved by Hibernate Search using a supported framework can take advantage of injection features of this framework.

For example a bean can be injected with another bean

by annotating one of its fields in the bridge with @Inject.

Lifecycle annotations such as @PostConstruct should also work as expected.

Even when not using any framework,

it is still possible to take advantage of the BeanResolver.

This component, passed to several methods during bootstrap,

exposes several methods to resolve

a reference into a bean,

exposing programmatically what would usually be achieved with an @Inject annotation.

See the javadoc of BeanResolver for more information.

5.6.4. Bean lifecycle

As soon as beans are no longer needed,

Hibernate Search will release them and let the dependency injection framework

call the appropriate methods (@PreDestroy, …).

Some beans are only necessary during bootstrap,

such as ElasticsearchAnalysisConfigurers,

so they will be released just after bootstrap.

Other beans are necessary at runtime, such as ValueBridges,

so they will be released on shutdown.

|

Be careful to define the scope of your beans as appropriate. Immutable beans or beans used only once such as For these beans, it is recommended to use the "dependent" scope (CDI terminology) or the "prototype" scope (Spring terminology). When in doubt, this is also generally the safest choice for beans injected into Hibernate Search. |

Beans resolved by Hibernate Search using a supported framework can take advantage of injection features of this framework.

5.7. Background failure handling

Hibernate Search generally propagates exceptions occurring in background threads to the user thread,

but in some cases, such as Lucene segment merging failures,

or some failures during automatic indexing,

the exception in background threads cannot be propagated.

By default, when that happens, the failure is logged at the ERROR level.

To customize background failure handling, you will need to:

-

Define a class that implements the

org.hibernate.search.engine.reporting.FailureHandlerinterface. -

Configure the backend to use that implementation by setting the configuration property

hibernate.search.background_failure_handlerto a bean reference pointing to the implementation, for exampleclass:com.mycompany.MyFailureHandler.

Hibernate Search will call the handle methods whenever a failure occurs.

FailureHandlerpackage org.hibernate.search.documentation.reporting.failurehandler;

import java.util.ArrayList;

import java.util.List;

import org.hibernate.search.engine.reporting.EntityIndexingFailureContext;

import org.hibernate.search.engine.reporting.FailureContext;

import org.hibernate.search.engine.reporting.FailureHandler;

import org.hibernate.search.util.impl.test.rule.StaticCounters;

public class MyFailureHandler implements FailureHandler {

@Override

public void handle(FailureContext context) { (1)

String failingOperationDescription = context.failingOperation().toString(); (2)

Throwable throwable = context.throwable(); (3)

// ... report the failure ... (4)

}

@Override

public void handle(EntityIndexingFailureContext context) { (5)

String failingOperationDescription = context.failingOperation().toString();

Throwable throwable = context.throwable();

List<String> entityReferencesAsStrings = new ArrayList<>();

for ( Object entityReference : context.entityReferences() ) { (6)

entityReferencesAsStrings.add( entityReference.toString() );

}

// ... report the failure ... (7)

}

}| 1 | handle(FailureContext) is called for generic failures that do not fit any other specialized handle method. |

| 2 | Get a description of the failing operation from the context. |

| 3 | Get the throwable thrown when the operation failed from the context. |

| 4 | Use the context-provided information to report the failure in any relevant way. |

| 5 | handle(EntityIndexingFailureContext) is called for failures occurring when indexing entities. |

| 6 | On top of the failing operation and throwable, the context also lists references to entities that could not be indexed correctly because of the failure. |

| 7 | Use the context-provided information to report the failure in any relevant way. |

(1)

hibernate.search.background_failure_handler = org.hibernate.search.documentation.reporting.failurehandler.MyFailureHandler| 1 | Assign the background failure handler using a Hibernate Search configuration property. |

|

When a failure handler’s |

6. Mapping Hibernate ORM entities to indexes

6.1. Configuration

6.1.1. Enabling/disabling Hibernate Search

The Hibernate Search integration into Hibernate ORM is enabled by default as soon as it is present in the classpath.

If for some reason you need to disable it,

set the hibernate.search.enabled boolean property to false.

6.1.2. Configuring the mapping

By default, Hibernate Search will automatically process mapping annotations for entity types,

as well as nested types in those entity types, for instance embedded types.

See Entity/index mapping and Mapping a property to an index field with @GenericField, @FullTextField, …

to get started with annotation-based mapping.

If you want to ignore these annotations, set hibernate.search.mapping.process_annotations to false.

To configure the mapping programmatically, see Programmatic mapping.

6.1.3. Other configuration properties

Other configuration properties are mentioned in the relevant parts of this documentation. You can find a full reference of available properties in the Hibernate Search javadoc: org.hibernate.search.mapper.orm.cfg.HibernateOrmMapperSettings.

6.2. Programmatic mapping

6.2.1. Basics

Most examples in this documentation use annotation-based mapping, which is generally enough for most applications. However, some applications have needs that go beyond the what annotations can offer:

-

a single entity type must be mapped differently for different deployments — e.g. for different customers.

-

many entity types must be mapped similarly, without code duplication.

To address those needs, you can use programmatic mapping: define the mapping through code that will get executed on startup.

Implementing a programmatic mapping requires two steps:

-

Define a class that implements the

org.hibernate.search.mapper.orm.mapping.HibernateOrmSearchMappingConfigurerinterface. -

Configure Hibernate Search to use that implementation by setting the configuration property

hibernate.search.mapping.configurerto a bean reference pointing to the implementation, for exampleclass:com.mycompany.MyMappingConfigurer.

Hibernate Search will call the configure method of this implementation on startup,

and the configurer will be able to take advantage of a DSL to define

the programmatic mapping.

|

Programmatic mapping is declarative and exposes the exact same features as annotation-based mapping. In order to implement more complex, "imperative" mapping, for example to combine two entity properties into a single index field, use custom bridges. |

|

Alternatively, if you only need to repeat the same mapping for several types or properties, you can apply a custom annotation on those types or properties, and have Hibernate Search execute some programmatic mapping code when it encounters that annotation. This solution doesn’t require a mapping configurer. See Custom mapping annotations for more information. |

See below for an example. The following sections also provide one example of programmatic mapping for each feature.

public class MySearchMappingConfigurer implements HibernateOrmSearchMappingConfigurer {

@Override

public void configure(HibernateOrmMappingConfigurationContext context) {

ProgrammaticMappingConfigurationContext mapping = context.programmaticMapping(); (1)

TypeMappingStep bookMapping = mapping.type( Book.class ); (2)

bookMapping.indexed(); (3)

bookMapping.property( "title" ) (4)

.fullTextField().analyzer( "english" ); (5)

}

}| 1 | Access the programmatic mapping. |

| 2 | Access the programmatic mapping of type Book. |

| 3 | Define Book as indexed. |

| 4 | Access the programmatic mapping of property title of type Book. |

| 5 | Define an index field based on property title of type Book. |

|

By default, programmatic mapping will be merged with annotation mapping (if any). To disable annotation mapping, set |

6.2.2. Mapping Map-based models

"dynamic-map" entity models,

i.e. models based on java.util.Map instead of custom classes,

cannot be mapped using annotations.

However, they can be mapped using the programmatic mapping API:

you just need to refer to the types by their name using context.programmaticMapping().type("thename"):

-

The entity name for dynamic entity types.

-

The "role" for dynamic embedded/component types, i.e. the name of the owning entity, followed by a dot ("."), followed by the dot-separated path to the component in that entity. For example

MyEntity.myEmbeddedorMyEntity.myEmbedded.myNestedEmbedded.

However, support for "dynamic-map" entity models is limited. In particular:

-

Mass indexing dynamic-map entities is not supported. See HSEARCH-3771.

6.3. Entity/index mapping

6.3.1. Basics

In order to index an entity, it must be annotated with @Indexed.

@Indexed@Entity

@Indexed

public class Book {|

Subclasses inherit the If the fact that |

By default:

-

The index name will be equal to the entity name, which in Hibernate ORM is set using the

@Entityannotation and defaults to the simple class name. -

The identifier of indexed documents will be generated from the entity identifier. Most types commonly used for entity identifiers are supported out of the box, but for more exotic types you may need specific configuration. See Mapping the document identifier for details.

-