JBoss.org Community Documentation

Authors

Nov 2008

Abstract

This book is a guide to the administration and configuration of the JBoss Application Server 5.

- What this Book Covers

- About JBoss

- 1. Introduction

- I. JBoss AS Infrastructure

- II. JBoss Application Server 5 Configuration

-

- 3. Deployment

- 4. Microcontainer

- 5. Web Services

-

- 5.1. Who needs web services?

- 5.2. Service Oriented Architecture (SOA)

- 5.3. What web services are not...

- 5.4. Jboss Web services Attachment support with XOP (XML-binary Optimized Packaging) and SwA

- 5.5. Using SwaRef with JAX-WS endpoints

- 5.6. MTOM/XOP

- 5.7. Enabling MTOM per endpoint

- 5.8. Document/Literal

- 5.9. Document/Literal (Bare)

- 5.10. Document/Literal (Wrapped)

- 5.11. RPC/Literal

- 5.12. RPC/Encoded

- 5.13. Web Service Endpoints

- 5.14. Plain old Java Object (POJO)

- 5.15. The endpoint as a web application

- 5.16. Packaging the endpoint

- 5.17. Accessing the generated WSDL

- 5.18. EJB3 Stateless Session Bean (SLSB)

- 5.19. Endpoint Provider

- 5.20. WebServiceContext

- 5.21. Web Service Clients

- 5.22. Common API

- 5.23. DataBinding

- 5.24. Attachments

- 5.25. Tools

- 5.26. Web Service Extensions

- 5.27. JBossWS Extensions

- 5.28. Web Services Appendix

- 5.29. References

- 6. JBoss5 Virtual Deployment Framework

- 7. JBOSS AOP

- 8. JBoss Cache

- 9. JBoss Transactions

- 10. JGroups

- 11. Remoting

- 12. JBoss Messaging

-

- 12.1. Configuring JBoss Messaging

- 12.2. Configuring the ServerPeer

- 12.3. Server Attributes

-

- 12.3.1. ServerPeerID

- 12.3.2. DefaultQueueJNDIContext

- 12.3.3. DefaultTopicJNDIContext

- 12.3.4. PostOffice

- 12.3.5. DefaultDLQ

- 12.3.6. DefaultMaxDeliveryAttempts

- 12.3.7. DefaultExpiryQueue

- 12.3.8. DefaultRedeliveryDelay

- 12.3.9. MessageCounterSamplePeriod

- 12.3.10. FailoverStartTimeout

- 12.3.11. FailoverCompleteTimeout

- 12.3.12. DefaultMessageCounterHistoryDayLimit

- 12.3.13. ClusterPullConnectionFactory

- 12.3.14. DefaultPreserveOrdering

- 12.3.15. RecoverDeliveriesTimeout

- 12.3.16. SuckerPassword

- 12.3.17. StrictTCK

- 12.3.18. Destinations

- 12.3.19. MessageCounters

- 12.3.20. MessageCountersStatistics

- 12.3.21. SupportsFailover

- 12.3.22. PersistenceManager

- 12.3.23. JMSUserManager

- 12.3.24. SecurityStore

- 12.4. MBean operations of the ServerPeer MBean

-

- 12.4.1. DeployQueue

- 12.4.2. UndeployQueue

- 12.4.3. DestroyQueue

- 12.4.4. DeployTopic

- 12.4.5. UndeployTopic

- 12.4.6. DestroyTopic

- 12.4.7. ListMessageCountersHTML

- 12.4.8. ResetAllMesageCounters

- 12.4.9. ResetAllMesageCounters

- 12.4.10. EnableMessageCounters

- 12.4.11. DisableMessageCounters

- 12.4.12. RetrievePreparedTransactions

- 12.4.13. ShowPreparedTransactions

- 13. Use Alternative Databases with JBoss AS

-

- 13.1. How to Use Alternative Databases

- 13.2. Install JDBC Drivers

- 13.3. Creating a DataSource for the External Database

- 13.4. Common configuration for DataSources and ConnectionFactorys

- 13.5. Change Database for the JMS Services

- 13.6. Support Foreign Keys in CMP Services

- 13.7. Specify Database Dialect for Java Persistence API

- 13.8. Change Other JBoss AS Services to Use the External Database

- 13.9. A Special Note About Oracle DataBases

- 13.10. DataSource configuration

- 13.11. Parameters specific for java.sql.Driver usage

- 13.12. Parameters specific for javax.sql.XADataSource usage

- 13.13. Common DataSource parameters

- 13.14. Generic Datasource Sample

- 13.15. Configuring a DataSource for remote usage

- 13.16. Configuring a DataSource to use login modules

- 14. Pooling

- 15. Frequently Asked Questions

- III. Clustering Guide

-

- 16. Clustering

- 17. Clustered JNDI Services

- 18. Clustered Session EJBs

- 19. Clustered Entity EJBs

- 20. HTTP Services

-

- 20.1. Configuring load balancing using Apache and mod_jk

- 20.2. Download the software

- 20.3. Configure Apache to load mod_jk

- 20.4. Configure worker nodes in mod_jk

- 20.5. Configuring JBoss to work with mod_jk

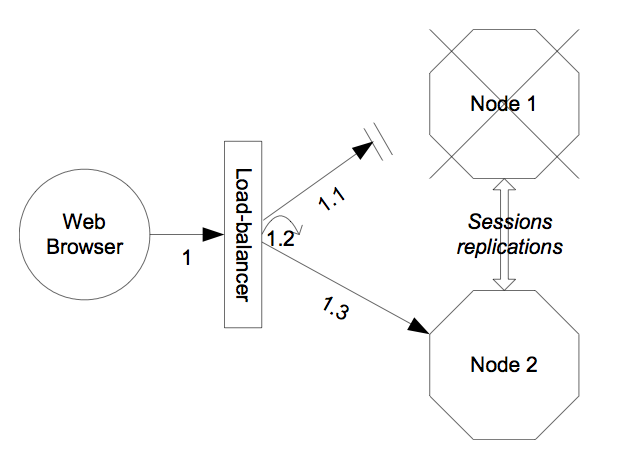

- 20.6. Configuring HTTP session state replication

- 20.7. Enabling session replication in your application

- 20.8. Using FIELD level replication

- 20.9. Monitoring session replication

- 20.10. Using Clustered Single Sign On

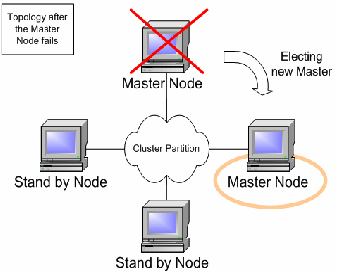

- 20.11. Clustered Singleton Services

- 21. JBoss Messaging Clustering Notes

- 22. JBossCache and JGroups Services

-

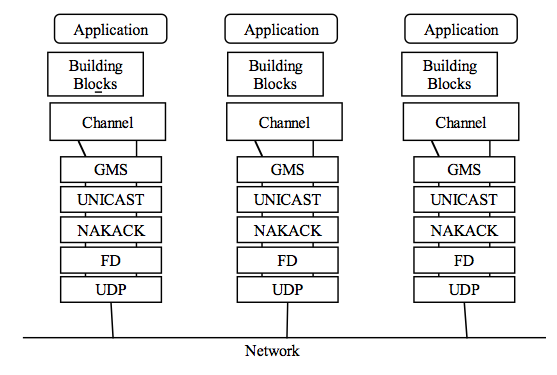

- 22.1. JGroups Configuration

- 22.2. Common Configuration Properties

- 22.3. Transport Protocols

- 22.4. Discovery Protocols

- 22.5. Failure Detection Protocols

- 22.6. Reliable Delivery Protocols

- 22.7. Other Configuration Options

-

- 22.7.1. Group Membership

- 22.7.2. Flow Control

- 22.7.3. Fragmentation

- 22.7.4. State Transfer

- 22.7.5. Distributed Garbage Collection

- 22.7.6. Merging

- 22.7.7. Binding JGroups Channels to a particular interface

- 22.7.8. Isolating JGroups Channels

- 22.7.9. Changing the Group Name

- 22.7.10. Changing the multicast address and port

- 22.7.11. JGroups Troubleshooting

- 22.7.12. Causes of missing heartbeats in FD

The primary focus of this book is the presentation of the standard JBoss 4.2 architecture components from both the perspective of their configuration and architecture. As a user of a standard JBoss distribution you will be given an understanding of how to configure the standard components. Note that this book is not an introduction to J2EE or how to use J2EE in applications. It focuses on the internal details of the JBoss server architecture and how our implementation of a given J2EE container can be configured and extended.

As a JBoss developer, you will be given a good understanding of the architecture and integration of the standard components to enable you to extend or replace the standard components for your infrastructure needs. We also show you how to obtain the JBoss source code, along with how to build and debug the JBoss server.

JBoss, a division of Red Hat, is the global leader in open source middleware software, combining enterprise-class JEMS open source software with the industry’s leading services and tools to provide simply a better way to transform your business to Service-Oriented Architecture (SOA).

JBoss, pioneered the disruptive Professional Open Source model, which combines the best of the open source and proprietary software worlds to make open source a safe choice for the enterprise and give CIOs peace of mind. This includes the royalty-free software, transparent development and active community inherent in open source and the accountability and professional support services expected of a traditional software vendor. The company finds innovative open source projects and professionalizes the project from a hobby into a livelihood by hiring the lead developer(s), often the founders themselves. JBoss provides the resources, core development and support services to enable popular open source projects to scale into enterprise-class software.

Coverage: North America and Europe on a direct basis. JBoss provides coverage worldwide via our extensive authorized partner network.

Mission Statement: JBoss' mission is to revolutionize the way enterprise middleware software is built, distributed, and supported through the Professional Open Source model. We are committed to delivering innovative and high quality technology and services that make JBoss the safe choice for enterprises and software providers.

Customers: Enterprise customers deploying JBoss technologies in mission-critical applications with professional services support from JBoss include Aviva Canada, Continental Airlines, La Quinta, NLG, MCI, Nielsen Media Research and Travelocity. For a current list of customer success stories, please visit the Customers section of our website.

Partners: JBoss works with software and hardware vendors, systems integrators and OEMs to deliver implementation services, frontline support, and certification for products embedded with JBoss technologies. For more information on the JBoss Certified Partner Program, please visit the Partners section of our website.

Professional Open Source(tm) from JBoss Inc. offers you:

-

Standards-based and stable Java Middleware technology

-

No cost open source product licenses

-

Backed by a professional and expert support staff

-

Comprehensive services including Professional Support, Training, and Consulting

-

A very large and active community of developers

-

An extensive worldwide network of authorized and certified partners

Benefits of Professional Open Source from JBoss Inc.:

-

Lowest possible total cost of ownership

-

Reliable and safe technology

-

Support, accountability, and trust from a stable company

-

Expedited problem resolution compared to commercial software vendors

The basic idea behind open source is very simple: When programmers can read, redistribute, and modify the source code for a piece of software, the software evolves. People improve it, people adapt it, people fix bugs. And this can happen at a speed that, if one is used to the slow pace of conventional software development, seems astonishing. Open Source is an often-misunderstood term relating to free software. The Open Source Initiative (OSI) web site provides a number of resources that define the various aspects of Open Source including an Open Source Definition at: http://www.opensource.org/docs/definition.html. The following quote from the OSI home page summarizes the key aspects as they relate to JBoss nicely:

|

We in the open source community have learned that this rapid evolutionary process produces better software than the traditional closed model, in which only very few programmers can see the source and everybody else must blindly use an opaque block of bits. Open Source Initiative exists to make this case to the commercial world. Open source software is an idea whose time has finally come. For twenty years it has been building momentum in the technical cultures that built the Internet and the World Wide Web. Now it's breaking out into the commercial world, and that's changing all the rules. Are you ready? |

||

| --The Open Source Initiative | ||

JBoss is the leader in the second generation of open source, which we have termed Professional Open Source. The Professional Open Source methodology is based on the following:

-

We hire and pay experts in the open source community to write exceptional and innovative software full-time.

-

We only use open source licenses that are friendly to end-user IT shops, independent software vendors, and the community itself.

-

Directly and through our authorized partners, we deliver the best support services available; all of which are backed up by the real product experts.

-

Unlike first generation open source providers, we control the direction and source code for our projects. We can ensure that all bug fixes and patches are rolled into future versions of our products.

-

By combining enterprise-proven technology, business-friendly open source licenses, and world-class support services, we have made Professional Open Source the safe choice for end-user enterprises and independent software vendors alike.

If you find a typographical error in the Administration and Configuration Guide, or if you have thought of a way to make this manual better, we would love to hear from you! Please submit a report in JIRA: http://jira.jboss.com against the project JBoss Application Server and component Documentation.

If you have a suggestion for improving the documentation, try to be as specific as possible when describing it. If you have found an error, please include the section number and some of the surrounding text so we can find it easily.

Note

Be sure to give us your name so you can receive full credit.

Note

This content is taken from svn.jboss.org/repos/jbossas/projects/docs/trunk and has yet to be branched.

To access the content directly and make changes yourself:

svn co https://svn.jboss.org/repos/jbossas/projects/docs/trunk/AS_5/Administration_And_Configuration_Guide/ --username yourname

The directory structure includes other languages the book will be translated in. For English please edit the files under en-US .

To identify the filename you wish to edit, please check the chapter title which will match the file's name. The files are written in Docbook xml. After saving your changes please validate the files you've edited for error's before committing your changes.

JBoss Application Server 5 is built on top of the new JBoss Microcontainer. The JBoss Microcontainer is a lightweight container that supports direct deployment, configuration and lifecycle of plain old Java objects (POJOs). The JBoss Microcontainer project is standalone and replaces the JBoss JMX Microkernel used in the 3.x and 4.x JBoss Application Servers. Project goals include:

-

Make the JBoss Microcontainer available as a standalone project.

-

Embrace JBoss' POJO middleware strategy.

-

Enable JBoss services to be easily deployed in the other containers.

-

Allow the features to be used in more restrictive environments (e.g. Applets, J2ME, etc.).

-

Provide POJO configuration management, support for dependencies, and support for clustering.

The JBoss Microcontainer integrates nicely with the JBoss Aspect Oriented Programming framework (JBoss AOP). JBoss AOP is discussed in Chapter 7, JBOSS AOP Support for JMX in JBoss AS 5 remains strong and MBean services written against the old Microkernel are expected to work.

JBoss AS5 is designed around the advanced concept of a Virtual Deployment Framework (VDF). The JBoss5 Virtual Deployment Framework (VDF) takes the aspect oriented design of many of the earlier JBoss containers and applies it to the deployment layer. It is also based on the POJO microntainer rather than JMX as in previous releases. More information about the Virtual Deployment Framework (VDF) can be found in Chapter 6, JBoss5 Virtual Deployment Framework .

A sample Java EE 5 application that can be run on top of JBoss 5.0.0.Beta4 and above which demonstrates many interesting technologies is the Seam Booking Application available on http://seam.demo.jboss.com/home.seam. This application makes use of the following technologies running on JBoss AS5:

-

EJB3

-

Stateful Session Beans

-

Stateless Session Beans

-

JPA (w/ Hibernate validation)

-

JSF

-

Facelets

-

Ajax4JSF

-

Seam

Many key features of JBoss AS5 are provided by integrating other standalone JBoss projects which include: -

-

JBoss EJB3 included with JBoss 5 provides the implementation of the latest revision of the Enterprise Java Beans (EJB) specification. EJB 3.0 is a deep overhaul and simplification of the EJB specification. EJB 3.0's goals are to simplify development, facilitate a test driven approach, and focus more on writing plain old java objects (POJOs) rather than coding against complex EJB APIs.

-

JBoss Messaging is a high performance JMS provider in the JBoss Enterprise Middleware Stack (JEMS), included with JBoss 5 as the default messaging provider. It is also the backbone of the JBoss ESB infrastructure. JBoss Messaging is a complete rewrite of JBossMQ, which is the default JMS provider for the JBoss AS 4.x series.

-

JBossCache 2.0 that comes in two flavors. A traditional tree-structured node-based cache and a PojoCache, an in-memory, transactional, and replicated cache system that allows users to operate on simple POJOs transparently without active user management of either replication or persistency aspects.

-

JBossWS 2 is the web services stack for JBoss 5 providing Java EE compatible web services, JAXWS-2.0.

-

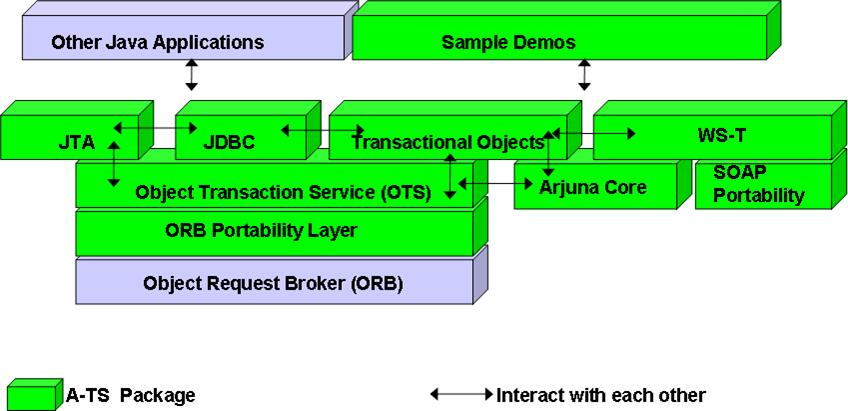

JBoss Transactions is the default transaction manager for JBoss 5. JBoss Transactions is founded on industry proven technology and 18 year history as a leader in distributed transactions, and is one of the most interoperable implementations available.

-

JBoss Web is the Web container in JBoss 5, an implementation based on Apache Tomcat that includes the Apache Portable Runtime (APR) and Tomcat native technologies to achieve scalability and performance characteristics that match and exceed the Apache Http server.

JBoss AS5 includes numerous features and bug fixes, many of them carried over upstream from the JBoss AS4.x codebase. See the Detailed Release Notes section for the full details.

-

99% of web apps involve a database

-

mission critical web applications likely to be clustered.

-

Simple web applications with JSPs/Servlets upgrade to jboss As with tomcat embedded.

-

Intermediate web applications with JSPs/Servlets using a web framework such as Struts, Java Server Faces, Cocoon, Tapestry, Spring, Expresso, Avalon, Turbine.

-

Complex web apps with JSPs/Servlets, SEAM, Enterprise Java Beans (EJB), Java Messaging (JMS), caching etc.

-

Cross application middleware (JMS, Corba, JMX etc).

The community JBoss Application Server is sponsored by JBoss/Red Hat. It allows innovation at a faster pace.

Fueled by the thriving JBoss.org community, JBoss Enterprise Middleware is a comprehensive middleware portfolio that combines and integrates the latest enterprise-ready features from JBoss.org into stable, enterprise-class platform distributions. JBoss Enterprise Middleware further mitigates risk with industry leading 24x7 support and multi-year update and maintenance policies. This means you have an enterprise-class open source option for application and service hosting, content aggregation, data federation, and service integration – for both development and production.

JBoss Enterprise Application Platform is a rigorously tested, stable, supported platform for developing and deploying mission critical Java applications and services. It integrates code from the JBoss.org Application Server/Clustering project, JBoss Hibernate Framework, JBoss Seam Framework into a single distribution with a single patch and update stream, multi-year maintenance policy. JBoss EAP is certified on 17 operating systems, 5 Database Management systems and JVM combinations. It also integrates with JBoss Developer Studio and the JBoss Operations Network.

Key benefits of using JBoss:

-

significant CPU deployments,

-

commitment to web apps, and experience with open source

-

The need to reduce operational costs. You can reduce enterprise middleware costs by upto 70%. With zero licence fees you can refocus savings on business differentiation. JBoss Middleware is Enterprise-class technology with significantly lower Total Cost of ownership (TCO).

-

The need/desire to shed vendor lock in and high maintenance/support fees. Jboss Mitigates risks and helps customers avoid vendor lock in. You can therefore start new projects without any cost prohibition. You can use the Application Server with other middleware such as Hibernate and Websphere. With Red Hat open source Assurance program the code is always there and not dependent on a single vendor.

-

unplanned growth of systems leads to unplanned maintenance and licence cost increases.

-

System consolidation – mergers and acquisitions.

-

You can consistently meet service level agreements (SLA's) with as little change as possible by back porting patches to previous versions. With 24x7x365 support with 1 hour SLA you are guaranteed support when you need it. Please visit http://jboss.com/services/profsupport for more details. Performance tuning and certification services are also available to our customers. Automated patch and upgrade management on JBoss Enterprise middleware increases reliability of resources with minimal downtime if any.

-

Multi channel integration needs leading to Service Oriented Architecture (SOA).

More information about JBoss Enterprise Application Platform and Enterprise middleware can be obtained on http://www.jboss.com/products/index and http://www.redhat.com/promo/migration/

The following are current compatibility issues for JBoss AS5:

-

JBossAS 5 runs under Java 5 but there is an ongoing task to make it run safely under Java 6, too http://jira.jboss.org/jira/browse/JBAS-5031. If using a Sun Java 6 runtime, you may want to set

-Dsun.lang.ClassLoader.allowArraySyntax=true, as described in http://jira.jboss.org/jira/browse/JBAS-4491. -

If using proprietary JBoss/EJB3 annotations, those have moved into the

org.jboss.ejb3.annotationpackage, http://jira.jboss.org/jira/browse/EJBTHREE-1099. Those are now included in a new artifact,jboss-ejb3-ext-api.jar -

Work on EJB3 Extended Persistence Context support is not completed, http://jira.jboss.org/jira/browse/EJBTHREE-1026.

-

Interoperating with previous JBoss EJB3 implementations may present problems due to serialVersionUIDs issues, http://jira.jboss.org/jira/browse/EJBTHREE-1118.

-

EJB 2.1 View requirements

-

Home extending EJBLocalHome? or EJBHome must be defined

-

Remote or Local interface must either be defined via @Local/@Remote or via return type of "create<METHOD>" methods of the Home

-

EJB 2.1 Remote/Local interfaces must extend EJBObject/EJBLocalObject

-

-

@Local/@Remote may be used to define either EJB 3.0 View Business Interface, or EJB 2.1 View Remote/Local interface

-

Use of JBoss Cache 2.x. JBC 2.x has a significantly different API from the 1.x releases used in JBoss AS 4.x and 3.2.x.

Table of Contents

The following diagram illustrates an overview of the JBoss.org community projects including the JBoss Appplication Server and its components.

The directory structure of JBoss 5 resembles that of the 4.x series with some notable differences:

-<JBOSS_HOME>/ - the path to your JBoss AS installation. + bin/ - contains start scripts and run.jar + client/ - client jars + docs/ - docs, schemas/dtds, examples + lib/ - core bootstrap jars, different with the introduction of the microcontainer and breakup of jboss-common. + server/ - contains the same server configuration directories. + default/ configuration + conf/ - contains server configuration files used when starting the server. changes in here are detected on restarting your server. # bootstrap-beans.xml - new mc kernel bootstrap configuration # jax-ws-catalog.xml - oasis catalog driven schema/dtd namespace configuration # jbossjta-properties.xml - new JBossTS properties # jboss-service.xml - legacy static mbeans for compatibility # jndi.properties - the same jndi props # log4j.xml - the same log4j config # login-config.xml - the same jaas login config # props/ - the same default jaas login properties files # standardjaws.xml - obsolete cmp config # standardjbosscmp-jdbc.xml - the same cmp2 config # standardjboss.xml - the same ejb2 config # xmdesc/ - legacy xmbean descriptors + data/ - contains hypersonic local database, transactions, xmbean configuration files. + deploy/ - this is where services and your java applications are deployed. You can deploy an application on the JBoss application server by simply copying the application's (WAR, EAR or JAR files) into this directory. + deployers/ - new vdf deployers # bsh-deployer - beanshell deployer # ejb3.deployer - ejb3 deployers # jboss-aop-jboss5.deployer - aspect deployer # jboss-jca.deployer - JCA deployers # jbossweb.deployer - war deployers # jbossws.deployer - web services deployers # ear-deployer-beans.xml - ear deployers # ejb-deployer-beans.xml - ejb2.x deployers # metadata-beans.xml - metadata handlers # security-deployer-beans.xml - security deployers # profileservice-beans.xml.bak - an example of the repository based profile service + lib/ - the JBoss AS static library files shared by the services and applications in the respective configuration.

Table of Contents

- 3. Deployment

- 4. Microcontainer

- 5. Web Services

-

- 5.1. Who needs web services?

- 5.2. Service Oriented Architecture (SOA)

- 5.3. What web services are not...

- 5.4. Jboss Web services Attachment support with XOP (XML-binary Optimized Packaging) and SwA

- 5.5. Using SwaRef with JAX-WS endpoints

- 5.6. MTOM/XOP

- 5.7. Enabling MTOM per endpoint

- 5.8. Document/Literal

- 5.9. Document/Literal (Bare)

- 5.10. Document/Literal (Wrapped)

- 5.11. RPC/Literal

- 5.12. RPC/Encoded

- 5.13. Web Service Endpoints

- 5.14. Plain old Java Object (POJO)

- 5.15. The endpoint as a web application

- 5.16. Packaging the endpoint

- 5.17. Accessing the generated WSDL

- 5.18. EJB3 Stateless Session Bean (SLSB)

- 5.19. Endpoint Provider

- 5.20. WebServiceContext

- 5.21. Web Service Clients

- 5.22. Common API

- 5.23. DataBinding

- 5.24. Attachments

- 5.25. Tools

- 5.26. Web Service Extensions

- 5.27. JBossWS Extensions

- 5.28. Web Services Appendix

- 5.29. References

- 6. JBoss5 Virtual Deployment Framework

- 7. JBOSS AOP

- 8. JBoss Cache

- 9. JBoss Transactions

- 10. JGroups

- 11. Remoting

- 12. JBoss Messaging

-

- 12.1. Configuring JBoss Messaging

- 12.2. Configuring the ServerPeer

- 12.3. Server Attributes

-

- 12.3.1. ServerPeerID

- 12.3.2. DefaultQueueJNDIContext

- 12.3.3. DefaultTopicJNDIContext

- 12.3.4. PostOffice

- 12.3.5. DefaultDLQ

- 12.3.6. DefaultMaxDeliveryAttempts

- 12.3.7. DefaultExpiryQueue

- 12.3.8. DefaultRedeliveryDelay

- 12.3.9. MessageCounterSamplePeriod

- 12.3.10. FailoverStartTimeout

- 12.3.11. FailoverCompleteTimeout

- 12.3.12. DefaultMessageCounterHistoryDayLimit

- 12.3.13. ClusterPullConnectionFactory

- 12.3.14. DefaultPreserveOrdering

- 12.3.15. RecoverDeliveriesTimeout

- 12.3.16. SuckerPassword

- 12.3.17. StrictTCK

- 12.3.18. Destinations

- 12.3.19. MessageCounters

- 12.3.20. MessageCountersStatistics

- 12.3.21. SupportsFailover

- 12.3.22. PersistenceManager

- 12.3.23. JMSUserManager

- 12.3.24. SecurityStore

- 12.4. MBean operations of the ServerPeer MBean

-

- 12.4.1. DeployQueue

- 12.4.2. UndeployQueue

- 12.4.3. DestroyQueue

- 12.4.4. DeployTopic

- 12.4.5. UndeployTopic

- 12.4.6. DestroyTopic

- 12.4.7. ListMessageCountersHTML

- 12.4.8. ResetAllMesageCounters

- 12.4.9. ResetAllMesageCounters

- 12.4.10. EnableMessageCounters

- 12.4.11. DisableMessageCounters

- 12.4.12. RetrievePreparedTransactions

- 12.4.13. ShowPreparedTransactions

- 13. Use Alternative Databases with JBoss AS

-

- 13.1. How to Use Alternative Databases

- 13.2. Install JDBC Drivers

- 13.3. Creating a DataSource for the External Database

- 13.4. Common configuration for DataSources and ConnectionFactorys

- 13.5. Change Database for the JMS Services

- 13.6. Support Foreign Keys in CMP Services

- 13.7. Specify Database Dialect for Java Persistence API

- 13.8. Change Other JBoss AS Services to Use the External Database

- 13.9. A Special Note About Oracle DataBases

- 13.10. DataSource configuration

- 13.11. Parameters specific for java.sql.Driver usage

- 13.12. Parameters specific for javax.sql.XADataSource usage

- 13.13. Common DataSource parameters

- 13.14. Generic Datasource Sample

- 13.15. Configuring a DataSource for remote usage

- 13.16. Configuring a DataSource to use login modules

- 14. Pooling

- 15. Frequently Asked Questions

Deploying applications on JBoss AS is very easy. You just need to copy the application into the JBOSS_HOME/server/default/deploy directory. You can replace default with different server profiles such as all or minimal. We will cover those later in this chapter. JBoss AS constantly scans the deploy directory to pick up new applications or any changes to existing applications. So, you can "hot deploy" application on the fly while JBoss AS is still running.

You can deploy several different types of enterprise applications in JBoss AS:

-

The WAR application archive (e.g., myapp.war) packages a Java EE web application in a JAR file. It contains servlet classes, view pages, libraries, and deployment descriptors such as web.xml, faces-config.xml, and jboss-web.xml etc..

-

The EAR application archive (e.g., myapp.ear) packages a Java EE enterprise application in a JAR file. It typically contains a WAR file for the web module, JAR files for EJB modules, as well as deployment descriptors such as application.xml and jboss-app.xml etc.

-

The SAR application archive (e.g., myservice.sar) packages a JBoss service in a JAR file. It is mostly used by JBoss internal services.

-

The *-ds.xml file defines connections to external databases. The data source can then be reused by all applications and services in JBoss AS via the internal JNDI.

-

You can deploy XML files with MBean service definitions. If you have the appropriate JAR files available in the deploy or lib directories, the MBeans specified in the XML files will be started. This is the way how you start many JBoss AS internal services, such as the JMS queues.

-

You can also deploy JAR files containing EJBs or other service objects directly in JBoss AS.

Exploded Deployment

The WAR, EAR, and SAR deployment packages are really just JAR files with special XML deployment descriptors in directories like META-INF and WEB-INF. JBoss AS allows you to deploy those archives as expanded directories instead of JAR files. That allows you to make changes to web pages etc on the fly without re-deploying the entire application. If you do need to re-deploy the exploded directory without re-start the server, you can just "touch" the deployment descriptors (e.g., the WEB-INF/web.xml in a WAR and the META-INF/application.xml in an EAR) to update their timestamps.

The JBoss Application Server ships with three server configurations. You can choose which configuration to start by passing the -c parameter to the server startup script. For instance, command run.sh -c all would start the server in the all configuration. Each configuration is contained in a directory named JBOSS_HOME/server/[config name]/. You can look into each server configuration's directory to see the default services, applications, and libraries supported in the configuration.

-

The minimal configuration starts the core server container without any of the enterprise services. It is a good starting point if you want to build a customized version of JBoss AS that only contains the services you need.

-

The default configuration is the mostly common used configuration for application developers. It supports the standard J2EE 1.4 and most of the Java EE 5.0 programming APIs (e.g., JSF and EJB3).

-

The all configuration is the default configuration with clustering support and other enterprise extensions.

The detailed services and APIs supported in each of those configurations will be discussed throughout this book.

JBoss Application Server 5.0 uses the microcontainer to integrate enterprise services together with a Servlet/JSP container, EJB container, deployers and management utilities in order to provide a standard Java EE environment. If you need additional services then you can simply deploy these on top of Java EE to provide the functionality you need. Likewise you are free to remove any services that you don't need simply by changing the configuration. You can even use the microcontainer to do this in other environments such as Tomcat and GlassFish since you can plug in different classloading models during the service deployment phase.

Since JBoss Microcontainer is very lightweight and deals with POJOs it can also be used to deploy services into a Java ME runtime environment. This opens up new possibilities for mobile applications that can now take advantage of enterprise services without requiring a full JEE application server.

In common with other lightweight containers JBoss Microcontainer uses dependency injection to wire individual POJOs together to create services. Configuration is performed using either annotations or XML depending on where the information is best located. Finally unit testing is made extremely simple thanks to a helper class that extends JUnit to setup the test environment, allowing you to access POJOs and services from your test methods using just a few lines of code.

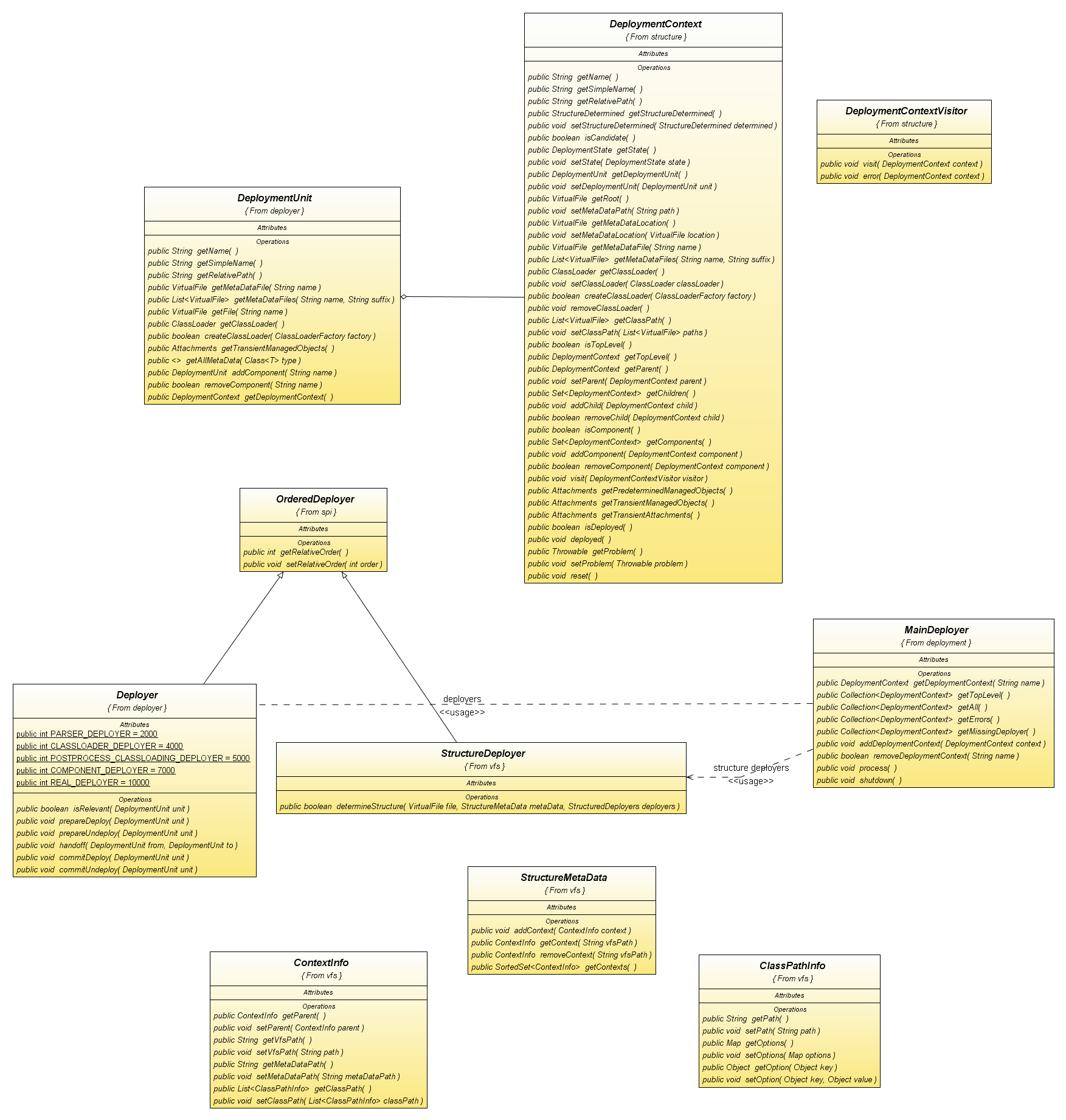

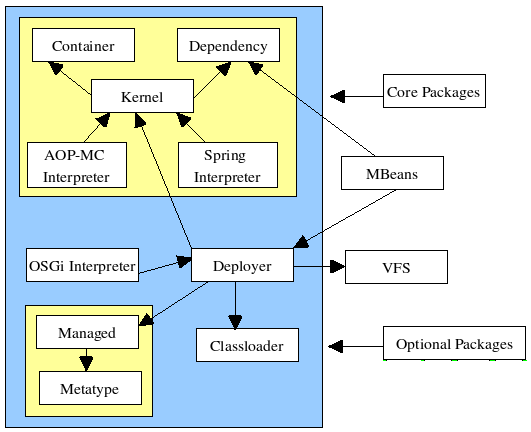

This section introduces the various Microcontainer modules. The figure below gives an overview of the modules.

-

aop-mc-inthandles integration between the JBossAOP and Microcontainer projects -

The

containermodule contains: reflection, the integration point for manipulating class information at runtime, e.g. overriding annotations or obtaining an aop instance advisor. joinpoint, the joinpoint model including the join point factory. classadaptor, the integration and configuration spi. metadata, base metadata types and repository -

dependencymanagement is handled by the controller. The controller is the core component for keeping track of contexts to make sure the configuration and lifecycle are done in the correct order including dependencies and classloading considerations. -

deployersload components from various models, POJOs, JMX, spring, Java EE, etc. into the Microcontainer runtime. -

kernelkernel defines the core kernel spi including, boostrap, configuration, POJO deployments, dependency, events, bean metadata, and bean registry. -

The

managedmodule defines the base objects defining the management view of a component. -

The

metatypemetatype module defines the base types found in the management view of a component. -

osgi-intcontains the integration classes that adapt the OSGi model onto the Microcontainer. -

spring-intcontains the integration classes that adapt the spring model onto the Microcontainer.

To configure your microcontainer the you can use the JBOSS_HOME/server/<server_configuration>/conf/bootstrap-beans.xml and JBOSS_HOME/server/<server_configuration>/conf/bootstrap-repo-beans.xml files where <server_configuration> represents the all , default or minimal JBoss AS configurations. The configuration files have comments to guide you on the specific configurations available as illustrated by the example below.

<deployment xmlns="urn:jboss:bean-deployer:2.0"> <!-- All beans use the bootstrap classloader --> <classloader><inject bean="BootstrapClassLoader"/></classloader> <!-- TODO Should split this file up and use the new classloader --> <bean name="BootstrapClassLoader" class="org.jboss.system.NoAnnotationURLClassLoader"> <classloader><null/></classloader> <constructor factoryClass="org.jboss.system.NoAnnotationURLClassLoader" factoryMethod="createClassLoader"> <parameter> ..... ...... <bean name="ProfileServiceBootstrap" class="org.jboss.system.server.profileservice.ProfileServiceBootstrap"> <property name="kernel"><inject bean="jboss.kernel:service=Kernel"/></property> </bean> <!-- The legacy JMX kernel --> <bean name="JMXKernel" class="org.jboss.system.server.jmx.JMXKernel"> <property name="kernel"><inject bean="jboss.kernel:service=Kernel"/></property> <property name="serverImpl"><inject bean="JBossServer"/></property> <property name="oldClassLoader">false</property> </bean> ....(content truncated) ...... <!-- The ManagedDeploymentCreator implementation --> <bean name="ManagedDeploymentCreator" class="org.jboss.deployers.plugins.managed.DefaultManagedDeploymentCreator" /> <!-- The holder for deployers that determine structure --> <bean name="StructuralDeployers" class="org.jboss.deployers.vfs.plugins.structure.VFSStructuralDeployersImpl"> <property name="structureBuilder"> <!-- The consolidator of the structure information --> <bean name="StructureBuilder" class="org.jboss.deployers.vfs.plugins.structure.VFSStructureBuilder"/> </property> <!-- Accept any implementor of structure deployer --> <incallback method="addDeployer"/> <uncallback method="removeDeployer"/> </bean> ...(content truncated) ...

The main beans are:

-

ProfileService : this bean loads the deployments associated with the named server profile, "default", "all" or whatever name is passed in as the "-c" option to the server. Its an extension of the jboss-4.0.x and earlier notion of always looking to the filesystem

server/name/conf/jboss-service.xmlandserver/name/deployto load deployments. -

AspectManager? : the AOP aspects

-

MainDeployer : this bean is an update of the JMX based MainDeployer from earlier versions to a one based on the Microcontainer, JBoss5VirtualFileSystem, and Virtual Deployment Framework(VDF). Deployer aspects are registered with the MainDeployer as an ordered list via inject of the deployers property.

-

ServiceClassLoaderDeployer? : this bean manages the class loading aspect of deployment.

-

JARDeployer : this bean is a structural deployment aspect which handles the legacy nested deployment behavior of adding non-deployable jars to the current deployment classpath.

-

FileStructure? : this bean is a structural deployment aspect which recognizes well know deployment file types specified by suffix.

-

AspectDeployer? : handles aop descriptor deployments.

-

BeanDeployer? : this bean translates deployer-beans.xml into KernelDeployment? for the descriptor beans.

-

KernelDeploymentDeployer? : translates a KernelDeployment? into the constituent BeanMetaData instances for the kernel beans.

-

BeanMetaDataDeployer? : creates the kernel beans from the deployment BeanMetaData.

-

SARDeployer : this bean is a port of the legacy JMX SARDeployer to the VDF. It handles the legacy jboss-service.xml style of mbean deployment descriptors and maps this into a ServiceDeployment? pojo.

-

ServiceDeploymentDeployer? : translates ServiceDeployment? pojo into the constituent ServiceMetaData that represent the various mbeans.

-

ServiceDeployer? : creates the mbean services from deployment ServiceMetaData instances.

-

JMXKernel : this bean manages the instantiation of a JMX kernel and MBeanServer in the jboss domain. It is used by the SARDeployer. It will be used by other management deployment aspects in the future to expose kernel beans via JMX.

-

VFSDeployerScanner? : a scanner bean that loads the deployers directory contents into the basic profile service.

-

VFSDeploymentScanner? : a scanner bean that loads the deploy directory contents into the basic profile service.

-

HDScanner : a bean that queries the profile service for changes in deploy directory contents and redeploys updated content, undeploys removed content, and add new deployment content to the profile service.

More information on the JBoss Microcontainer project can be obtained from http://labs.jboss.com/jbossmc/.

- 5.1. Who needs web services?

- 5.2. Service Oriented Architecture (SOA)

- 5.3. What web services are not...

- 5.4. Jboss Web services Attachment support with XOP (XML-binary Optimized Packaging) and SwA

- 5.5. Using SwaRef with JAX-WS endpoints

- 5.6. MTOM/XOP

- 5.7. Enabling MTOM per endpoint

- 5.8. Document/Literal

- 5.9. Document/Literal (Bare)

- 5.10. Document/Literal (Wrapped)

- 5.11. RPC/Literal

- 5.12. RPC/Encoded

- 5.13. Web Service Endpoints

- 5.14. Plain old Java Object (POJO)

- 5.15. The endpoint as a web application

- 5.16. Packaging the endpoint

- 5.17. Accessing the generated WSDL

- 5.18. EJB3 Stateless Session Bean (SLSB)

- 5.19. Endpoint Provider

- 5.20. WebServiceContext

- 5.21. Web Service Clients

- 5.22. Common API

- 5.23. DataBinding

- 5.24. Attachments

- 5.25. Tools

- 5.26. Web Service Extensions

- 5.27. JBossWS Extensions

- 5.28. Web Services Appendix

- 5.29. References

Web services are a key contributing factor in the ways Web commerce is conducted today. Web services enable application/programs to communicate by sending small and large chunks of data to each other.

A web service is essentially a software application that supports interaction of applications over a computer network or the world wide web. Web services usually interact via XML documents that map to an object, computer program, business process or database. To communicate, an application sends a message in XML document format to a web service which sends this message to the respective programs. Responses may be received based on requirements and the web service receives and sends them in XML document format to the required program or applications. Web services can be used in many ways examples include supply chain information management and business integration among a multitude of other applications.

JBossWS is a web service framework developed as part of the JBoss Application Server. It implements the JAX-WS specification that defines a programming model and run-time architecture for implementing web services in Java, targeted at the Java Platform, Enterprise Edition 5 (Java EE 5).

JBossWS integrates with most current JBoss Application Server releases as well as earlier ones, that did implement the J2EE 1.4 specifications. Even though JAX-RPC, the web service specification for J2EE 1.4, is still supported JBossWS does put a clear focus on JAX-WS.

Enterprise systems communication may benefit from a wise adoption of WS technologies. Exposing well designed contracts allows developers to extract an abstract view of their service capabilities. Considering the standardized way contracts are written, this definitely helps communication with third-party systems and eventually support business-to-business integration. No more agreement required on vendor specific implementation details, home-brew communication protocol or custom per-customer settings. Everything is clear and standardized in the contract the provider and consumer agree on. Of course this also reduces the dependencies between implementations allowing other consumers to easily use the provided service without major changes.

Enterprise system may benefit from web service technologies also for internal heterogenous subsystems communication. As a matter of fact their interoperability boosts service reuse and composition. No more need to rewrite whole functionalities only because they were developed by another enterprise department using another software language.

In case you think you already heard something like this... yes, those in previous paragraph are some of the principles Service Oriented Architecture is based on.

Transforming an enterprise business to Service Oriented Architecture includes obtaining standardized service contract, service reusability, service abstraction, service loose coupling, service composability and so on.

Of course SOA is an architectural model agnostic to technology platforms and every enterprise can pursue the strategic goals associated with service-oriented computing using different technologies. However in the current marketplace, Web Services are probably the technology platform that better suits SOA principles and are most used to get to this architecture.

Needless to say that web services are not the solution for every software system communication.

Nowadays they are meant to be used for loosely-coupled coarse-grained communication, for message (document) exchange. Moreover during the last years a lot of specifications (WS-*) were discussed and finally approved to standardize ws-related advanced aspects including reliable messaging, message-level security, cross-service transactions, etc. Finally web service specifications also include notion of registries to collect service contract references, to easily discover service implementations, etc.

This all means that the web services technology platform suits complex enterprise communication and is not simply the latest way of doing remote procedure calls.

JBoss-WS4EE relied on a deprecated attachments technology called SwA (SOAP with Attachments). SwA required soap/encoding which is disallowed by the WS-I Basic Profile. JBossWS provides support for WS-I AP 1.0, and MTOM instead. There will be no API change for users, however, since this is an updated protocol you will not be able to transfer attachments between older versions of JBoss AS and JBoss AS 4.0.4 or above.

WS-I Attachment Profile 1.0 defines mechanism to reference MIME attachment parts using swaRef. In this mechanism the content of XML element of type wsi:swaRef is sent as MIME attachment and the element inside SOAP Body holds the reference to this attachment in the CID URI scheme as defined by RFC 2111.

JAX-WS endpoints delegate all marshalling/unmarshalling to the JAXB API. The most simple way to enable SwaRef encoding for DataHandler types is to annotate a payload bean with the @XmlAttachmentRef annotation as shown below:

/**

* Payload bean that will use SwaRef encoding

*/

@XmlRootElement

public class DocumentPayload

{

private DataHandler data;

public DocumentPayload()

{

}

public DocumentPayload(DataHandler data)

{

this.data = data;

}

@XmlElement

@XmlAttachmentRef

public DataHandler getData()

{

return data;

}

public void setData(DataHandler data)

{

this.data = data;

}

}

With document wrapped endpoints you may even specify the @XmlAttachmentRef annotation on the service endpoint interface:

@WebService

public interface DocWrappedEndpoint

{

@WebMethod

DocumentPayload beanAnnotation(DocumentPayload dhw, String test);

@WebMethod

@XmlAttachmentRef

DataHandler parameterAnnotation(@XmlAttachmentRef DataHandler data, String test);

}

The message would then refer to the attachment part by CID:

<env:Envelope xmlns:env='http://schemas.xmlsoap.org/soap/envelope/'> <env:Header/> <env:Body> <ns2:parameterAnnotation xmlns:ns2='http://swaref.samples.jaxws.ws.test.jboss.org/'> <arg0>cid:0-1180017772935-32455963@ws.jboss.org</arg0> <arg1>Wrapped test</arg1> </ns2:parameterAnnotation> </env:Body> </env:Envelope>

This chapter describes Message Transmission Optimization Mechanism (MTOM) and XML-binary Optimized Packaging (XOP), a means of more efficiently serializing XML Infosets that have certain types of content. The related specifications are

-

SOAP Message Transmission Optimization Mechanism ((MTOM) http://www.w3.org/TR/soap12-mtom/)

-

XML-binary Optimized Packaging (XOP) (http://www.w3.org/TR/xop10/)

|

image/jpeg |

java.awt.Image |

|

text/xml |

javax.xml.transform.Source |

|

application/xml |

javax.xml.transform.Source |

|

application/octet-stream |

javax.activation.DataHandler |

Table 5.1. Supported MTOM parameter types

The above table shows a list of supported endpoint parameter types. The recommended approach is to use the javax.activation.DataHandler classes to represent binary data as service endpoint parameters.

Note

Microsoft endpoints tend to send any data as application/octet-stream. The only Java type that can easily cope with this ambiguity is javax.activation.DataHandler

On the server side MTOM processing is enabled through the @BindingType annotation. JBossWS does handle SOAP1.1 and SOAP1.2. Both come with or without MTOM flavours: MTOM enabled service implementations

package org.jboss.test.ws.jaxws.samples.xop.doclit;

import javax.ejb.Remote;

import javax.jws.WebService;

import javax.jws.soap.SOAPBinding;

import javax.xml.ws.BindingType;

@Remote

@WebService(targetNamespace = "http://org.jboss.ws/xop/doclit")

@SOAPBinding(style = SOAPBinding.Style.DOCUMENT, parameterStyle = SOAPBinding.ParameterStyle.BARE)

@BindingType(value="http://schemas.xmlsoap.org/wsdl/soap/http?mtom=true") (1)

public interface MTOMEndpoint {

[...]

}

MTOM enabled clients

Web service clients can use the same approach described above or rely on the Binding API to enable MTOM (Excerpt taken from the org.jboss.test.ws.jaxws.samples.xop.doclit.XOPTestCase):

[...] Service service = Service.create(wsdlURL, serviceName); port = service.getPort(MTOMEndpoint.class); // enable MTOM binding = (SOAPBinding)((BindingProvider)port).getBinding(); binding.setMTOMEnabled(true);

With document style web services two business partners agree on the exchange of complex business documents that are well defined in XML schema. For example, one party sends a document describing a purchase order, the other responds (immediately or later) with a document that describes the status of the purchase order. No need to agree on such low level details as operation names and their associated parameters. The payload of the SOAP message is an XML document that can be validated against XML schema. Document is defined by the style attribute on the SOAP binding.

<binding name='EndpointInterfaceBinding' type='tns:EndpointInterface'> <soap:binding style='document' transport='http://schemas.xmlsoap.org/soap/http'/> <operation name='concat'> <soap:operation soapAction=''/> <input> <soap:body use='literal'/> </input> <output> <soap:body use='literal'/> </output> </operation> </binding>

With document style web services the payload of every message is defined by a complex type in XML schema.

<complexType name='concatType'> <sequence> <element name='String_1' nillable='true' type='string'/> <element name='long_1' type='long'/> </sequence> </complexType> <element name='concat' type='tns:concatType'/> Therefore, message parts must refer to an element from the schema. <message name='EndpointInterface_concat'> <part name='parameters' element='tns:concat'/> </message> The following message definition is invalid. <message name='EndpointInterface_concat'> <part name='parameters' type='tns:concatType'/> </message>

Bare is an implementation detail from the Java domain. Neither in the abstract contract (i.e. wsdl+schema) nor at the SOAP message level is a bare endpoint recognizable. A bare endpoint or client uses a Java bean that represents the entire document payload.

@WebService

@SOAPBinding(parameterStyle = SOAPBinding.ParameterStyle.BARE)

public class DocBareServiceImpl

{

@WebMethod

public SubmitBareResponse submitPO(SubmitBareRequest poRequest)

{

...

}

}

The trick is that the Java beans representing the payload contain JAXB annotations that define how the payload is represented on the wire.

@XmlAccessorType(XmlAccessType.FIELD)

@XmlType(name = "SubmitBareRequest", namespace="http://soapbinding.samples.jaxws.ws.test.jboss.org/", propOrder = { "product" })

@XmlRootElement(namespace="http://soapbinding.samples.jaxws.ws.test.jboss.org/", name = "SubmitPO")

public class SubmitBareRequest

{

@XmlElement(namespace="http://soapbinding.samples.jaxws.ws.test.jboss.org/", required = true)

private String product;

...

}

Wrapped is an implementation detail from the Java domain. Neither in the abstract contract (i.e. wsdl+schema) nor at the SOAP message level is a wrapped endpoint recognizable. A wrapped endpoint or client uses the individual document payload properties. Wrapped is the default and does not have to be declared explicitly.

@WebService

public class DocWrappedServiceImpl

{

@WebMethod

@RequestWrapper (className="org.somepackage.SubmitPO")

@ResponseWrapper (className="org.somepackage.SubmitPOResponse")

public String submitPO(String product, int quantity)

{

...

}

}

Note, that with JBossWS the request/response wrapper annotations are not required, they will be generated on demand using sensible defaults.

With RPC there is a wrapper element that names the endpoint operation. Child elements of the RPC parent are the individual parameters. The SOAP body is constructed based on some simple rules:

-

The port type operation name defines the endpoint method name

-

Message parts are endpoint method parameters

RPC is defined by the style attribute on the SOAP binding.

<binding name='EndpointInterfaceBinding' type='tns:EndpointInterface'> <soap:binding style='rpc' transport='http://schemas.xmlsoap.org/soap/http'/> <operation name='echo'> <soap:operation soapAction=''/> <input> <soap:body namespace='http://org.jboss.ws/samples/jsr181pojo' use='literal'/> </input> <output> <soap:body namespace='http://org.jboss.ws/samples/jsr181pojo' use='literal'/> </output> </operation> </binding>

With rpc style web services the portType names the operation (i.e. the java method on the endpoint)

<portType name='EndpointInterface'> <operation name='echo' parameterOrder='String_1'> <input message='tns:EndpointInterface_echo'/> <output message='tns:EndpointInterface_echoResponse'/> </operation> </portType>

Operation parameters are defined by individual message parts.

<message name='EndpointInterface_echo'> <part name='String_1' type='xsd:string'/> </message> <message name='EndpointInterface_echoResponse'> <part name='result' type='xsd:string'/> </message>

Note, there is no complex type in XML schema that could validate the entire SOAP message payload.

@WebService

@SOAPBinding(style = SOAPBinding.Style.RPC)

public class JSEBean01

{

@WebMethod

@WebResult(name="result")

public String echo(@WebParam(name="String_1") String input)

{

...

}

}

The element names of RPC parameters/return values may be defined using the JAX-WS Annotations#javax.jws.WebParam and JAX-WS Annotations#javax.jws.WebResult respectively.

SOAP encodeding style is defined by the infamous chapter 5 of the SOAP-1.1 specification. It has inherent interoperability issues that cannot be fixed. The Basic Profile-1.0 prohibits this encoding style in 4.1.7 SOAP encodingStyle Attribute. JBossWS has basic support for rpc/encoded that is provided as is for simple interop scenarios with SOAP stacks that do not support literal encoding. Specifically, JBossWS does not support:-

-

element references

-

soap arrays as bean properties

JAX-WS simplifies the development model for a web service endpoint a great deal. In short, an endpoint implementation bean is annotated with JAX-WS annotations and deployed to the server. The server automatically generates and publishes the abstract contract (i.e. wsdl+schema) for client consumption. All marshalling/unmarshalling is delegated to JAXB [2].

Let's take a look at simple POJO endpoint implementation. All endpoint associated metadata is provided via JSR-181 annotations

@WebService

@SOAPBinding(style = SOAPBinding.Style.RPC)

public class JSEBean01

{

@WebMethod

public String echo(String input)

{

...

}

}

A JAX-WS java service endpoint (JSE) is deployed as a web application.

<web-app ...> <servlet> <servlet-name>TestService</servlet-name> <servlet-class>org.jboss.test.ws.jaxws.samples.jsr181pojo.JSEBean01</servlet-class> </servlet> <servlet-mapping> <servlet-name>TestService</servlet-name> <url-pattern>/*</url-pattern> </servlet-mapping> </web-app>

A JSR-181 java service endpoint (JSE) is packaged as a web application in a *.war file.

<war warfile="${build.dir}/libs/jbossws-samples-jsr181pojo.war" webxml="${build.resources.dir}/samples/jsr181pojo/WEB-INF/web.xml">

<classes dir="${build.dir}/classes">

<include name="org/jboss/test/ws/samples/jsr181pojo/JSEBean01.class"/>

</classes>

</war>

Note that only the endpoint implementation bean and web.xml are required.

A successfully deployed service endpoint will show up in the service endpoint manager. This is also where you find the links to the generated wsdl.

http://yourhost:8080/jbossws/services

a Note, it is also possible to generate the abstract contract off line using jbossw tools. For details of that please see #Top Down (Java to WSDL)

The JAX-WS programming model support the same set of annotations on EJB3 stateless session beans as on # Plain old Java Object (POJO) endpoints. EJB-2.1 endpoints are supported using the JAX-RPC progamming model.

@Stateless

@Remote(EJB3RemoteInterface.class)

@RemoteBinding(jndiBinding = "/ejb3/EJB3EndpointInterface")

@WebService

@SOAPBinding(style = SOAPBinding.Style.RPC)

public class EJB3Bean01 implements EJB3RemoteInterface

{

@WebMethod

public String echo(String input)

{

...

}

}

Above you see an EJB-3.0 stateless session bean that exposes one method both on the remote interface and on and as an endpoint operation.

Packaging the endpoint

A JSR-181 EJB service endpoint is packaged as an ordinary ejb deployment.

<jar jarfile="${build.dir}/libs/jbossws-samples-jsr181ejb.jar">

<fileset dir="${build.dir}/classes">

<include name="org/jboss/test/ws/samples/jsr181ejb/EJB3Bean01.class"/>

<include name="org/jboss/test/ws/samples/jsr181ejb/EJB3RemoteInterface.class"/>

</fileset>

</jar>

Accessing the generated WSDL

A successfully deployed service endpoint will show up in the service endpoint manager. This is also where you find the links to the generated wsdl.

http://yourhost:8080/jbossws/services

Note, it is also possible to generate the abstract contract off line using jbossw tools. For details of that please see #Top Down (Java to WSDL)

JAX-WS services typically implement a native Java service endpoint interface (SEI), perhaps mapped from a WSDL port type, either directly or via the use of annotations.

Java SEIs provide a high level Java-centric abstraction that hides the details of converting between Java objects and their XML representations for use in XML-based messages. However, in some cases it is desirable for services to be able to operate at the XML message level. The Provider interface offers an alternative to SEIs and may be implemented by services wishing to work at the XML message level.

A Provider based service instance’s invoke method is called for each message received for the service.

@WebServiceProvider

@ServiceMode(value = Service.Mode.PAYLOAD)

public class ProviderBeanPayload implements Provider<Source>

{

public Source invoke(Source req)

{

// Access the entire request PAYLOAD and return the response PAYLOAD

}

}

Note, Service.Mode.PAYLOAD is the default and does not have to be declared explicitly. You can also use Service.Mode.MESSAGE to access the entire SOAP message (i.e. with MESSAGE the Provider can also see SOAP Headers)

The WebServiceContext is treated as an injectable resource that can be set at the time an endpoint is initialized. The WebServiceContext object will then use thread-local information to return the correct information regardless of how many threads are concurrently being used to serve requests addressed to the same endpoint object.

@WebService

public class EndpointJSE

{

@Resource

WebServiceContext wsCtx;

@WebMethod

public String testGetMessageContext()

{

SOAPMessageContext jaxwsContext = (SOAPMessageContext)wsCtx.getMessageContext();

return jaxwsContext != null ? "pass" : "fail";

}

..

@WebMethod

public String testGetUserPrincipal()

{

Principal principal = wsCtx.getUserPrincipal();

return principal.getName();

}

@WebMethod

public boolean testIsUserInRole(String role)

{

return wsCtx.isUserInRole(role);

}

}

Service is an abstraction that represents a WSDL service. A WSDL service is a collection of related ports, each of which consists of a port type bound to a particular protocol and available at a particular endpoint address.

For most clients, you will start with a set of stubs generated from the WSDL. One of these will be the service, and you will create objects of that class in order to work with the service (see "static case" below).

Static case

Most clients will start with a WSDL file, and generate some stubs using jbossws tools like wsconsume . This usually gives a mass of files, one of which is the top of the tree. This is the service implementation class.

The generated implementation class can be recognised as it will have two public constructors, one with no arguments and one with two arguments, representing the wsdl location (a java.net.URL) and the service name (a javax.xml.namespace.QName) respectively.

Usually you will use the no-argument constructor. In this case the WSDL location and service name are those found in the WSDL. These are set implicitly from the WebServiceClient annotation that decorates the generated class.

The following code snippet shows the generated constructors from the generated class:

// Generated Service Class

@WebServiceClient(name="StockQuoteService", targetNamespace="http://example.com/stocks", wsdlLocation="http://example.com/stocks.wsdl")

public class StockQuoteService extends javax.xml.ws.Service

{

public StockQuoteService()

{

super(new URL("http://example.com/stocks.wsdl"), new QName("http://example.com/stocks", "StockQuoteService"));

}

public StockQuoteService(String wsdlLocation, QName serviceName)

{

super(wsdlLocation, serviceName);

}

...

}

Section #Dynamic Proxy explains how to obtain a port from the service and how to invoke an operation on the port. If you need to work with the XML payload directly or with the XML representation of the entire SOAP message, have a look at #Dispatch.

Dynamic case

In the dynamic case, when nothing is generated, a web service client uses Service.create to create Service instances, the following code illustrates this process.

URL wsdlLocation = new URL("http://example.org/my.wsdl");

QName serviceName = new QName("http://example.org/sample", "MyService");

Service service = Service.create(wsdlLocation, serviceName);

This is the nastiest way to work with JBossWs. Older versions have extensive details on DII as it was then known.

JAX-WS provides a flexible plug-in framework for message processing modules, known as handlers, that may be used to extend the capabilities of a JAX-WS runtime system. #Handler Framework describes the handler framework in detail. A Service instance provides access to a HandlerResolver via a pair of getHandlerResolver/setHandlerResolver methods that may be used to configure a set of handlers on a per-service, per-port or per-protocol binding basis.

When a Service instance is used to create a proxy or a Dispatch instance then the handler resolver currently registered with the service is used to create the required handler chain. Subsequent changes to the handler resolver configured for a Service instance do not affect the handlers on previously created proxies, or Dispatch instances.

Service instances can be configured with a java.util.concurrent.Executor. The executor will then be used to invoke any asynchronous callbacks requested by the application. The setExecutor and getExecutor methods of Service can be used to modify and retrieve the executor configured for a service.

You can create an instance of a client proxy using one of getPort methods on the #Service.

/**

* The getPort method returns a proxy. A service client

* uses this proxy to invoke operations on the target

* service endpoint. The <code>serviceEndpointInterface</code>

* specifies the service endpoint interface that is supported by

* the created dynamic proxy instance.

**/

public <T> T getPort(QName portName, Class<T> serviceEndpointInterface)

{

...

}

/**

* The getPort method returns a proxy. The parameter

* <code>serviceEndpointInterface</code> specifies the service

* endpoint interface that is supported by the returned proxy.

* In the implementation of this method, the JAX-WS

* runtime system takes the responsibility of selecting a protocol

* binding (and a port) and configuring the proxy accordingly.

* The returned proxy should not be reconfigured by the client.

*

**/

public <T> T getPort(Class<T> serviceEndpointInterface)

{

...

}

The service endpoint interface (SEI) is usually generated using tools. For details see # Top Down (WSDL to Java)

A generated static #Service usually also offers typed methods to get ports. These methods also return dynamic proxies that implement the SEI.

@WebServiceClient(name = "TestEndpointService", targetNamespace = "http://org.jboss.ws/wsref",

wsdlLocation = "http://localhost.localdomain:8080/jaxws-samples-webserviceref?wsdl")

public class TestEndpointService extends Service

{

...

public TestEndpointService(URL wsdlLocation, QName serviceName) {

super(wsdlLocation, serviceName);

}

@WebEndpoint(name = "TestEndpointPort")

public TestEndpoint getTestEndpointPort()

{

return (TestEndpoint)super.getPort(TESTENDPOINTPORT, TestEndpoint.class);

}

}

The WebServiceRef annotation is used to declare a reference to a Web service. It follows the resource pattern exemplified by the javax.annotation.Resource annotation in JSR-250 [5]

There are two uses to the WebServiceRef annotation:

-

To define a reference whose type is a generated service class. In this case, the type and value element will both refer to the generated service class type. Moreover, if the reference type can be inferred by the field/method declaration the annotation is applied to, the type and value elements MAY have the default value (Object.class, that is). If the type cannot be inferred, then at least the type element MUST be present with a non-default value.

-

To define a reference whose type is a SEI. In this case, the type element MAY be present with its default value if the type of the reference can be inferred from the annotated field/method declaration, but the value element MUST always be present and refer to a generated service class type (a subtype of javax.xml.ws.Service). The wsdlLocation element, if present, overrides theWSDL location information specified in the WebService annotation of the referenced generated service class.

public class EJB3Client implements EJB3Remote

{

@WebServiceRef

public TestEndpointService service4;

@WebServiceRef

public TestEndpoint port3;

WebServiceRef Customization

In jboss-5.0.x we offer a number of overrides and extensions to the WebServiceRef annotation. These include

-

define the port that should be used to resolve a container-managed port

-

define default Stub property settings for Stub objects

-

define the URL of a final WSDL document to be used

Example:

<service-ref>

<service-ref-name>OrganizationService</service-ref-name>

<wsdl-override>file:/wsdlRepository/organization-service.wsdl</wsdl-override>

</service-ref>

..

<service-ref>

<service-ref-name>OrganizationService</service-ref-name>

<config-name>Secure Client Config</config-name>

<config-file>META-INF/jbossws-client-config.xml</config-file>

<handler-chain>META-INF/jbossws-client-handlers.xml</handler-chain>

</service-ref>

<service-ref>

<service-ref-name>SecureService</service-ref-name>

<service-class-name>org.jboss.tests.ws.jaxws.webserviceref.SecureEndpointService</service-class-name>

<service-qname>{http://org.jboss.ws/wsref}SecureEndpointService</service-qname>

<port-info>

<service-endpoint-interface>org.jboss.tests.ws.jaxws.webserviceref.SecureEndpoint</service-endpoint-interface>

<port-qname>{http://org.jboss.ws/wsref}SecureEndpointPort</port-qname>

<stub-property>

<name>javax.xml.ws.security.auth.username</name>

<value>kermit</value>

</stub-property>

<stub-property>

<name>javax.xml.ws.security.auth.password</name>

<value>thefrog</value>

</stub-property>

</port-info>

</service-ref>

For details please see service-ref_5_0.dtd in the jboss docs directory.

XMLWeb Services use XML messages for communication between services and service clients. The higher level JAX-WS APIs are designed to hide the details of converting between Java method invocations and the corresponding XML messages, but in some cases operating at the XML message level is desirable. The Dispatch interface provides support for this mode of interaction.

Dispatch supports two usage modes, identified by the constants javax.xml.ws.Service.Mode.MESSAGE and javax.xml.ws.Service.Mode.PAYLOAD respectively:

Message In this mode, client applications work directly with protocol-specific message structures. E.g., when used with a SOAP protocol binding, a client application would work directly with a SOAP message.

Message Payload In this mode, client applications work with the payload of messages rather than the messages themselves. E.g., when used with a SOAP protocol binding, a client application would work with the contents of the SOAP Body rather than the SOAP message as a whole.

Dispatch is a low level API that requires clients to construct messages or message payloads as XML and requires an intimate knowledge of the desired message or payload structure. Dispatch is a generic class that supports input and output of messages or message payloads of any type.

Service service = Service.create(wsdlURL, serviceName); Dispatch dispatch = service.createDispatch(portName, StreamSource.class, Mode.PAYLOAD); String payload = "<ns1:ping xmlns:ns1='http://oneway.samples.jaxws.ws.test.jboss.org/'/>"; dispatch.invokeOneWay(new StreamSource(new StringReader(payload))); payload = "<ns1:feedback xmlns:ns1='http://oneway.samples.jaxws.ws.test.jboss.org/'/>"; Source retObj = (Source)dispatch.invoke(new StreamSource(new StringReader(payload)));

The BindingProvider interface represents a component that provides a protocol binding for use by clients, it is implemented by proxies and is extended by the Dispatch interface.

BindingProvider instances may provide asynchronous operation capabilities. When used, asynchronous operation invocations are decoupled from the BindingProvider instance at invocation time such that the response context is not updated when the operation completes. Instead a separate response context is made available using the Response interface.

public void testInvokeAsync() throws Exception

{

URL wsdlURL = new URL("http://" + getServerHost() + ":8080/jaxws-samples-asynchronous?wsdl");

QName serviceName = new QName(targetNS, "TestEndpointService");

Service service = Service.create(wsdlURL, serviceName);

TestEndpoint port = service.getPort(TestEndpoint.class);

Response response = port.echoAsync("Async");

// access future

String retStr = (String) response.get();

assertEquals("Async", retStr);

}

@Oneway indicates that the given web method has only an input message and no output. Typically, a oneway method returns the thread of control to the calling application prior to executing the actual business method.

@WebService (name="PingEndpoint")

@SOAPBinding(style = SOAPBinding.Style.RPC)

public class PingEndpointImpl

{

private static String feedback;

..

@WebMethod

@Oneway

public void ping()

{

log.info("ping");

feedback = "ok";

}

..

@WebMethod

public String feedback()

{

log.info("feedback");

return feedback;

}

}

This sections describes concepts that apply equally to #Web Service Endpoints and #Web Service Clients

The handler framework is implemented by a JAX-WS protocol binding in both client and server side runtimes. Proxies, and Dispatch instances, known collectively as binding providers, each use protocol bindings to bind their abstract functionality to specific protocols.

Client and server-side handlers are organized into an ordered list known as a handler chain. The handlers within a handler chain are invoked each time a message is sent or received. Inbound messages are processed by handlers prior to binding provider processing. Outbound messages are processed by handlers after any binding provider processing.

Handlers are invoked with a message context that provides methods to access and modify inbound and outbound messages and to manage a set of properties. Message context properties may be used to facilitate communication between individual handlers and between handlers and client and service implementations. Different types of handlers are invoked with different types of message context.

Handlers that only operate on message context properties and message payloads. Logical handlers are protocol agnostic and are unable to affect protocol specific parts of a message. Logical handlers are handlers that implement javax.xml.ws.handler.LogicalHandler.

Handlers that operate on message context properties and protocol specific messages. Protocol handlers are specific to a particular protocol and may access and change protocol specific aspects of a message. Protocol handlers are handlers that implement any interface derived from javax.xml.ws.handler.Handler except javax.xml.ws.handler.LogicalHandler.

On the service endpoint, handlers are defined using the @HandlerChain annotation.

@WebService

@HandlerChain(file = "jaxws-server-source-handlers.xml")

public class SOAPEndpointSourceImpl

{

...

}

The location of the handler chain file supports 2 formats

1. An absolute java.net.URL in externalForm. (ex: http://myhandlers.foo.com/handlerfile1.xml)

2. A relative path from the source file or class file. (ex: bar/handlerfile1.xml)

On the client side, handler can be configured using the @HandlerChain annotation on the SEI or dynamically using the API.

Service service = Service.create(wsdlURL, serviceName); Endpoint port = (Endpoint)service.getPort(Endpoint.class); BindingProvider bindingProvider = (BindingProvider)port; List<Handler> handlerChain = new ArrayList<Handler>(); handlerChain.add(new LogHandler()); handlerChain.add(new AuthorizationHandler()); handlerChain.add(new RoutingHandler()); bindingProvider.getBinding().setHandlerChain(handlerChain); // important!

MessageContext is the super interface for all JAX-WS message contexts. It extends Map<String,Object> with additional methods and constants to manage a set of properties that enable handlers in a handler chain to share processing related state. For example, a handler may use the put method to insert a property in the message context that one or more other handlers in the handler chain may subsequently obtain via the get method.

Properties are scoped as either APPLICATION or HANDLER. All properties are available to all handlers for an instance of an MEP on a particular endpoint. E.g., if a logical handler puts a property in the message context, that property will also be available to any protocol handlers in the chain during the execution of an MEP instance. APPLICATION scoped properties are also made available to client applications (see section 4.2.1) and service endpoint implementations. The defaultscope for a property is HANDLER.

There is currently no portable way of doing this in 4.0.5. @WebServiceContext injection will be available with 4.2. In the meantime you can access the message context like this:

CommonMessageContext msgContext = MessageContextAssociation.peekMessageContext(); msgContext.setProperty(<Name>, <Value>);

#Logical Handlers are passed a message context of type LogicalMessageContext when invoked. LogicalMessageContext extends MessageContext with methods to obtain and modify the message payload, it does not provide access to the protocol specific aspects of amessage. A protocol binding defines what component of a message are available via a logical message context. The SOAP binding defines that a logical handler deployed in a SOAP binding can access the contents of the SOAP body but not the SOAP headers whereas the XML/HTTP binding defines that a logical handler can access the entire XML payload of a message.

An implementation may thow a SOAPFaultException

public void throwSoapFaultException()

{

SOAPFactory factory = SOAPFactory.newInstance();

SOAPFault fault = factory.createFault("this is a fault string!", new QName("http://foo", "FooCode"));

fault.setFaultActor("mr.actor");

fault.addDetail().addChildElement("test");

throw new SOAPFaultException(fault);

}

or an application specific user exception

public void throwApplicationException() throws UserException

{

throw new UserException("validation", 123, "Some validation error");

}

Note

In case of the latter JBossWS generates the required fault wrapper beans at runtime if they are not part of the deployment

Since 2.0.2