- 1.1. Introduction in eXoJCR

- 1.2. Why use JCR?

- 1.3. eXo JCR Implementation

- 1.4. Advantages of eXo JCR

- 1.5. Compatibility Levels

- 1.6. Using JCR

- 1.7. JCR Service Extensions

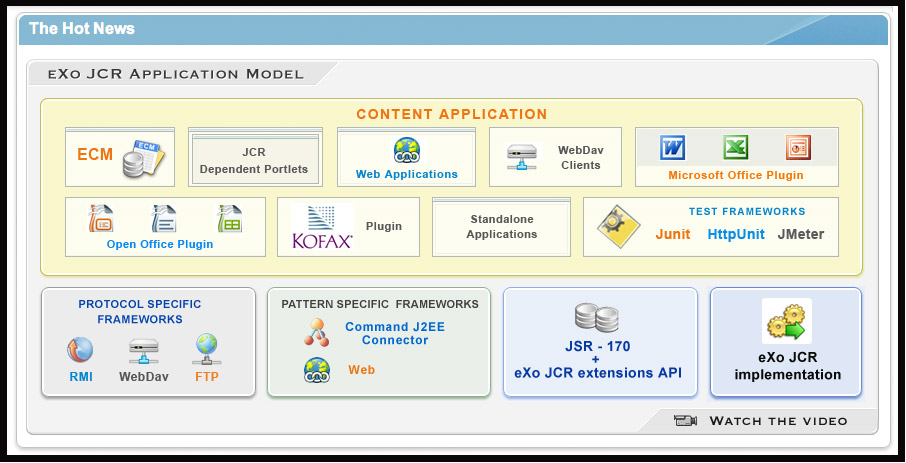

- 1.8. eXo JCR Application Model

- 1.9. NodeType Registration

- 1.10. Registry Service

- 1.11. Namespace altering

- 1.12. Node Types and Namespaces

- 1.13. eXo JCR configuration

- 1.13.1. Related documents

- 1.13.2. Portal and Standalone configuration

- 1.13.3. JCR Configuration

- 1.13.4. Repository service configuration (JCR repositories configuration)

- 1.13.5. Repository configuration

- 1.13.6. Workspace configuration

- 1.13.7. Value Storage plugin configuration (for data container):

- 1.13.8. Initializer configuration (optional)

- 1.13.9. Cache configuration

- 1.13.10. Query Handler configuration

- 1.13.11. Lock Manager configuration

- 1.13.12. Help application to prohibit the use of closed sessions

- 1.13.13. Help application to allow the use of closed datasources

- 1.13.14. Getting the effective configuration at Runtime of all the repositories

- 1.13.15. Configuration of workspaces using system properties

- 1.14. Multi-language support in eXo JCR RDB backend

- 1.15. How to host several JCR instances on the same database instance?

- 1.16. Search Configuration

- 1.17. JCR Configuration persister

- 1.18. JDBC Data Container Config

- 1.19. External Value Storages

- 1.20. Workspace Data Container

- 1.21. REST Services on Groovy

- 1.22. Configuring JBoss AS with eXo JCR in cluster

- 1.23. JBoss Cache configuration

- 1.24. LockManager configuration

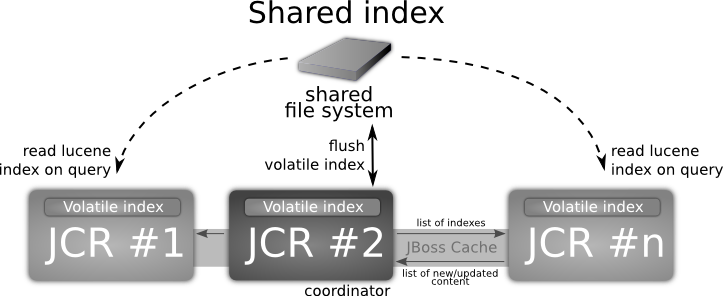

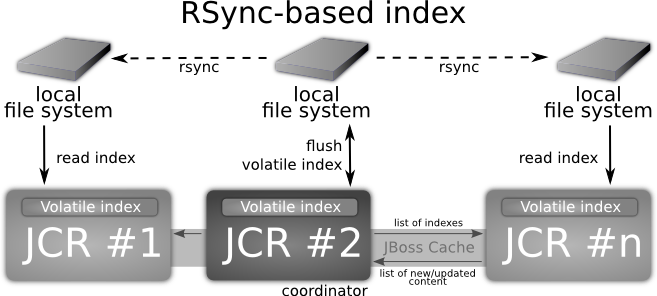

- 1.25. QueryHandler configuration

- 1.26. JBossTransactionsService

- 1.27. TransactionManagerLookup

- 1.28. Infinispan integration

- 1.29. RepositoryCreationService

- 1.30. JCR Query Usecases

- 1.30.1. Query Lifecycle

- 1.30.2. Query result settings

- 1.30.3. Type Constraints

- 1.30.4. Property Constraints

- 1.30.5. Path Constraint

- 1.30.6. Ordering specifying

- 1.30.7. Section 1.32, “Fulltext Search And Affecting Settings”

- 1.30.8. Indexing rules and additional features

- 1.30.9. Query Examples

- 1.30.10. Tips and tricks

- 1.31. Searching Repository Content

- 1.32. Fulltext Search And Affecting Settings

- 1.33. JCR API Extensions

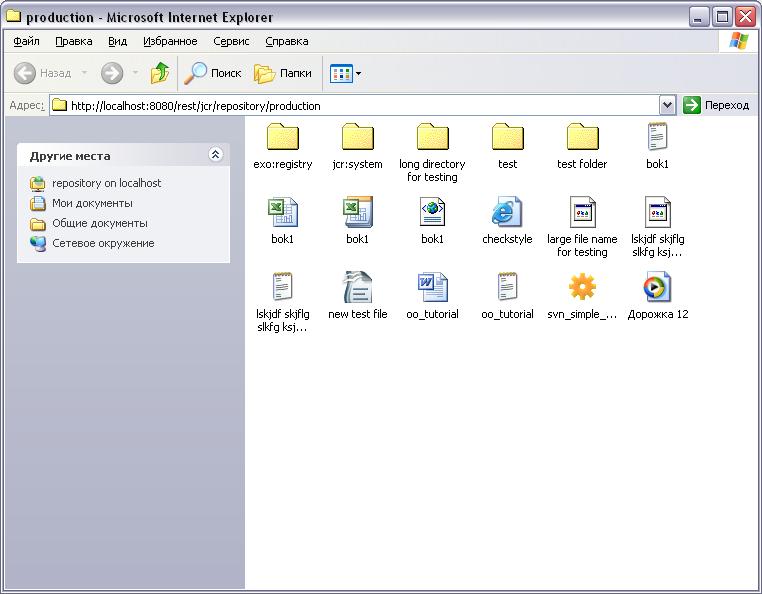

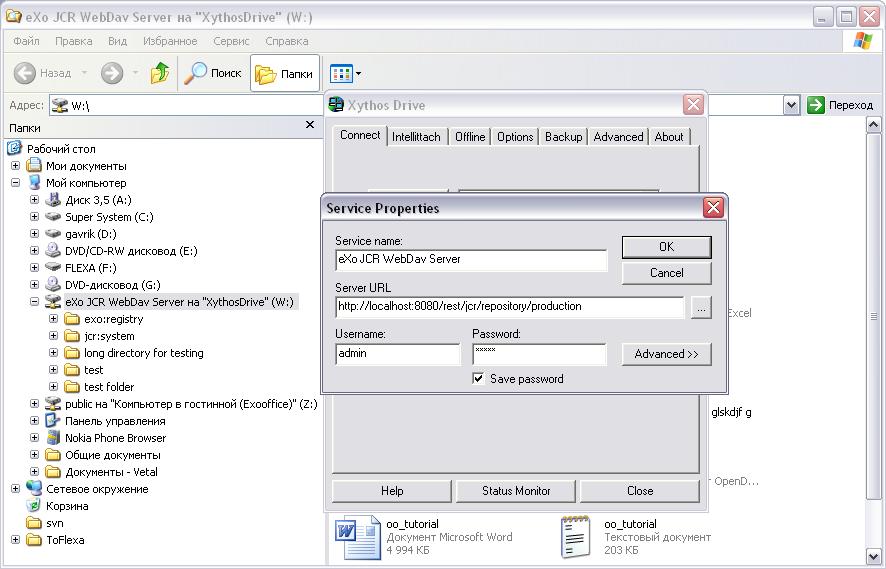

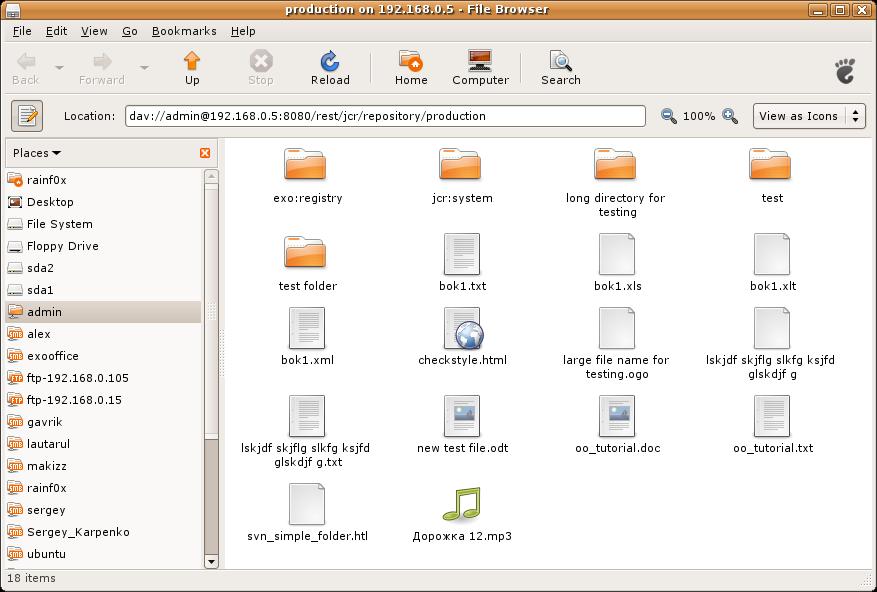

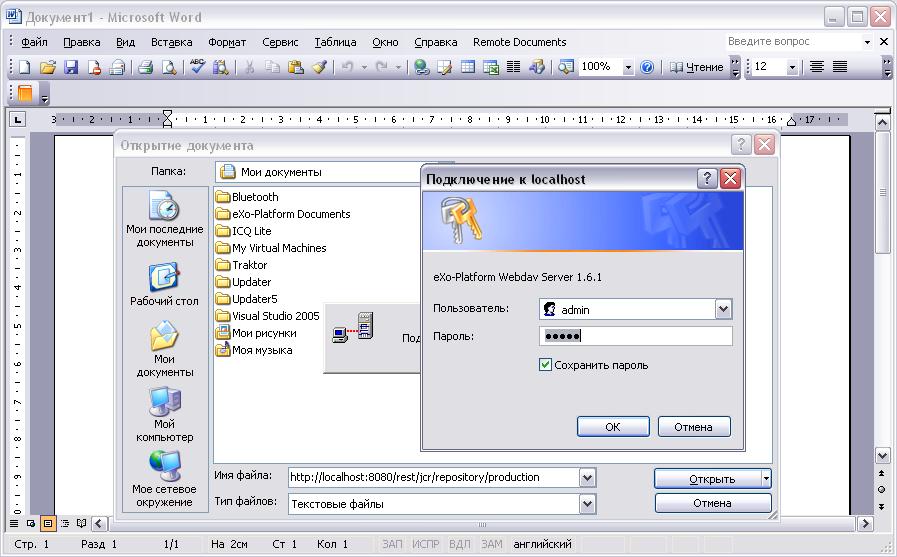

- 1.34. WebDAV

- 1.35. FTP

- 1.36. eXo JCR Backup Service

- 1.37. HTTPBackupAgent and backup client

- 1.38. How to backup the data of your JCR using an external backup tool in 3 steps?

- 1.39. eXo JCR statistics

- 1.40. Checking and repairing repository integrity and consistency

- 1.41. JTA

- 1.42. The JCA Resource Adapter

- 1.43. Access Control

- 1.44. Access Control Extension

- 1.45. Link Producer Service

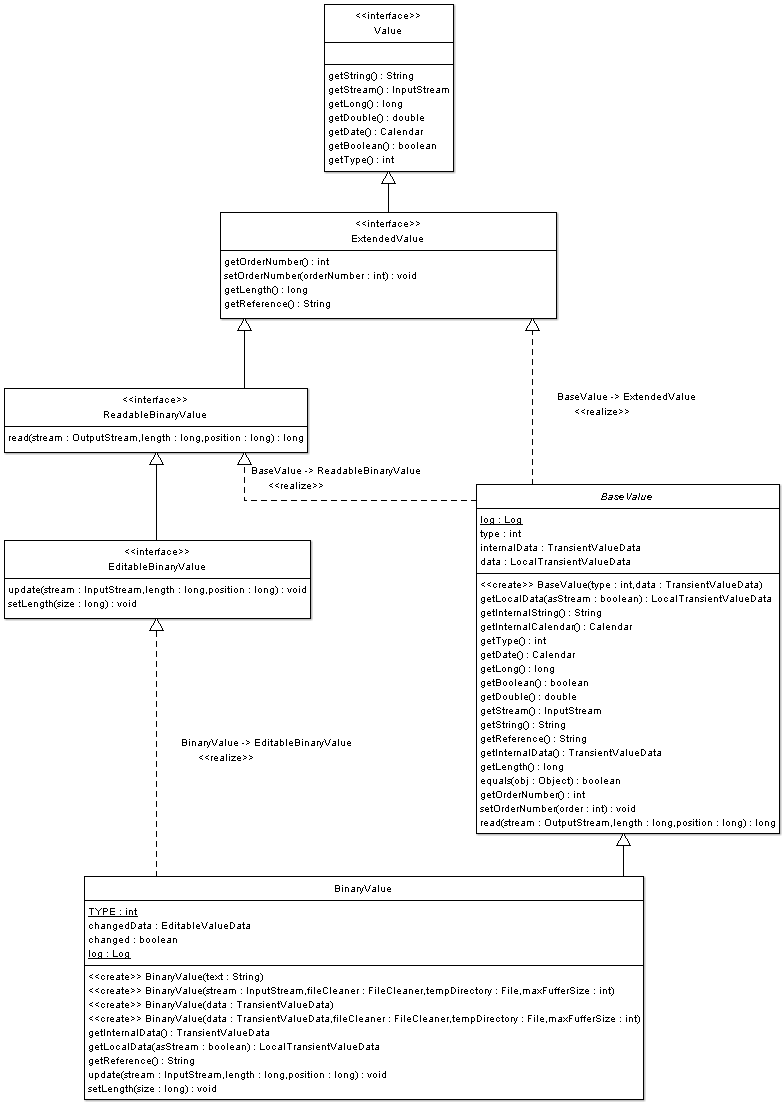

- 1.46. Binary Values Processing

- 1.47. JCR Resources:

- 1.48. JCR Workspace Data Container (architecture contract)

- 1.49. How to implement Workspace Data Container

- 1.50. DBCleanService

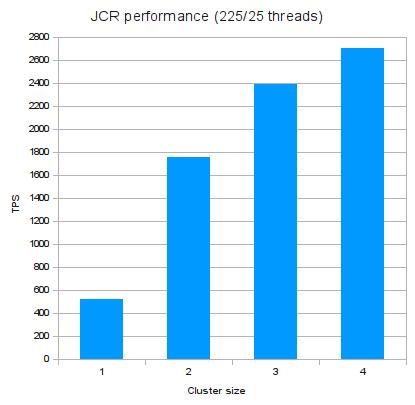

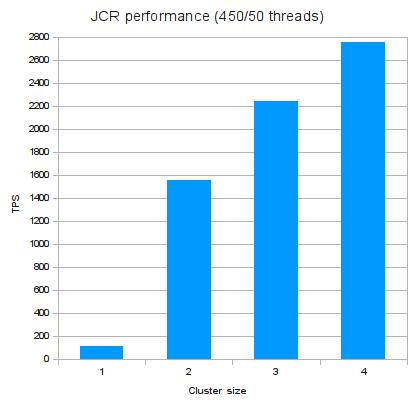

- 1.51. JCR Performance Tuning Guide

eXo provides JCR implementation called eXo JCR.

This part will show you how to configure and use eXo JCR in GateIn and standalone.

Java Content Repository API as well as other Java language related standards is created within the Java Community Process http://jcp.org/ as a result of collaboration of an expert group and the Java community. It is known as JSR-170 (Java Specification Request).

The main purpose of content repository is to maintain the data. The heart of CR is the data model:

The main data storage abstraction of JCR's data model is a workspace

Each repository should have one or more workspaces

The content is stored in a workspace as a hierarchy of items

Each workspace has its own hierarchy of items

Node is intended to support the data hierarchy. It is of type using namespaced names which allows the content to be structured in accordance with standardized constraints. A node may be versioned through an associated version graph (optional feature)

Property stored data are values of predefined types (String, Binary, Long, Boolean, Double, Date, Reference, Path).

It is important to note that the data model for the interface (the repository model) is rarely the same as the data models used by the repository's underlying storage subsystems. The repository knows how to make the client's changes persistent because that is part of the repository configuration, rather than part of the application programming task.

JCR (Java Content Repository) is a java interface used to access contents that are not only web contents, but also other hierarchically stored data. The content is stored in a repository. The repository can be a file system, a relational database or an XML document. The internal structure of JCR data looks similar to an XML document, that means a document tree with nodes and data, but with a small difference, in JCR the data are stored in "property items".

Or better to cite the specification of JCR: "A content repository is a high-level information management system that is a superset of traditional data repositories."

How do you know the data of your website are stored? The images are probably in a file system, the meta data are in some dedicated files - maybe in XML - the text documents and pdfs are stored in different folders with the meta data in an other place (a database?) and in a proprietary structure. How do you manage to update these data and how do you manage the access rights? If your boss asks you to manage different versions of each document or not? The larger your website is, the more you need a Content Management Systems (CMS) which tackles all these issues.

These CMS solutions are sold by different vendors and each vendor provides its own API for interfacing the proprietary content repository. The developers have to deal with this and need to learn the vendor-specific API. If in the future you wish to switch to a different vendor, everything will be different and you will have a new implementation, a new interface, etc.

JCR provides a unique java interface for interacting with both text and binary data, for dealing with any kind and amount of meta data your documents might have. JCR supplies methods for storing, updating, deleting and retrieving your data, independent of the fact if this data is stored in a RDBMS, in a file system or as an XML document - you just don't need to care about. The JCR interface is also defined as classes and methods for searching, versioning, access control, locking, and observation.

Furthermore, an export and import functionality is specified so that a switch to a different vendor is always possible.

eXo fully complies a JCR standard JSR 170; therefore with eXo JCR you can use a vendor-independent API. It means that you could switch any time to a different vendor. Using the standard lowers your lifecycle cost and reduces your long term risk.

Of course eXo does not only offer JCR, but also the complete solution for ECM (Enterprise Content Management) and for WCM (Web Content Management).

In order to further understand the theory of JCR and the API, please refer to some external documents about this standard:

Roy T. Fielding, JSR 170 Overview: Standardizing the Content Repository Interface (March 13, 2005)

Benjamin Mestrallet, Tuan Nguyen, Gennady Azarenkov, Francois Moron and Brice Revenant eXo Platform v2, Portal, JCR, ECM, Groupware and Business Intelligence. (January 2006)

Access Control Configuration, Export Import Implementation, External Value Storages, JDBC Data Container config, Locking, Multilanguage support, Node types and Namespaces, Repository and Workspace management, Repository container life cycle, Workspace, Persistence Storage Workspace, SimpleDB storage

eXo Repository Service is a standard eXo service and is a registered IoC component, i.e. can be deployed in some eXo Containers (see Service configuration for details). The relationships between components are shown in the picture below:

eXo Container: some subclasses of org.exoplatform.container.ExoContainer (usually org.exoplatform.container.StandaloneContainer or org.exoplatform.container.PortalContainer) that holds a reference to Repository Service.

Repository Service: contains information about repositories. eXo JCR is able to manage many Repositories.

Repository: Implementation of javax.jcr.Repository. It holds references to one or more Workspace(s).

Workspace: Container of a single rooted tree of Items. (Note that here it is not exactly the same as javax.jcr.Workspace as it is not a per Session object).

Usual JCR application use case includes two initial steps:

Obtaining Repository object by getting Repository Service from the current eXo Container (eXo "native" way) or via JNDI lookup if eXo repository is bound to the naming context using (see Service configuration for details).

Creating javax.jcr.Session object that calls Repository.login(..).

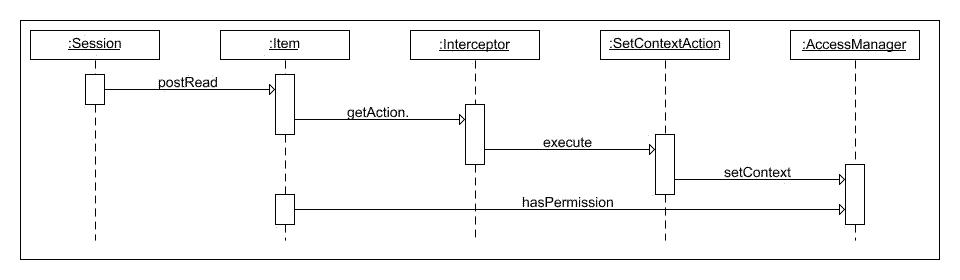

The following diagram explains which components of eXo JCR implementation are used in a data flow to perform operations specified in JCR API

The Workspace Data Model can be split into 4 levels by data isolation and value from the JCR model point of view.

eXo JCR core implements JCR API interfaces, such as Item, Node, Property. It contains JCR "logical" view on stored data.

Session Level: isolates transient data viewable inside one JCR Session and interacts with API level using eXo JCR internal API.

Session Data Manager: maintains transient session data. With data access/ modification/ validation logic, it contains Modified Items Storage to hold the data changed between subsequent save() calling and Session Items Cache.

Transaction Data Manager: maintains session data between save() and transaction commit/ rollback if the current session is part of a transaction.

Workspace Level: operates for particular workspace shared data. It contains per-Workspace objects

Workspace Storage Data Manager: maintains workspace data, including final validation, events firing, caching.

Workspace Data Container: implements physical data storage. It allows different types of backend (like RDB, FS files, etc) to be used as a storage for JCR data. With the main Data Container, other storages for persisted Property Values can be configured and used.

Indexer: maintains workspace data indexing for further queries.

Storage Level: Persistent storages for:

JCR Data

Indexes (Apache Lucene)

Values (e.g., for BLOBs) if different from the main Data Container

Data repository and application are isolated from each other so an application developer should not learn the details of particular data storage's interfaces, but can need to concentrate on business logic of a particular application built on the top of JCR.

Repositories can be simply exchanged between different applications without changing the applications themselves. This is the matter of the repository configuration.

Data storage types/ versions can be changed and also, different types of data storages can be combined in one repository data model (of course, the complexity and work of building interfaces between the repository and its data storage don't disappear but these changes are isolated in the repository and thus manageable from the point of view of the customer).

Using a standardized repository for content management reduces the risk of dependence on a particular software vendor and proprietary API.

Costs for maintaining and developing a content repository based custom application is significantly lower than developing and supporting your own interfaces and maintaining your own data repository applications (staff can be trained once, it is possible to take help from the community and the third party consulters).

Thanks to flexible layered JCR API (see below), it is possible to fit the legacy storage subsystem into new interfaces and decrease the costs and the risk of losing data.

An extension to the API exists as we can see in the following layer schema.

The Java Content Repository specification JSR-170 has been split into two compliance levels as well as a set of optional features.

Level 1 defines a read-only repository.

Level 2 defines methods for writing content and bidirectional interaction with the repository.

eXo JCR supports JSR-170 level 1 and level 2 and all optional features. The recent JSR-283 is not yet supported.

Level 1 includes read-only functionality for very simple repositories. It is useful to port an existing data repository and convert it to a more advanced form step by step. JCR uses a well-known Session abstraction to access the repository data (similar to the sessions we have in OS, web, etc).

The features of level 1:

Initiating a session calling login method with the name of desired workspace and client credentials. It involves some security mechanisms (JAAS) to authenticate the client and in case the client is authorized to use the data from a particular workspace, he can retrieve the session with a workspace tied to it.

Using the obtained session, the client can retrieve data (items) by traversing the tree, directly accessing a particular item (requesting path or UUID) or traversing the query result. So an application developer can choose the "best" form depending on the content structure and desired operation.

Reading property values. All content of a repository is ultimately accessed through properties and stored in property values of predefined types (Boolean, Binary Data, Double, Long, String) and special types Name, Reference, and Path. It is possible to read property value without knowing its real name as a primary item.

Export to XML. Repository supports two XML/JCR data model mappings: system and doc view. The system view provides complete XML serialization without loss of information and is somewhat difficult for a human to read. In contrast, the document view is well readable but does not completely reflect the state of repository, it is used for Xpath queries.

Query facility with Xpath syntax. Xpath, originally developed for XML, suits the JCR data model as well because the JCR data model is very close to XML's one. It is applied to JCR as it would be applied to the document view of the serialized repository content, returning a table of property names and content matching the query.

Discovery of available node types. Every node should have only one primary node type that defines names, types and other characteristics of child nodes and properties. It also can have one or more mixin data types that defines additional characteristics. Level 1 provides methods for discovering available in repository node types and node types of a concrete node.

Transient namespace remapping. Item name can have prefix, delimited by a single ':' (colon) character that indicates the namespace of this name. It is patterned after XML namespaces, prefix is mapped to URI to minimize names collisions. In Level 1, a prefix can be temporary overridden by another prefix in the scope of a session.

JCR level 2 includes reading/ writing content functionality, importing other sources and managing content definition and structuring using extensible node types.

In addition to the features of the Level 1, it also supports the following major features:

Adding, moving, copying and removing items inside workspace and moving, copying and cloning items between workspaces. The client can also compare the persisted state of an item with its unsaved states and either save the new state or discard it.

Modifying and writing value of properties. Property types are checked and can be converted to the defined format.

Importing XML document into the repository as a tree of nodes and properties. If the XML document is an export of JCR system view, the content of repository can be completely restored. If this is not the case, the document is interpreted as a document view and the import procedure builds a tree of JCR nodes and properties that matches the tree structure of the XML document.

Assigning node types to nodes. The primary node type is assigned when adding a node. This can be done automatically based on the parent node type definition and mixin node types.

Persistent namespaces changes. Adding, changing and removing namespaces stored in the namespace registry, excluding built-in namespaces required by JCR.

On the top of Level 1 or Level 2, a number of optional features are defined for a more advanced repository functionality. This includes functions such as Versioning, (JTA) Transactions, Query using SQL, Explicit Locking and Content Observation. eXo JCR supports all optional features.

A javax.jcr.Repository object can be obtained by:

Using the eXo Container "native" mechanism. All Repositories are kept with a single RepositoryService component. So it can be obtained from eXo Container, described as the following:

RepositoryService repositoryService = (RepositoryService) container.getComponentInstanceOfType(RepositoryService.class);

Repository repository = repositoryService.getRepository("repositoryName");

Using the eXo Container "native" mechanism with a thread local saved "current" repository (especially if you plan to use a single repository which covers more than 90% of use cases)

// set current repository at initial time

RepositoryService repositoryService = (RepositoryService) container.getComponentInstanceOfType(RepositoryService.class);

repositoryService.setCurrentRepositoryName("repositoryName");

....

// retrieve and use this repository

Repository repository = repositoryService.getCurrentRepository();

Using JNDI as specified in JSR-170. This way you have to configure the reference (see eXo JNDI Naming configuration )

Context ctx = new InitialContext();

Repository repository =(Repository) ctx.lookup("repositoryName");Remember that javax.jcr.Session is not a thread safe object. Never try to share it between threads.

Do not use System session from the user related code because a system session has unlimited rights. Call ManageableRepository.getSystemSession() from process related code only.

Call Session.logout() explicitly to release resources assigned to the session.

When designing your application, take care of the Session policy inside your application. Two strategies are possible: Stateless (Session per business request) and Stateful (Session per User) or some mix.

(one-shot logout for all opened sessions)

Use org.exoplatform.services.jcr.ext.common.SessionProvider which is responsible for caching/obtaining your JCR Sessions and closing all opened sessions at once.

public class SessionProvider implements SessionLifecycleListener {

/**

* Creates a SessionProvider for a certain identity

* @param cred

*/

public SessionProvider(Credentials cred)

/**

* Gets the session from internal cache or creates and caches a new one

*/

public Session getSession(String workspaceName, ManageableRepository repository)

throws LoginException, NoSuchWorkspaceException, RepositoryException

/**

* Calls a logout() method for all cached sessions

*/

public void close()

/**

* a Helper for creating a System session provider

* @return System session

*/

public static SessionProvider createSystemProvider()

/**

* a Helper for creating an Anonimous session provider

* @return System session

*/

public static SessionProvider createAnonimProvider()

/**

* Helper for creating session provider from AccessControlEntry.

*

* @return System session

*/

SessionProvider createProvider(List<AccessControlEntry> accessList)

/**

* Remove the session from the cache

*/

void onCloseSession(ExtendedSession session)

/**

* Gets the current repository used

*/

ManageableRepository getCurrentRepository()

/**

* Gets the current workspace used

*/

String getCurrentWorkspace()

/**

* Set the current repository to use

*/

void setCurrentRepository(ManageableRepository currentRepository)

/**

* Set the current workspace to use

*/

void setCurrentWorkspace(String currentWorkspace)

}The SessionProvider is per-request or per-user object, depending on your policy. Create it with your application before performing JCR operations, use it to obtain the Sessions and close at the end of an application session(request). See the following example:

// (1) obtain current javax.jcr.Credentials, for example get it from AuthenticationService

Credentials cred = ....

// (2) create SessionProvider for current user

SessionProvider sessionProvider = new SessionProvider(ConversationState.getCurrent());

// NOTE: for creating an Anonymous or System Session use the corresponding static SessionProvider.create...() method

// Get appropriate Repository as described in "Obtaining Repository object" section for example

ManageableRepository repository = (ManageableRepository) ctx.lookup("repositoryName");

// get an appropriate workspace's session

Session session = sessionProvider.getSession("workspaceName", repository);

.........

// your JCR code

.........

// Close the session provider

sessionProvider.close(); As shown above, creating the SessionProvider involves multiple steps and you may not want to repeat them each time you need to get a JCR session. In order to avoid all this plumbing code, we provide the SessionProviderService whose goal is to help you to get a SessionProvider object.

The org.exoplatform.services.jcr.ext.app.SessionProviderService interface is defined as follows:

public interface SessionProviderService {

void setSessionProvider(Object key, SessionProvider sessionProvider);

SessionProvider getSessionProvider(Object key);

void removeSessionProvider(Object key);

}Using this service is pretty straightforward, the main contract of an implemented component is getting a SessionProvider by key. eXo provides two implementations :

Table 1.1. SessionProvider implementations

| Implementation | Description | Typical Use |

|---|---|---|

| org.exoplatform.services.jcr.ext.app.MapStoredSessionProviderService | per-user style : keeps objects in a Map | per-user. The usual practice uses a user's name or Credentials as a key. |

| org.exoplatform.services.jcr.ext.app.ThreadLocalSessionProviderService | per-request style : keeps a single SessionProvider in a static ThreadLocal variable | Always use null for the key. |

For any implementation, your code should follow the following sequence :

Call SessionProviderService.setSessionProvider(Object key, SessionProvider sessionProvider) at the beginning of a business request for Stateless application or application's session for Statefull policy.

Call SessionProviderService.getSessionProvider(Object key) for obtaining a SessionProvider object

Call SessionProviderService.removeSessionProvider(Object key) at the end of a business request for Stateless application or application's session for Statefull policy.

eXo JCR supports observation (JSR-170 8.3), which enables applications to register interest in events that describe changes to a workspace, and then monitor and respond to those events. The standard observation feature allows dispatching events when persistent change to the workspace is made.

eXo JCR also offers a proprietary Extension Action which dispatches and fires an event upon each transient session level change, performed by a client. In other words, the event is triggered when a client's program invokes some updating methods in a session or a workspace (such as: Session.addNode(), Session.setProperty(), Workspace.move() etc.

By default when an action fails, the related exception is simply logged. In case you would like to change the default exception handling, you can implement the interface AdvancedAction. In case the JCR detects that your action is of type AdvancedAction, it will call the method onError instead of simply logging it. A default implementation of the onError method is available in the abstract class AbstractAdvancedAction. It reverts all pending changes of the current JCR session for any kind of event corresponding to a write operation. Then in case the provided exception is an instance of type AdvancedActionException, it will throw it otherwise it will log simply it. An AdvancedActionException will be thrown in case the changes could not be reverted.

Warning

AdvancedAction interface must be implemented with a lot of caution to avoid being a performance killer.

One important recommendation should be applied for an extension action implementation. Each action will add its own execution time to standard JCR methods (Session.addNode(), Session.setProperty(), Workspace.move() etc.) execution time. As a consequence, it's necessary to minimize Action.execute(Context) body execution time.

To make the rule, you can use the dedicated Thread in Action.execute(Context) body for a custom logic. But if your application logic requires the action to add items to a created/updated item and you save these changes immediately after the JCR API method call is returned, the suggestion with Thread is not applicable for you in this case.

Add a SessionActionCatalog service and an appropriate AddActionsPlugin (see the example below) configuration to your eXo Container configuration. As usual, the plugin can be configured as in-component-place, which is the case for a Standalone Container or externally, which is a usual case for Root/Portal Container configuration).

Each Action entry is exposed as org.exoplatform.services.jcr.impl.ext.action. ActionConfiguration of actions collection of org.exoplatform.services.jcr.impl.ext.action.AddActionsPlugin$ActionsConfig (see an example below). The mandatory field named actionClassName is the fully qualified name of org.exoplatform.services.command.action.Action implementation - the command will be launched in case the current event matches the criteria. All other fields are criteria. The criteria are *AND*ed together. In other words, for a particular item to be listened to, it must meet ALL the criteria:

* workspace: the comma delimited (ORed) list of workspaces

* eventTypes: a comma delimited (ORed) list of event names (see below) to be listened to. This is the only mandatory field, others are optional and if they are missing they are interpreted as ANY.

* path - a comma delimited (ORed) list of item absolute paths (or within its subtree if isDeep is true, which is the default value)

* nodeTypes - a comma delimited (ORed) list of the current NodeType. Since version 1.6.1 JCR supports the functionalities of nodeType and parentNodeType. This parameter has different semantics, depending on the type of the current item and the operation performed. If the current item is a property it means the parent node type. If the current item is a node, the semantic depends on the event type: ** add node event: the node type of the newly added node. ** add mixin event: the newly added mixing node type of the current node. ** remove mixin event the removed mixin type of the current node. ** other events: the already assigned NodeType(s) of the current node (can be both primary and mixin).

Note

The list of fields can be extended.

Note

No spaces between list elements.

Note

isDeep=false means node, node properties and child nodes.

The list of supported Event names: addNode, addProperty, changeProperty, removeProperty, removeNode, addMixin, removeMixin, lock, unlock, checkin, checkout, read.

<component>

<type>org.exoplatform.services.jcr.impl.ext.action.SessionActionCatalog</type>

<component-plugins>

<component-plugin>

<name>addActions</name>

<set-method>addPlugin</set-method>

<type>org.exoplatform.services.jcr.impl.ext.action.AddActionsPlugin</type>

<description>add actions plugin</description>

<init-params>

<object-param>

<name>actions</name>

<object type="org.exoplatform.services.jcr.impl.ext.action.AddActionsPlugin$ActionsConfig">

<field name="actions">

<collection type="java.util.ArrayList">

<value>

<object type="org.exoplatform.services.jcr.impl.ext.action.ActionConfiguration">

<field name="eventTypes"><string>addNode,removeNode</string></field>

<field name="path"><string>/test,/exo:test</string></field>

<field name="isDeep"><boolean>true</boolean></field>

<field name="nodeTypes"><string>nt:file,nt:folder,mix:lockable</string></field>

<!-- field name="workspace"><string>backup</string></field -->

<field name="actionClassName"><string>org.exoplatform.services.jcr.ext.DummyAction</string></field>

</object>

</value>

</collection>

</field>

</object>

</object-param>

</init-params>

</component-plugin>

</component-plugins>

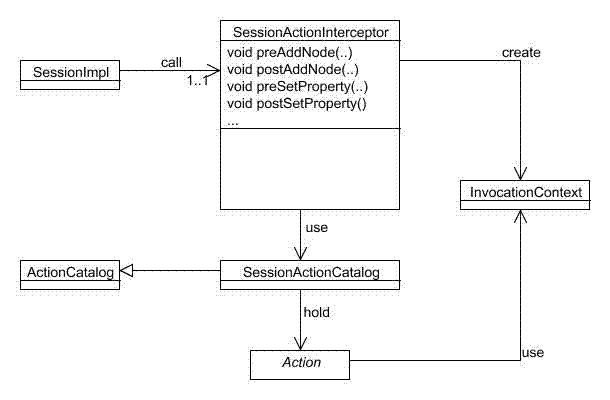

</component>The following is a picture about the interaction between Applications and JCR:

Every Content (JCR) dependent application interacts with eXo JCR via JSR-170 and eXo JCR API extension (mostly for administration) directly or using some intermediate Framework (Neither Application nor Framework should ever rely on Implementation directly!)

Content Application: all applications may use JCR as a data storage. Some of them are generic and completely decoupled from JCR API as interaction protocol hides Content storage nature (like WebDav client), some partially decoupled (like Command framework based), meaning that they do not use JCR API directly, and some (most part) use JSR-170 directly.

Frameworks is a special kind of JCR client that acts as an intermediate level between Content Repository and End Client Application. There are Protocol (WebDav, RMI or FTP servers for example) and Pattern (Command, Web(servlet), J2EE connector) specific Frameworks. It is possible to build a multi-layered (in framework sense) JCR application, for example Web application uses Web framework that uses Command framework underneath.

eXo JCR implementation supports two ways of Nodetypes registration:

From a NodeTypeValue POJO

From an XML document (stream)

The ExtendedNodeTypeManager (from JCR 1.11) interface provides the following methods related to registering node types:

public static final int IGNORE_IF_EXISTS = 0;

public static final int FAIL_IF_EXISTS = 2;

public static final int REPLACE_IF_EXISTS = 4;

/**

* Return NodeType for a given InternalQName.

*

* @param qname nodetype name

* @return NodeType

* @throws NoSuchNodeTypeException if no nodetype found with the name

* @throws RepositoryException Repository error

*/

NodeType findNodeType(InternalQName qname) throws NoSuchNodeTypeException, RepositoryException;

/**

* Registers node type using value object.

*

* @param nodeTypeValue

* @param alreadyExistsBehaviour

* @throws RepositoryException

*/

NodeType registerNodeType(NodeTypeValue nodeTypeValue, int alreadyExistsBehaviour) throws RepositoryException;

/**

* Registers all node types using XML binding value objects from xml stream.

*

* @param xml a InputStream

* @param alreadyExistsBehaviour a int

* @throws RepositoryException

*/

NodeTypeIterator registerNodeTypes(InputStream xml, int alreadyExistsBehaviour, String contentType)

throws RepositoryException;

/**

* Gives the {@link NodeTypeManager}

*

* @throws RepositoryException if another error occurs.

*/

NodeTypeDataManager getNodeTypesHolder() throws RepositoryException;

/**

* Return <code>NodeTypeValue</code> for a given nodetype name. Used for

* nodetype update. Value can be edited and registered via

* <code>registerNodeType(NodeTypeValue nodeTypeValue, int alreadyExistsBehaviour)</code>

* .

*

* @param ntName nodetype name

* @return NodeTypeValue

* @throws NoSuchNodeTypeException if no nodetype found with the name

* @throws RepositoryException Repository error

*/

NodeTypeValue getNodeTypeValue(String ntName) throws NoSuchNodeTypeException, RepositoryException;

/**

* Registers or updates the specified <code>Collection</code> of

* <code>NodeTypeValue</code> objects. This method is used to register or

* update a set of node types with mutual dependencies. Returns an iterator

* over the resulting <code>NodeType</code> objects. <p/> The effect of the

* method is "all or nothing"; if an error occurs, no node types are

* registered or updated. <p/> Throws an

* <code>InvalidNodeTypeDefinitionException</code> if a

* <code>NodeTypeDefinition</code> within the <code>Collection</code> is

* invalid or if the <code>Collection</code> contains an object of a type

* other than <code>NodeTypeDefinition</code> . <p/> Throws a

* <code>NodeTypeExistsException</code> if <code>allowUpdate</code> is

* <code>false</code> and a <code>NodeTypeDefinition</code> within the

* <code>Collection</code> specifies a node type name that is already

* registered. <p/> Throws an

* <code>UnsupportedRepositoryOperationException</code> if this implementation

* does not support node type registration.

*

* @param values a collection of <code>NodeTypeValue</code>s

* @param alreadyExistsBehaviour a int

* @return the registered node types.

* @throws InvalidNodeTypeDefinitionException if a

* <code>NodeTypeDefinition</code> within the

* <code>Collection</code> is invalid or if the

* <code>Collection</code> contains an object of a type other than

* <code>NodeTypeDefinition</code>.

* @throws NodeTypeExistsException if <code>allowUpdate</code> is

* <code>false</code> and a <code>NodeTypeDefinition</code> within

* the <code>Collection</code> specifies a node type name that is

* already registered.

* @throws UnsupportedRepositoryOperationException if this implementation does

* not support node type registration.

* @throws RepositoryException if another error occurs.

*/

public NodeTypeIterator registerNodeTypes(List<NodeTypeValue> values, int alreadyExistsBehaviour)

throws UnsupportedRepositoryOperationException, RepositoryException;

/**

* Unregisters the specified node type.

*

* @param name a <code>String</code>.

* @throws UnsupportedRepositoryOperationException if this implementation does

* not support node type registration.

* @throws NoSuchNodeTypeException if no registered node type exists with the

* specified name.

* @throws RepositoryException if another error occurs.

*/

public void unregisterNodeType(String name) throws UnsupportedRepositoryOperationException, NoSuchNodeTypeException,

RepositoryException;

/**

* Unregisters the specified set of node types.<p/> Used to unregister a set

* of node types with mutual dependencies.

*

* @param names a <code>String</code> array

* @throws UnsupportedRepositoryOperationException if this implementation does

* not support node type registration.

* @throws NoSuchNodeTypeException if one of the names listed is not a

* registered node type.

* @throws RepositoryException if another error occurs.

*/

public void unregisterNodeTypes(String[] names) throws UnsupportedRepositoryOperationException,

NoSuchNodeTypeException, RepositoryException;The NodeTypeValue interface represents a simple container structure used to define node types which are then registered through the ExtendedNodeTypeManager.registerNodeType method. The implementation of this interface does not contain any validation logic.

/** * @return Returns the declaredSupertypeNames. */ public List<String> getDeclaredSupertypeNames(); /** * @param declaredSupertypeNames *The declaredSupertypeNames to set. */ public void setDeclaredSupertypeNames(List<String> declaredSupertypeNames); /** * @return Returns the mixin. */ public boolean isMixin(); /** * @param mixin *The mixin to set. */ public void setMixin(boolean mixin); /** * @return Returns the name. */ public String getName(); /** * @param name *The name to set. */ public void setName(String name); /** * @return Returns the orderableChild. */ public boolean isOrderableChild(); /** * @param orderableChild *The orderableChild to set. */ public void setOrderableChild(boolean orderableChild); /** * @return Returns the primaryItemName. */ public String getPrimaryItemName(); /** * @param primaryItemName *The primaryItemName to set. */ public void setPrimaryItemName(String primaryItemName); /** * @return Returns the declaredChildNodeDefinitionNames. */ public List<NodeDefinitionValue> getDeclaredChildNodeDefinitionValues(); /** * @param declaredChildNodeDefinitionNames *The declaredChildNodeDefinitionNames to set. */ public void setDeclaredChildNodeDefinitionValues(List<NodeDefinitionValue> declaredChildNodeDefinitionValues); /** * @return Returns the declaredPropertyDefinitionNames. */ public List<PropertyDefinitionValue> getDeclaredPropertyDefinitionValues(); /** * @param declaredPropertyDefinitionNames *The declaredPropertyDefinitionNames to set. */ public void setDeclaredPropertyDefinitionValues(List<PropertyDefinitionValue> declaredPropertyDefinitionValues);

The NodeDefinitionValue interface extends ItemDefinitionValue with the addition of writing methods, enabling the characteristics of a child node definition to be set, after that the NodeDefinitionValue is added to a NodeTypeValue.

/** * @return Returns the defaultNodeTypeName. */ public String getDefaultNodeTypeName() /** * @param defaultNodeTypeName The defaultNodeTypeName to set. */ public void setDefaultNodeTypeName(String defaultNodeTypeName) /** * @return Returns the sameNameSiblings. */ public boolean isSameNameSiblings() /** * @param sameNameSiblings The sameNameSiblings to set. */ public void setSameNameSiblings(boolean multiple) /** * @return Returns the requiredNodeTypeNames. */ public List<String> getRequiredNodeTypeNames() /** * @param requiredNodeTypeNames The requiredNodeTypeNames to set. */ public void setRequiredNodeTypeNames(List<String> requiredNodeTypeNames)

The PropertyDefinitionValue interface extends ItemDefinitionValue with the addition of writing methods, enabling the characteristics of a child property definition to be set, after that the PropertyDefinitionValue is added to a NodeTypeValue.

/** * @return Returns the defaultValues. */ public List<String> getDefaultValueStrings(); /** * @param defaultValues The defaultValues to set. */ public void setDefaultValueStrings(List<String> defaultValues); /** * @return Returns the multiple. */ public boolean isMultiple(); /** * @param multiple The multiple to set. */ public void setMultiple(boolean multiple); /** * @return Returns the requiredType. */ public int getRequiredType(); /** * @param requiredType The requiredType to set. */ public void setRequiredType(int requiredType); /** * @return Returns the valueConstraints. */ public List<String> getValueConstraints(); /** * @param valueConstraints The valueConstraints to set. */ public void setValueConstraints(List<String> valueConstraints);

/** * @return Returns the autoCreate. */ public boolean isAutoCreate(); /** * @param autoCreate The autoCreate to set. */ public void setAutoCreate(boolean autoCreate); /** * @return Returns the mandatory. */ public boolean isMandatory(); /** * @param mandatory The mandatory to set. */ public void setMandatory(boolean mandatory); /** * @return Returns the name. */ public String getName(); /** * @param name The name to set. */ public void setName(String name); /** * @return Returns the onVersion. */ public int getOnVersion(); /** * @param onVersion The onVersion to set. */ public void setOnVersion(int onVersion); /** * @return Returns the readOnly. */ public boolean isReadOnly(); /** * @param readOnly The readOnly to set. */ public void setReadOnly(boolean readOnly);

eXo JCR implementation supports various methods of the node-type registration.

ExtendedNodeTypeManager nodeTypeManager = (ExtendedNodeTypeManager) session.getWorkspace()

.getNodeTypeManager();

InputStream is = MyClass.class.getResourceAsStream("mynodetypes.xml");

nodeTypeManager.registerNodeTypes(is,ExtendedNodeTypeManager.IGNORE_IF_EXISTS );ExtendedNodeTypeManager nodeTypeManager = (ExtendedNodeTypeManager) session.getWorkspace()

.getNodeTypeManager();

NodeTypeValue testNValue = new NodeTypeValue();

List<String> superType = new ArrayList<String>();

superType.add("nt:base");

testNValue.setName("exo:myNodeType");

testNValue.setPrimaryItemName("");

testNValue.setDeclaredSupertypeNames(superType);

List<PropertyDefinitionValue> props = new ArrayList<PropertyDefinitionValue>();

props.add(new PropertyDefinitionValue("*",

false,

false,

1,

false,

new ArrayList<String>(),

false,

0,

new ArrayList<String>()));

testNValue.setDeclaredPropertyDefinitionValues(props);

nodeTypeManager.registerNodeType(testNValue, ExtendedNodeTypeManager.FAIL_IF_EXISTS);If you want to replace existing node type definition, you should pass ExtendedNodeTypeManager.REPLACE_IF_EXISTS as a second parameter for the method ExtendedNodeTypeManager.registerNodeType.

ExtendedNodeTypeManager nodeTypeManager = (ExtendedNodeTypeManager) session.getWorkspace()

.getNodeTypeManager();

InputStream is = MyClass.class.getResourceAsStream("mynodetypes.xml");

.....

nodeTypeManager.registerNodeTypes(is,ExtendedNodeTypeManager.REPLACE_IF_EXISTS );Note

Node type is possible to remove only when the repository does not contain nodes of this type.

nodeTypeManager.unregisterNodeType("myNodeType");

NodeTypeValue myNodeTypeValue = nodeTypeManager.getNodeTypeValue(myNodeTypeName);

List<PropertyDefinitionValue> props = new ArrayList<PropertyDefinitionValue>();

props.add(new PropertyDefinitionValue("tt",

true,

true,

1,

false,

new ArrayList<String>(),

false,

PropertyType.STRING,

new ArrayList<String>()));

myNodeTypeValue.setDeclaredPropertyDefinitionValues(props);

nodeTypeManager.registerNodeType(myNodeTypeValue, ExtendedNodeTypeManager.REPLACE_IF_EXISTS);NodeTypeValue myNodeTypeValue = nodeTypeManager.getNodeTypeValue(myNodeTypeName);

List<NodeDefinitionValue> nodes = new ArrayList<NodeDefinitionValue>();

nodes.add(new NodeDefinitionValue("child",

false,

false,

1,

false,

"nt:base",

new ArrayList<String>(),

false));

testNValue.setDeclaredChildNodeDefinitionValues(nodes);

nodeTypeManager.registerNodeType(myNodeTypeValue, ExtendedNodeTypeManager.REPLACE_IF_EXISTS);Note that the existing data must be consistent before changing or removing a existing definition . JCR does not allow you to change the node type in the way in which the existing data would be incompatible with a new node type. But if these changes are needed, you can do it in several phases, consistently changing the node type and the existing data.

For example:

Add a new residual property definition with name "downloadCount" to the existing node type "myNodeType".

There are two limitations that do not allow us to make the task with a single call of registerNodeType method.

Existing nodes of the type "myNodeType", which does not contain properties "downloadCount" that conflicts with node type what we need.

Registered node type "myNodeType" will not allow us to add properties "downloadCount" because it has no such specific properties.

To complete the task, we need to make 3 steps:

Change the existing node type "myNodeType" by adding the mandatory property "downloadCount".

Add the node type "myNodeType" with the property "downloadCount" to all the existing node types.

Change the definition of the property "downloadCount" of the node type "myNodeType" to mandatory.

NodeTypeValue testNValue = nodeTypeManager.getNodeTypeValue("exo:myNodeType");

List<String> superType = testNValue.getDeclaredSupertypeNames();

superType.add("mix:versionable");

testNValue.setDeclaredSupertypeNames(superType);

nodeTypeManager.registerNodeType(testNValue, ExtendedNodeTypeManager.REPLACE_IF_EXISTS);The Registry Service is one of the key parts of the infrastructure built around eXo JCR. Each JCR that is based on service, applications, etc may have its own configuration, settings data and other data that have to be stored persistently and used by the approptiate service or application. ( We call it "Consumer").

The service acts as a centralized collector (Registry) for such data. Naturally, a registry storage is JCR based i.e. stored in some JCR workspace (one per Repository) as an Item tree under /exo:registry node.

Despite the fact that the structure of the tree is well defined (see the scheme below), it is not recommended for other services to manipulate data using JCR API directly for better flexibility. So the Registry Service acts as a mediator between a Consumer and its settings.

The proposed structure of the Registry Service storage is divided into 3 logical groups: services, applications and users:

exo:registry/ <-- registry "root" (exo:registry)

exo:services/ <-- service data storage (exo:registryGroup)

service1/

Consumer data (exo:registryEntry)

...

exo:applications/ <-- application data storage (exo:registryGroup)

app1/

Consumer data (exo:registryEntry)

...

exo:users/ <-- user personal data storage (exo:registryGroup)

user1/

Consumer data (exo:registryEntry)

...Each upper level eXo Service may store its configuration in eXo Registry. At first, start from xml-config (in jar etc) and then from Registry. In configuration file, you can add force-xml-configuration parameter to component to ignore reading parameters initialization from RegistryService and to use file instead:

<value-param> <name>force-xml-configuration</name> <value>true</value> </value-param>

The main functionality of the Registry Service is pretty simple and straightforward, it is described in the Registry abstract class as the following:

public abstract class Registry

{

/**

* Returns Registry node object which wraps Node of "exo:registry" type (the whole registry tree)

*/

public abstract RegistryNode getRegistry(SessionProvider sessionProvider) throws RepositoryConfigurationException,

RepositoryException;

/**

* Returns existed RegistryEntry which wraps Node of "exo:registryEntry" type

*/

public abstract RegistryEntry getEntry(SessionProvider sessionProvider, String entryPath)

throws PathNotFoundException, RepositoryException;

/**

* creates an entry in the group. In a case if the group does not exist it will be silently

* created as well

*/

public abstract void createEntry(SessionProvider sessionProvider, String groupPath, RegistryEntry entry)

throws RepositoryException;

/**

* updates an entry in the group

*/

public abstract void recreateEntry(SessionProvider sessionProvider, String groupPath, RegistryEntry entry)

throws RepositoryException;

/**

* removes entry located on entryPath (concatenation of group path / entry name)

*/

public abstract void removeEntry(SessionProvider sessionProvider, String entryPath) throws RepositoryException;

}As you can see it looks like a simple CRUD interface for the RegistryEntry object which wraps registry data for some Consumer as a Registry Entry. The Registry Service itself knows nothing about the wrapping data, it is Consumer's responsibility to manage and use its data in its own way.

To create an Entity Consumer you should know how to serialize the data to some XML structure and then create a RegistryEntry from these data at once or populate them in a RegistryEntry object (using RegistryEntry(String entryName) constructor and then obtain and fill a DOM document).

Example of RegistryService using:

RegistryService regService = (RegistryService) container

.getComponentInstanceOfType(RegistryService.class);

RegistryEntry registryEntry = regService.getEntry(sessionProvider,

RegistryService.EXO_SERVICES + "/my-service");

Document doc = registryEntry.getDocument();

String mySetting = getElementsByTagName("tagname").item(index).getTextContent();

.....RegistryService has two optional params: value parameter mixin-names and properties parameter locations. The mixin-names is used for adding additional mixins to nodes exo:registry, exo:applications, exo:services, exo:users and exo:groups of RegistryService. This allows the top level applications to manage these nodes in special way. Locations is used to mention where exo:registry is placed for each repository. The name of each property is interpreted as a repository name and its value as a workspace name (a system workspace by default).

<component>

<type>org.exoplatform.services.jcr.ext.registry.RegistryService</type>

<init-params>

<values-param>

<name>mixin-names</name>

<value>exo:hideable</value>

</values-param>

<properties-param>

<name>locations</name>

<property name="db1" value="ws2"/>

</properties-param>

</init-params>

</component>Since version 1.11, eXo JCR implementation supports namespaces altering.

ExtendedNamespaceRegistry namespaceRegistry = (ExtendedNamespaceRegistry) workspace.getNamespaceRegistry();

namespaceRegistry.registerNamespace("newMapping", "http://dumb.uri/jcr");ExtendedNamespaceRegistry namespaceRegistry = (ExtendedNamespaceRegistry) workspace.getNamespaceRegistry();

namespaceRegistry.registerNamespace("newMapping", "http://dumb.uri/jcr");

namespaceRegistry.registerNamespace("newMapping2", "http://dumb.uri/jcr");Support of node types and namespaces is required by the JSR-170 specification. Beyond the methods required by the specification, eXo JCR has its own API extension for the Node type registration as well as the ability to declaratively define node types in the Repository at the start-up time.

Node type registration extension is declared in org.exoplatform.services.jcr.core.nodetype.ExtendedNodeTypeManager interface

Your custom service can register some neccessary predefined node types at the start-up time. The node definition should be placed in a special XML file (see DTD below) and declared in the service's configuration file thanks to eXo component plugin mechanism, described as follows:

<external-component-plugins>

<target-component>org.exoplatform.services.jcr.RepositoryService</target-component>

<component-plugin>

<name>add.nodeType</name>

<set-method>addPlugin</set-method>

<type>org.exoplatform.services.jcr.impl.AddNodeTypePlugin</type>

<init-params>

<values-param>

<name>autoCreatedInNewRepository</name>

<description>Node types configuration file</description>

<value>jar:/conf/test/nodetypes-tck.xml</value>

<value>jar:/conf/test/nodetypes-impl.xml</value>

</values-param>

<values-param>

<name>repo1</name>

<description>Node types configuration file for repository with name repo1</description>

<value>jar:/conf/test/nodetypes-test.xml</value>

</values-param>

<values-param>

<name>repo2</name>

<description>Node types configuration file for repository with name repo2</description>

<value>jar:/conf/test/nodetypes-test2.xml</value>

</values-param>

</init-params>

</component-plugin>There are two types of registration. The first type is the registration of node types in all created repositories, it is configured in values-param with the name autoCreatedInNewRepository. The second type is registration of node types in specified repository and it is configured in values-param with the name of repository.

Node type definition file format:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE nodeTypes [

<!ELEMENT nodeTypes (nodeType)*>

<!ELEMENT nodeType (supertypes?|propertyDefinitions?|childNodeDefinitions?)>

<!ATTLIST nodeType

name CDATA #REQUIRED

isMixin (true|false) #REQUIRED

hasOrderableChildNodes (true|false)

primaryItemName CDATA

>

<!ELEMENT supertypes (supertype*)>

<!ELEMENT supertype (CDATA)>

<!ELEMENT propertyDefinitions (propertyDefinition*)>

<!ELEMENT propertyDefinition (valueConstraints?|defaultValues?)>

<!ATTLIST propertyDefinition

name CDATA #REQUIRED

requiredType (String|Date|Path|Name|Reference|Binary|Double|Long|Boolean|undefined) #REQUIRED

autoCreated (true|false) #REQUIRED

mandatory (true|false) #REQUIRED

onParentVersion (COPY|VERSION|INITIALIZE|COMPUTE|IGNORE|ABORT) #REQUIRED

protected (true|false) #REQUIRED

multiple (true|false) #REQUIRED

>

<!-- For example if you need to set ValueConstraints [],

you have to add an empty element <valueConstraints/>.

The same order is for other properties like defaultValues, requiredPrimaryTypes etc.

-->

<!ELEMENT valueConstraints (valueConstraint*)>

<!ELEMENT valueConstraint (CDATA)>

<!ELEMENT defaultValues (defaultValue*)>

<!ELEMENT defaultValue (CDATA)>

<!ELEMENT childNodeDefinitions (childNodeDefinition*)>

<!ELEMENT childNodeDefinition (requiredPrimaryTypes)>

<!ATTLIST childNodeDefinition

name CDATA #REQUIRED

defaultPrimaryType CDATA #REQUIRED

autoCreated (true|false) #REQUIRED

mandatory (true|false) #REQUIRED

onParentVersion (COPY|VERSION|INITIALIZE|COMPUTE|IGNORE|ABORT) #REQUIRED

protected (true|false) #REQUIRED

sameNameSiblings (true|false) #REQUIRED

>

<!ELEMENT requiredPrimaryTypes (requiredPrimaryType+)>

<!ELEMENT requiredPrimaryType (CDATA)>

]>Default namespaces are registered by repository at the start-up time

Your custom service can extend a set of namespaces with some application specific ones, declaring it in service's configuration file thanks to eXo component plugin mechanism, described as follows:

<component-plugin>

<name>add.namespaces</name>

<set-method>addPlugin</set-method>

<type>org.exoplatform.services.jcr.impl.AddNamespacesPlugin</type>

<init-params>

<properties-param>

<name>namespaces</name>

<property name="test" value="http://www.test.org/test"/>

</properties-param>

</init-params>

</component-plugin>This section provides you the knowledge about eXo JCR configuration in details, including the basic and advanced configuration.

Like other eXo services, eXo JCR can be configured and used in the portal or embedded mode (as a service embedded in GateIn) and in standalone mode.

In Embedded mode, JCR services are registered in the Portal container and the second option is to use a Standalone container. The main difference between these container types is that the first one is intended to be used in a Portal (Web) environment, while the second one can be used standalone (see the comprehensive page Service Configuration for Beginners for more details).

The following setup procedure is used to obtain a Standalone configuration (see more in Container configuration):

Configuration that is set explicitly using StandaloneContainer.addConfigurationURL(String url) or StandaloneContainer.addConfigurationPath(String path) before getInstance()

Configuration from $base:directory/exo-configuration.xml or $base:directory/conf/exo-configuration.xml file. Where $base:directory is either AS's home directory in case of J2EE AS environment or just the current directory in case of a standalone application.

/conf/exo-configuration.xml in the current classloader (e.g. war, ear archive)

Configuration from $service_jar_file/conf/portal/configuration.xml. WARNING: Don't rely on some concrete jar's configuration if you have more than one jar containing conf/portal/configuration.xml file. In this case choosing a configuration is unpredictable.

JCR service configuration looks like:

<component>

<key>org.exoplatform.services.jcr.RepositoryService</key>

<type>org.exoplatform.services.jcr.impl.RepositoryServiceImpl</type>

</component>

<component>

<key>org.exoplatform.services.jcr.config.RepositoryServiceConfiguration</key>

<type>org.exoplatform.services.jcr.impl.config.RepositoryServiceConfigurationImpl</type>

<init-params>

<value-param>

<name>conf-path</name>

<description>JCR repositories configuration file</description>

<value>jar:/conf/standalone/exo-jcr-config.xml</value>

</value-param>

<value-param>

<name>max-backup-files</name>

<value>5</value>

</value-param>

<properties-param>

<name>working-conf</name>

<description>working-conf</description>

<property name="source-name" value="jdbcjcr" />

<property name="dialect" value="hsqldb" />

<property name="persister-class-name" value="org.exoplatform.services.jcr.impl.config.JDBCConfigurationPersister" />

</properties-param>

</init-params>

</component>conf-path : a path to a RepositoryService JCR Configuration.

max-backup-files : max number of backup files. This option lets you specify the number of stored backups. Number of backups can't exceed this value. File which will exceed the limit will replace the oldest file.

working-conf : optional; JCR configuration persister configuration. If there isn't a working-conf, the persister will be disabled.

The Configuration is defined in an XML file (see DTD below).

JCR Service can use multiple Repositories and each repository can have multiple Workspaces.

From v.1.9 JCR, repositories configuration parameters support human-readable formats of values. They are all case-insensitive:

Numbers formats: K,KB - kilobytes, M,MB - megabytes, G,GB - gigabytes, T,TB - terabytes. Examples: 100.5 - digit 100.5, 200k - 200 Kbytes, 4m - 4 Mbytes, 1.4G - 1.4 Gbytes, 10T - 10 Tbytes

Time format endings: ms - milliseconds, m - minutes, h - hours, d - days, w - weeks, if no ending - seconds. Examples: 500ms - 500 milliseconds, 20 - 20 seconds, 30m - 30 minutes, 12h - 12 hours, 5d - 5 days, 4w - 4 weeks.

Service configuration may be placed in jar:/conf/standalone/exo-jcr-config.xml for standalone mode. For portal mode, it is located in the portal web application portal/WEB-INF/conf/jcr/repository-configuration.xml.

default-repository: The name of a default repository (one returned by RepositoryService.getRepository()).

repositories: The list of repositories.

name: The name of a repository.

default-workspace: The name of a workspace obtained using Session's login() or login(Credentials) methods (ones without an explicit workspace name).

system-workspace: The name of workspace where /jcr:system node is placed.

security-domain: The name of a security domain for JAAS authentication.

access-control: The name of an access control policy. There can be 3 types: optional - ACL is created on-demand(default), disable - no access control, mandatory - an ACL is created for each added node(not supported yet).

authentication-policy: The name of an authentication policy class.

workspaces: The list of workspaces.

session-max-age: The time after which an idle session will be removed (called logout). If session-max-age is not set up, idle session will never be removed.

lock-remover-max-threads: Number of threads that can serve LockRemover tasks. Default value is 1. Repository may have many workspaces, each workspace have own LockManager. JCR supports Locks with defined lifetime. Such a lock must be removed is it become expired. That is what LockRemovers does. But LockRemovers is not an independent timer-threads, its a task that executed each 30 seconds. Such a task is served by ThreadPoolExecutor which may use different number of threads.

name: The name of a workspace

container: Workspace data container (physical storage) configuration.

initializer: Workspace initializer configuration.

cache: Workspace storage cache configuration.

query-handler: Query handler configuration.

auto-init-permissions: DEPRECATED in JCR 1.9 (use initializer). Default permissions of the root node. It is defined as a set of semicolon-delimited permissions containing a group of space-delimited identities (user, group, etc, see Organization service documentation for details) and the type of permission. For example, any read; :/admin read;:/admin add_node; :/admin set_property;:/admin remove means that users from group admin have all permissions and other users have only a 'read' permission.

Note

The value-storage element is optional. If you don't include it, the values will be stored as BLOBs inside the database.

value-storage: Optional value Storage plugin definition.

class: A value storage plugin class name (attribute).

properties: The list of properties (name-value pairs) for a concrete Value Storage plugin.

filters: The list of filters defining conditions when this plugin is applicable.

class: Initializer implementation class.

properties: The list of properties (name-value pairs). Properties are supported.

root-nodetype: The node type for root node initialization.

root-permissions: Default permissions of the root node. It is defined as a set of semicolon-delimited permissions containing a group of space-delimited identities (user, group etc, see Organization service documentation for details) and the type of permission. For example any read; :/admin read;:/admin add_node; :/admin set_property;:/admin remove means that users from group admin have all permissions and other users have only a 'read' permission.

Configurable initializer adds a capability to override workspace initial startup procedure (used for Clustering).

enabled: If workspace cache is enabled or not.

class: Cache implementation class, optional from 1.9. Default value is. org.exoplatform.services.jcr.impl.dataflow.persistent.LinkedWorkspaceStorageCacheImpl.

Cache can be configured to use concrete implementation of WorkspaceStorageCache interface. JCR core has two implementation to use:

LinkedWorkspaceStorageCacheImpl - default, with configurable read behavior and statistic.

WorkspaceStorageCacheImpl - pre 1.9, still can be used.

properties: The list of properties (name-value pairs) for Workspace cache.

max-size: Cache maximum size (maxSize prior to v.1.9).

live-time: Cached item live time (liveTime prior to v.1.9).

From 1.9 LinkedWorkspaceStorageCacheImpl supports additional optional parameters.

statistic-period: Period (time format) of cache statistic thread execution, 5 minutes by default.

statistic-log: If true cache statistic will be printed to default logger (log.info), false by default or not.

statistic-clean: If true cache statistic will be cleaned after was gathered, false by default or not.

cleaner-period: Period of the eldest items remover execution, 20 minutes by default.

blocking-users-count: Number of concurrent users allowed to read cache storage, 0 - unlimited by default.

class: A Query Handler class name.

properties: The list of properties (name-value pairs) for a Query Handler (indexDir).

Properties and advanced features described in Search Configuration.

time-out: Time after which the unused global lock will be removed.

persister: A class for storing lock information for future use. For example, remove lock after jcr restart.

path: A lock folder. Each workspace has its own one.

Note

Also see lock-remover-max-threads repository configuration parameter.

<!ELEMENT repository-service (repositories)> <!ATTLIST repository-service default-repository NMTOKEN #REQUIRED> <!ELEMENT repositories (repository)> <!ELEMENT repository (security-domain,access-control,session-max-age,authentication-policy,workspaces)> <!ATTLIST repository default-workspace NMTOKEN #REQUIRED name NMTOKEN #REQUIRED system-workspace NMTOKEN #REQUIRED > <!ELEMENT security-domain (#PCDATA)> <!ELEMENT access-control (#PCDATA)> <!ELEMENT session-max-age (#PCDATA)> <!ELEMENT authentication-policy (#PCDATA)> <!ELEMENT workspaces (workspace+)> <!ELEMENT workspace (container,initializer,cache,query-handler)> <!ATTLIST workspace name NMTOKEN #REQUIRED> <!ELEMENT container (properties,value-storages)> <!ATTLIST container class NMTOKEN #REQUIRED> <!ELEMENT value-storages (value-storage+)> <!ELEMENT value-storage (properties,filters)> <!ATTLIST value-storage class NMTOKEN #REQUIRED> <!ELEMENT filters (filter+)> <!ELEMENT filter EMPTY> <!ATTLIST filter property-type NMTOKEN #REQUIRED> <!ELEMENT initializer (properties)> <!ATTLIST initializer class NMTOKEN #REQUIRED> <!ELEMENT cache (properties)> <!ATTLIST cache enabled NMTOKEN #REQUIRED class NMTOKEN #REQUIRED > <!ELEMENT query-handler (properties)> <!ATTLIST query-handler class NMTOKEN #REQUIRED> <!ELEMENT access-manager (properties)> <!ATTLIST access-manager class NMTOKEN #REQUIRED> <!ELEMENT lock-manager (time-out,persister)> <!ELEMENT time-out (#PCDATA)> <!ELEMENT persister (properties)> <!ELEMENT properties (property+)> <!ELEMENT property EMPTY>

Products that use eXo JCR, sometimes missuse it since they continue to use a session that has been closed through a method call on a node, a property or even the session itself. To prevent bad practices we propose three modes which are the folllowing:

If the system property exo.jcr.prohibit.closed.session.usage has been set to true, then a RepositoryException will be thrown any time an application will try to access to a closed session. In the stack trace, you will be able to know the call stack that closes the session.

If the system property exo.jcr.prohibit.closed.session.usage has not been set and the system property exo.product.developing has been set to true, then a warning will be logged in the log file with the full stack trace in order to help identifying the root cause of the issue. In the stack trace, you will be able to know the call stack that closes the session.

If none of the previous system properties have been set, then we will ignore that the issue and let the application use the closed session as it was possible before without doing anything in order to allow applications to migrate step by step.

Since usage of closed session affects usage of closed datasource we propose three ways to resolve such kind of isses:

If the system property exo.jcr.prohibit.closed.datasource.usage is set to true (default value) then a SQLException will be thrown any time an application will try to access to a closed datasource. In the stack trace, you will be able to know the call stack that closes the datasource.

If the system property exo.jcr.prohibit.closed.datasource.usage is set to false and the system property exo.product.developing is set to true, then a warning will be logged in the log file with the full stack trace in order to help identifying the root cause of the issue. In the stack trace, you will be able to know the call stack that closes the datasource.

If the system property exo.jcr.prohibit.closed.datasource.usage is set to false and the system property exo.product.developing is set to false usage of closed datasource will be allowed and nothing will be logged or thrown.

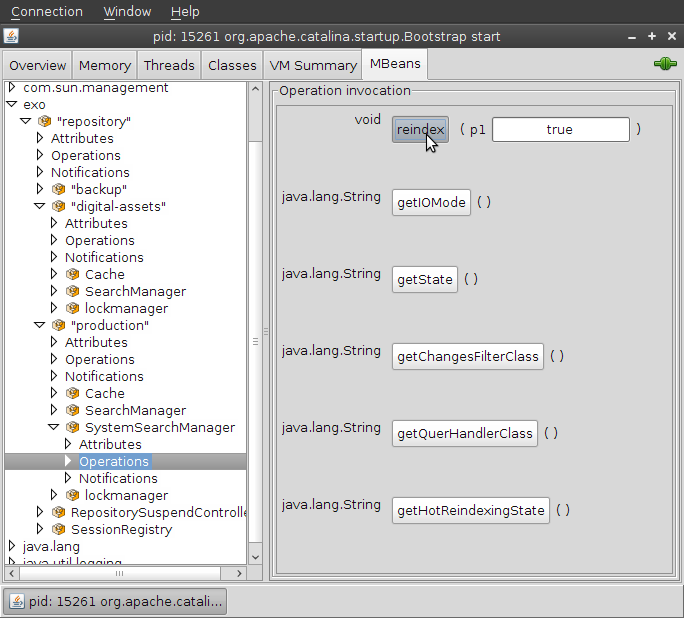

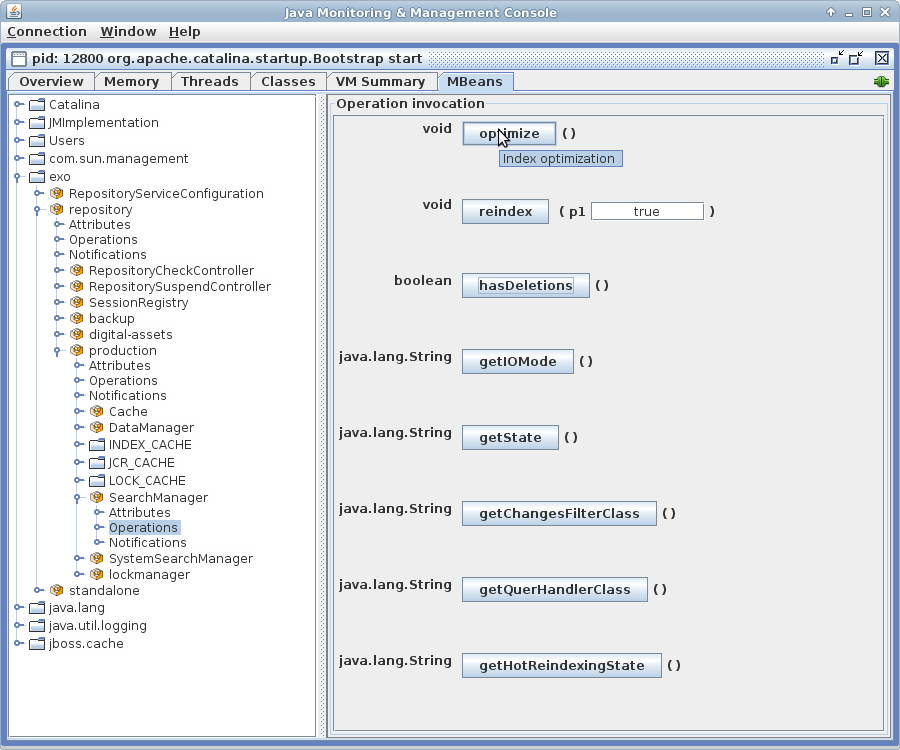

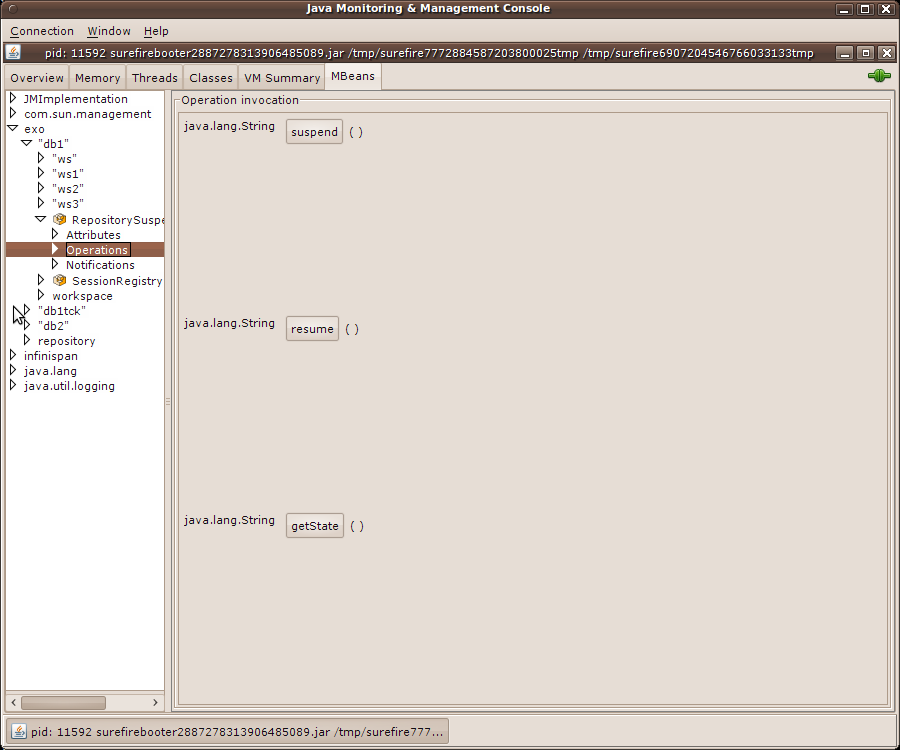

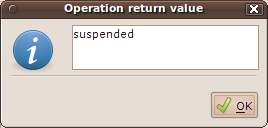

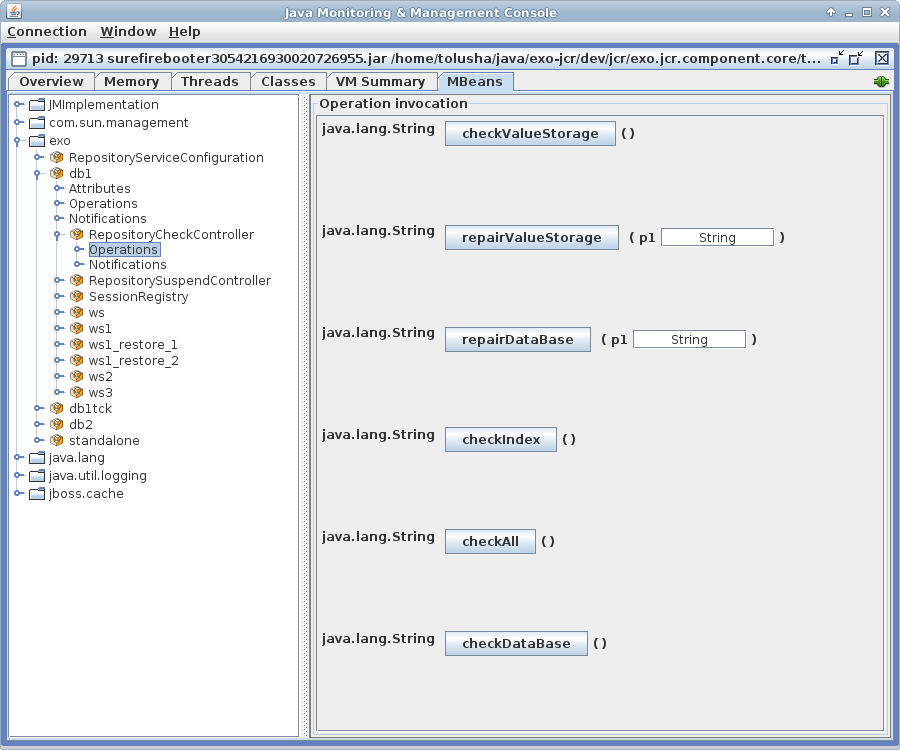

The effective configuration of all the repositories and their workspaces can be known thanks to the method getConfigurationXML() that is exposed through JMX at the RepositoryServiceConfiguration level in case of a PortalContainer the name of the related MBean will be of type exo:portal=${portal-container-name},service=RepositoryServiceConfiguration. This method will give you the effective configuration in XML format that has been really interpreted by the the JCR core. This could be helpful to understand how your repositories/workspaces are configured especially if you would like to overwrite the configuration for some reasons.

You can configure values of properties defined in the file repository-configuration.xml using System Properties. This is quite helpful especially when you want to change the default configuration of all the workspaces for example if we want to disable the rdms indexing for all the workspace without this kind of improvement it is very error prone. For all components that can be configured thanks to properties such as container, value-storage, workspace-initializer, cache, query-handler, lock-manager, access-manager and persister the logic for example for the component 'container' and the property called 'foo' will be the following:

If we have a system property called exo.jcr.config.force.workspace.repository_collaboration.container.foo that has been defined, its value will be used for the configuration of the repository 'repository' and the workspace 'collaboration'

If we have a system property called exo.jcr.config.force.repository.repository.container.foo that has been defined, its value will be used for the configuration of all the workspaces of the repository 'repository' except the workspaces for which we configured the same property using system properties defined in #1

If we have a system property called exo.jcr.config.force.all.container.foo that has been defined, its value will be used for the configuration of all the workspaces except the workspaces for which we configured the same property using system properties defined in #1 or #2

If we have a property 'foo' configured for the repository 'repository' and the workspace 'collaboration' and we have no system properties corresponding to rule #1, #2 and #3, we will use this value (current behavior)

If the previous rules don't allow to give a value to the property 'foo', we will then check the default value in the following order exo.jcr.config.default.workspace.repository_collaboration.container.foo, exo.jcr.config.default.repository.repository.container.foo, exo.jcr.config.default.all.container.foo

To turn on this feature you need to define a component called SystemParametersPersistenceConfigurator. A simple example:

<component>

<key>org.exoplatform.services.jcr.config.SystemParametersPersistenceConfigurator</key>

<type>org.exoplatform.services.jcr.config.SystemParametersPersistenceConfigurator</type>

<init-params>

<value-param>

<name>file-path</name>

<value>target/temp</value>

</value-param>

<values-param>

<name>unmodifiable</name>

<value>cache.test-parameter-I</value>

</values-param>

<values-param>

<name>before-initialize</name>

<value>value-storage.enabled</value>

</values-param>

</init-params>

</component>To make the configuration process easier here you can define thee parameters.

file-path — this is mandatory parameter which defines the location of the file where all parameters configured on pervious launch of AS are stored.

unmodifiable — this defines the list of parameters which cannot be modified using system properties

before-initialize — this defines the list of parameters which can be set only for not initialized workspaces (e.g. during the first start of the AS)

The parameter in the list have the following format: {component-name}.{parameter-name}. This takes affect for every workspace component called {component-name}.

Please take into account that if this component is not defined in the configuration, the workspace configuration overriding using system properties mechanism will be disabled. In other words: if you don't configure SystemParametersPersistenceConfigurator, the system properties are ignored.

Whenever relational database is used to store multilingual text data of eXo Java Content Repository, it is necessary to adapt configuration in order to support UTF-8 encoding. Here is a short HOWTO instruction for several supported RDBMS with examples.

The configuration file you have to modify: .../webapps/portal/WEB-INF/conf/jcr/repository-configuration.xml

Note

Datasource jdbcjcr used in examples can be

configured via InitialContextInitializer

component.

In order to run multilanguage JCR on an Oracle backend Unicode

encoding for characters set should be applied to the database. Other

Oracle globalization parameters don't make any impact. The only property

to modify is NLS_CHARACTERSET.

We have tested NLS_CHARACTERSET =

AL32UTF8 and it works well for many European and

Asian languages.

Example of database configuration (used for JCR testing):

NLS_LANGUAGE AMERICAN NLS_TERRITORY AMERICA NLS_CURRENCY $ NLS_ISO_CURRENCY AMERICA NLS_NUMERIC_CHARACTERS ., NLS_CHARACTERSET AL32UTF8 NLS_CALENDAR GREGORIAN NLS_DATE_FORMAT DD-MON-RR NLS_DATE_LANGUAGE AMERICAN NLS_SORT BINARY NLS_TIME_FORMAT HH.MI.SSXFF AM NLS_TIMESTAMP_FORMAT DD-MON-RR HH.MI.SSXFF AM NLS_TIME_TZ_FORMAT HH.MI.SSXFF AM TZR NLS_TIMESTAMP_TZ_FORMAT DD-MON-RR HH.MI.SSXFF AM TZR NLS_DUAL_CURRENCY $ NLS_COMP BINARY NLS_LENGTH_SEMANTICS BYTE NLS_NCHAR_CONV_EXCP FALSE NLS_NCHAR_CHARACTERSET AL16UTF16

Warning

JCR doesn't use NVARCHAR columns, so that the value of the parameter NLS_NCHAR_CHARACTERSET does not matter for JCR.

Create database with Unicode encoding and use Oracle dialect for the Workspace Container:

<workspace name="collaboration">

<container class="org.exoplatform.services.jcr.impl.storage.jdbc.optimisation.CQJDBCWorkspaceDataContainer">

<properties>

<property name="source-name" value="jdbcjcr" />

<property name="dialect" value="oracle" />

<property name="multi-db" value="false" />

<property name="max-buffer-size" value="200k" />

<property name="swap-directory" value="target/temp/swap/ws" />

</properties>

.....DB2 Universal Database (DB2 UDB) supports UTF-8 and UTF-16/UCS-2. When a Unicode database is created, CHAR, VARCHAR, LONG VARCHAR data are stored in UTF-8 form. It's enough for JCR multi-lingual support.

Example of UTF-8 database creation:

DB2 CREATE DATABASE dbname USING CODESET UTF-8 TERRITORY US

Create database with UTF-8 encoding and use db2 dialect for Workspace Container on DB2 v.9 and higher:

<workspace name="collaboration">

<container class="org.exoplatform.services.jcr.impl.storage.jdbc.optimisation.CQJDBCWorkspaceDataContainer">

<properties>

<property name="source-name" value="jdbcjcr" />

<property name="dialect" value="db2" />

<property name="multi-db" value="false" />

<property name="max-buffer-size" value="200k" />

<property name="swap-directory" value="target/temp/swap/ws" />

</properties>

.....Note

For DB2 v.8.x support change the property "dialect" to db2v8.

JCR MySQL-backend requires special dialect MySQL-UTF8 to be used for internationalization support. But the database default charset should be latin1 to use limited index space effectively (1000 bytes for MyISAM engine, 767 for InnoDB). If database default charset is multibyte, a JCR database initialization error is thrown concerning index creation failure. In other words, JCR can work on any singlebyte default charset of database, with UTF8 supported by MySQL server. But we have tested it only on latin1 database default charset.

Repository configuration, workspace container entry example:

<workspace name="collaboration">

<container class="org.exoplatform.services.jcr.impl.storage.jdbc.optimisation.CQJDBCWorkspaceDataContainer">

<properties>

<property name="source-name" value="jdbcjcr" />

<property name="dialect" value="mysql-utf8" />

<property name="multi-db" value="false" />

<property name="max-buffer-size" value="200k" />

<property name="swap-directory" value="target/temp/swap/ws" />

</properties>

.....You will need also to indicate the charset name either at server level using the server parameter --character-set-server (find more details there ) or at datasource configuration level by adding a new property as below:

<property name="connectionProperties" value="useUnicode=yes;characterEncoding=utf8;characterSetResults=UTF-8;" />

On PostgreSQL/PostgrePlus-backend, multilingual support can be enabled in different ways:

Using the locale features of the operating system to provide locale-specific collation order, number formatting, translated messages, and other aspects. UTF-8 is widely used on Linux distributions by default, so it can be useful in such case.

Providing a number of different character sets defined in the PostgreSQL/PostgrePlus server, including multiple-byte character sets, to support storing text of any languages, and providing character set translation between client and server. We recommend to use UTF-8 database charset, it will allow any-to-any conversations and make this issue transparent for the JCR.

Create database with UTF-8 encoding and use a PgSQL dialect for Workspace Container:

<workspace name="collaboration">

<container class="org.exoplatform.services.jcr.impl.storage.jdbc.optimisation.CQJDBCWorkspaceDataContainer">

<properties>

<property name="source-name" value="jdbcjcr" />

<property name="dialect" value="pgsql" />

<property name="multi-db" value="false" />

<property name="max-buffer-size" value="200k" />

<property name="swap-directory" value="target/temp/swap/ws" />

</properties>

.....Frequently, a single database instance must be shared by several other applications. But some of our customers have also asked for a way to host several JCR instances in the same database instance. To fulfill this need, we had to review our queries and scope them to the current schema; it is now possible to have one JCR instance per DB schema instead of per DB instance. To benefit of the work done for this feature you will need to apply the configuration changes described below.