JBoss Cache is a thread-safe caching API and uses its own efficient mechanisms for controlling concurrent access. It uses a pessimistic locking scheme by default for this purpose. Optimistic locking may alternatively be used, and is discussed later.

Locking is done internally, at the node level. For example, when we want to access "/a/b/c", a lock will be acquired for nodes "a", "b" and "c". When the same transaction wants to access "/a/b/c/d", we already hold locks for "a", "b" and "c", so we only need to acquire a lock for "d".
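The node-level lock acquisition described above can be modelled with a small, self-contained Java sketch. It is not JBoss Cache's internal code: the class name PathLocker is hypothetical, and the sketch only tracks which nodes each owner already holds. Conflicts between different owners are deliberately not modelled here.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical model of node-level locking along an Fqn path.
// Every node on the path is locked individually; nodes that the
// same owner already holds are not locked a second time.
class PathLocker {
    // owner (transaction or thread) -> node paths it currently holds
    private final Map<Object, Set<String>> held = new HashMap<>();

    /** Splits "/a/b/c" into the node paths "/a", "/a/b", "/a/b/c". */
    static List<String> pathsOf(String fqn) {
        List<String> paths = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        for (String part : fqn.split("/")) {
            if (part.isEmpty()) continue; // skip the empty segment before the leading "/"
            current.append('/').append(part);
            paths.add(current.toString());
        }
        return paths;
    }

    /** Locks every node on the path and returns only the newly acquired ones. */
    List<String> lockPath(Object owner, String fqn) {
        Set<String> mine = held.computeIfAbsent(owner, o -> new HashSet<>());
        List<String> acquired = new ArrayList<>();
        for (String node : pathsOf(fqn)) {
            if (mine.add(node)) { // add() returns false if the owner already holds it
                acquired.add(node);
            }
        }
        return acquired;
    }

    /** Releases everything the owner holds, e.g. when its transaction ends. */
    void releaseAll(Object owner) {
        held.remove(owner);
    }
}
```

With the same owner, locking "/a/b/c" acquires "/a", "/a/b" and "/a/b/c", and a subsequent lock on "/a/b/c/d" acquires only "/a/b/c/d".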

Lock owners are either transactions (the call is made within the scope of an existing transaction) or threads (no transaction is associated with the call). Regardless, a transaction or a thread is internally transformed into an instance of GlobalTransaction, which is used as a globally unique identifier for modifications across a cluster. For example, when we run a two-phase commit protocol across the cluster, the GlobalTransaction uniquely identifies a unit of work across the cluster.
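To make the owner mapping concrete, here is a minimal sketch, assuming a globally unique id can be formed from a node address plus a local counter. GlobalTxId and LockOwnerRegistry are hypothetical stand-ins, not the real GlobalTransaction class, whose fields may differ.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical stand-in for GlobalTransaction: a node address plus a
// locally increasing counter gives an id that is unique across the cluster.
final class GlobalTxId {
    private static final AtomicLong COUNTER = new AtomicLong();
    final String nodeAddress;
    final long id;

    private GlobalTxId(String nodeAddress, long id) {
        this.nodeAddress = nodeAddress;
        this.id = id;
    }

    static GlobalTxId next(String nodeAddress) {
        return new GlobalTxId(nodeAddress, COUNTER.incrementAndGet());
    }

    @Override public boolean equals(Object o) {
        if (!(o instanceof GlobalTxId)) return false;
        GlobalTxId other = (GlobalTxId) o;
        return id == other.id && nodeAddress.equals(other.nodeAddress);
    }

    @Override public int hashCode() { return Objects.hash(nodeAddress, id); }

    @Override public String toString() { return "GlobalTxId:" + nodeAddress + ":" + id; }
}

// Maps the caller's transaction (or the calling thread, if there is no
// transaction) to a single GlobalTxId, which then acts as the lock owner.
final class LockOwnerRegistry {
    private final String nodeAddress;
    private final Map<Object, GlobalTxId> owners = new HashMap<>();

    LockOwnerRegistry(String nodeAddress) { this.nodeAddress = nodeAddress; }

    GlobalTxId ownerFor(Object currentTx) {
        Object key = (currentTx != null) ? currentTx : Thread.currentThread();
        return owners.computeIfAbsent(key, k -> GlobalTxId.next(nodeAddress));
    }
}
```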

Locks can be read or write locks. Write locks serialize both read and write access, whereas read locks only exclude writers. When a write lock is held, no other write or read locks can be acquired. When a read lock is held, others can acquire read locks; however, to acquire a write lock, one has to wait until all read locks have been released. When scheduled concurrently, write locks always have precedence over read locks. Note that (if enabled) read locks can be upgraded to write locks.
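The compatibility rules above can be captured in a small, non-blocking Java model. This is a sketch only: OwnerRWLock is a hypothetical class whose owners are arbitrary objects (standing in for transactions), and its try methods return false where a real lock would make the caller wait. Writer precedence over queued readers is not modelled.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical non-blocking model of a read-write lock owned by
// transactions (any object) rather than threads.
class OwnerRWLock {
    private final Set<Object> readers = new HashSet<>();
    private Object writer; // null when no write lock is held
    private final boolean upgradesEnabled;

    OwnerRWLock(boolean upgradesEnabled) { this.upgradesEnabled = upgradesEnabled; }

    boolean tryRead(Object owner) {
        if (writer != null && writer != owner) return false; // a writer excludes readers
        readers.add(owner);                                  // readers share the lock
        return true;
    }

    boolean tryWrite(Object owner) {
        if (writer != null) return writer == owner;           // already write-locked
        boolean onlySelfReads = readers.isEmpty()
                || (readers.size() == 1 && readers.contains(owner));
        if (!onlySelfReads) return false;                     // wait for other readers
        if (!readers.isEmpty() && !upgradesEnabled) return false; // upgrade not allowed
        readers.remove(owner);                                // read lock becomes write lock
        writer = owner;
        return true;
    }

    void release(Object owner) {
        readers.remove(owner);
        if (writer == owner) writer = null;
    }
}
```

Two transactions can share read locks, a third cannot write until both have released, and a sole reader may upgrade when upgrades are enabled.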

Using read-write locks helps in the following scenario: consider a tree with entries "/a/b/n1" and "/a/b/n2". With exclusive write locks only, when Tx1 accesses "/a/b/n1", Tx2 cannot access "/a/b/n2" until Tx1 has completed and released its locks. With read-write locks, however, this is possible, because Tx1 acquires read locks on "/a" and "/a/b" and a write lock on "/a/b/n1". Tx2 is then able to acquire read locks on "/a" and "/a/b" as well, plus a write lock on "/a/b/n2". This allows for more concurrency in accessing the cache.
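The "/a/b/n1" versus "/a/b/n2" scenario can be replayed in a single-threaded Java sketch. NodeLockTable is hypothetical: it read-locks each ancestor and write-locks the target node, or write-locks every node when exclusiveOnly is true, to mimic a scheme with exclusive locks only. For brevity, partially acquired locks are not rolled back on failure, which a real implementation would have to do.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical, single-threaded model of per-node locks owned by transactions.
class NodeLockTable {
    private final Map<String, Set<Object>> readers = new HashMap<>();
    private final Map<String, Object> writers = new HashMap<>();
    private final boolean exclusiveOnly; // true: every lock is a write lock

    NodeLockTable(boolean exclusiveOnly) { this.exclusiveOnly = exclusiveOnly; }

    private boolean tryRead(String node, Object tx) {
        Object w = writers.get(node);
        if (w != null && w != tx) return false;
        readers.computeIfAbsent(node, n -> new HashSet<>()).add(tx);
        return true;
    }

    private boolean tryWrite(String node, Object tx) {
        Object w = writers.get(node);
        if (w != null) return w == tx;
        Set<Object> r = readers.getOrDefault(node, new HashSet<>());
        for (Object reader : r) {
            if (reader != tx) return false; // another transaction is reading
        }
        writers.put(node, tx);
        return true;
    }

    /** Read-locks every ancestor of fqn and write-locks fqn itself. */
    boolean lockForWrite(Object tx, String fqn) {
        List<String> path = new ArrayList<>();
        StringBuilder sb = new StringBuilder();
        for (String part : fqn.split("/")) {
            if (part.isEmpty()) continue;
            sb.append('/').append(part);
            path.add(sb.toString());
        }
        for (int i = 0; i < path.size(); i++) {
            boolean leaf = i == path.size() - 1;
            boolean ok = (leaf || exclusiveOnly)
                    ? tryWrite(path.get(i), tx)
                    : tryRead(path.get(i), tx);
            if (!ok) return false; // a real cache would wait or time out here
        }
        return true;
    }
}
```

With read-write locks both transactions succeed; with exclusive locks only, Tx2 is blocked at "/a".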

By default, JBoss Cache uses pessimistic locking. Locking is not exposed directly to the user. Instead, the user configures a transaction isolation level, which determines the locking behaviour.

JBoss Cache supports the following transaction isolation levels, analogous to database ACID isolation levels. A user can configure an instance-wide isolation level of NONE, READ_UNCOMMITTED, READ_COMMITTED, REPEATABLE_READ, or SERIALIZABLE. REPEATABLE_READ is the default isolation level used.

NONE. No transaction support is needed. There is no locking at this level, i.e., users will have to manage data integrity themselves. Implementations use no locks.

READ_UNCOMMITTED. Data can be read at any time, while write operations are exclusive. Note that this level doesn't prevent the so-called "dirty read", where data modified in Tx1 can be read in Tx2 before Tx1 commits. In other words, given the following sequence:

Tx1: W
Tx2: R (before Tx1 commits)

this isolation level will not prevent Tx2's read operation. Implementations typically use an exclusive lock for writes, while reads don't need to acquire a lock.

READ_COMMITTED. Data can be read any time as long as there is no write. This level prevents the dirty read, but it doesn't prevent the so-called "non-repeatable read", where a thread that reads the same data twice can get different results. For example, given the following sequence:

Tx1: R
Tx2: W
Tx1: R

the second read in Tx1 will produce a different result.

Implementations usually use a read-write lock: reads succeed in acquiring the lock when there are only readers, writers have to wait until there are no more readers holding the lock, and readers are blocked from acquiring the lock until there are no more writers holding it. Reads typically release the read lock when done, so a subsequent read of the same data has to re-acquire a read lock; this leads to non-repeatable reads, where two reads of the same data might return different values. Note that the write lock applies regardless of transaction state (whether the transaction has been committed or not).

REPEATABLE_READ. Data can be read while there is no write and vice versa. This level prevents the "non-repeatable read", but it does not completely prevent the so-called "phantom read", where new data can be inserted into the tree by another transaction. Implementations typically use a read-write lock. This is the default isolation level.

SERIALIZABLE. Data access is synchronized with exclusive locks. Only one writer or reader can hold the lock at any given time. Locks are released at the end of the transaction. This level is regarded as very poor for performance and for thread/transaction concurrency.
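The practical difference between the levels above is mostly whether a read takes a lock and how long it keeps it. The following Java sketch encodes that reading of the descriptions; it is a simplified model, not JBoss Cache's implementation. Isolation and GuardedValue are hypothetical names, writes commit immediately (so dirty reads are not modelled), and methods return null or false where a real cache would block.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical summary of the read/write locking described for each level.
enum Isolation {
    NONE(false, false, false),
    READ_UNCOMMITTED(false, false, true),
    READ_COMMITTED(true, false, true),
    REPEATABLE_READ(true, true, true),
    SERIALIZABLE(true, true, true);

    final boolean readsLock;    // does a read acquire a lock at all?
    final boolean holdReadLock; // is the read lock kept until the tx ends?
    final boolean writesLock;   // do writes need an exclusive lock?

    Isolation(boolean readsLock, boolean holdReadLock, boolean writesLock) {
        this.readsLock = readsLock;
        this.holdReadLock = holdReadLock;
        this.writesLock = writesLock;
    }
}

// One cached value guarded by transaction-owned read locks.
class GuardedValue {
    private final Isolation level;
    private final Set<Object> readers = new HashSet<>();
    private String value;

    GuardedValue(Isolation level, String initial) {
        this.level = level;
        this.value = initial;
    }

    private boolean othersHoldReadLocks(Object tx) {
        for (Object r : readers) {
            if (r != tx) return true;
        }
        return false;
    }

    String read(Object tx) {
        if (!level.readsLock) return value;              // NONE, READ_UNCOMMITTED
        if (level == Isolation.SERIALIZABLE && othersHoldReadLocks(tx)) {
            return null;                                 // reads are exclusive too
        }
        readers.add(tx);
        String v = value;
        if (!level.holdReadLock) readers.remove(tx);     // READ_COMMITTED releases at once
        return v;
    }

    boolean writeAndCommit(Object tx, String newValue) {
        if (level.writesLock && othersHoldReadLocks(tx)) return false;
        value = newValue;
        return true;
    }

    void endTransaction(Object tx) { readers.remove(tx); }
}
```

Under READ_COMMITTED, Tx2 can write between Tx1's two reads, so Tx1 sees different values; under REPEATABLE_READ, Tx1's retained read lock blocks Tx2 until Tx1 ends.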

By default, before inserting a new node into the tree or removing an existing node from the tree, JBoss Cache will only attempt to acquire a read lock on the node's parent. This approach does not treat child nodes as an integral part of a parent node's state. It allows greater concurrency if nodes are frequently added or removed, but at the cost of reduced correctness. For use cases where greater correctness is necessary, JBoss Cache provides a configuration option, LockParentForChildInsertRemove. If this is set to true, insertions and removals of child nodes require the acquisition of a write lock on the parent node.

In addition to the above, in version 2.1.0 and above, JBoss Cache offers the ability to override this configuration on a per-node basis. See Node.setLockForChildInsertRemove() and its corresponding javadocs for details.
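The decision described in the two paragraphs above (a cache-wide LockParentForChildInsertRemove flag, optionally overridden per node) can be sketched as follows. This is a hypothetical model: ParentLockPolicy and setNodeOverride are illustrative names only. It assumes that a per-node setting, when present, takes precedence over the cache-wide one, which is how the text describes the override.

```java
import java.util.HashMap;
import java.util.Map;

// Which lock is taken on a parent before inserting or removing a child.
enum LockType { READ, WRITE }

// Hypothetical model of the cache-wide flag plus per-node overrides.
class ParentLockPolicy {
    private final boolean lockParentForChildInsertRemove; // cache-wide setting
    private final Map<String, Boolean> perNodeOverride = new HashMap<>();

    ParentLockPolicy(boolean lockParentForChildInsertRemove) {
        this.lockParentForChildInsertRemove = lockParentForChildInsertRemove;
    }

    /** Illustrative counterpart of a per-node setting such as Node.setLockForChildInsertRemove(). */
    void setNodeOverride(String parentFqn, boolean lockParent) {
        perNodeOverride.put(parentFqn, lockParent);
    }

    LockType parentLockFor(String parentFqn) {
        Boolean override = perNodeOverride.get(parentFqn);
        boolean lockParent = (override != null) ? override : lockParentForChildInsertRemove;
        // WRITE: child inserts/removes under this parent are serialized.
        // READ: concurrent inserts/removes under this parent are allowed.
        return lockParent ? LockType.WRITE : LockType.READ;
    }
}
```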

The motivation for optimistic locking is to improve concurrency. When many threads contend for access to the data tree, it can be inefficient to lock portions of the tree (for reading or writing) for the entire duration of a transaction, as we do in pessimistic locking. Optimistic locking allows for greater concurrency of threads and transactions by using a technique called data versioning, explained below. Note that isolation levels (if configured) are ignored if optimistic locking is enabled.

Optimistic locking treats all method calls as transactional [7] . Even if you do not invoke a call within the scope of an ongoing transaction, JBoss Cache creates an implicit transaction and commits this transaction when the invocation completes. Each transaction maintains a transaction workspace, which contains a copy of the data used within the transaction.

For example, if a transaction calls cache.getRoot().getChild(Fqn.fromString("/a/b/c")), nodes a, b and c are copied from the main data tree into the workspace. The data is versioned, and all calls in the transaction work on the copy of the data rather than the actual data. When the transaction commits, its workspace is merged back into the underlying tree by matching versions. If there is a version mismatch (such as when the actual data tree has a higher version than the workspace, perhaps because another transaction accessed the same data, changed it and committed before the first transaction could finish), the commit fails and the transaction throws a RollbackException.
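The workspace-and-version mechanism just described can be illustrated with a compact, single-threaded Java model. VersionedTree, Workspace and VersionConflictException are hypothetical names. The exception stands in for the RollbackException mentioned above, so the sketch runs without a JTA jar. A real cache would also briefly lock nodes while copying them and while merging; that step is omitted here.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of optimistic locking with data versioning.
class VersionedTree {
    static final class Versioned {
        final String value;
        final long version;
        Versioned(String value, long version) { this.value = value; this.version = version; }
    }

    /** Thrown on a version mismatch; stands in for a RollbackException. */
    static final class VersionConflictException extends RuntimeException {
        VersionConflictException(String msg) { super(msg); }
    }

    private static final Versioned ABSENT = new Versioned(null, 0);
    private final Map<String, Versioned> data = new HashMap<>();

    /** A transaction's private workspace: copied nodes plus pending writes. */
    final class Workspace {
        private final Map<String, Versioned> copies = new HashMap<>();
        private final Map<String, String> writes = new HashMap<>();

        String get(String fqn) {
            if (writes.containsKey(fqn)) return writes.get(fqn);
            // Copy the node (and its version) into the workspace on first access.
            return copies.computeIfAbsent(fqn, k -> data.getOrDefault(k, ABSENT)).value;
        }

        void put(String fqn, String value) {
            get(fqn); // make sure the base version is recorded
            writes.put(fqn, value);
        }

        /** Validates every copied version, then merges writes with bumped versions. */
        void commit() {
            for (Map.Entry<String, Versioned> e : copies.entrySet()) {
                long current = data.getOrDefault(e.getKey(), ABSENT).version;
                if (current != e.getValue().version) {
                    throw new VersionConflictException("stale version for " + e.getKey());
                }
            }
            for (Map.Entry<String, String> w : writes.entrySet()) {
                long base = copies.get(w.getKey()).version;
                data.put(w.getKey(), new Versioned(w.getValue(), base + 1));
            }
        }
    }

    Workspace begin() { return new Workspace(); }
}
```

If two workspaces read the same node and the second one commits first, the first one's commit fails validation instead of silently overwriting the newer data.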

Optimistic locking uses the same locks described above, but the locks are only held for a very short duration: at the start of a transaction to build a workspace, and when the transaction commits and has to merge data back into the tree.

So while optimistic locking may occasionally fail if version validations fail or may run slightly slower than pessimistic locking due to the inevitable overhead and extra processing of maintaining workspaces, versioned data and validating on commit, it does buy you a near-SERIALIZABLE degree of data integrity while maintaining a very high level of concurrency.

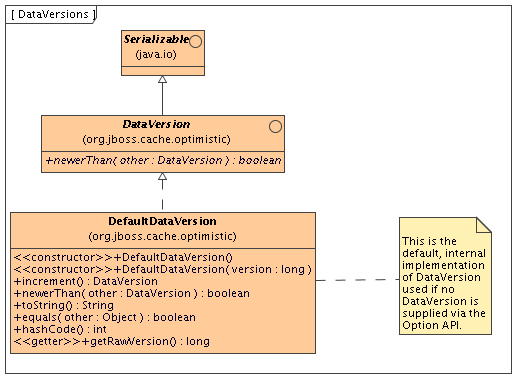

Optimistic locking makes use of the DataVersion interface (and an internal, default DefaultDataVersion implementation) to keep track of node versioning. In certain cases, where cached data is an in-memory representation of data from an external source such as a database, it makes sense to align the versions used in JBoss Cache with the versions used externally. As such, using the options API, it is possible to set the DataVersion you wish to use on a per-invocation basis, allowing you to implement the DataVersion interface to hold the versioning information obtained externally before putting your data into the cache.
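The idea of an externally sourced version can be sketched with a local stand-in interface. ItemVersion is hypothetical and only mirrors the notion of comparing two versions; consult the DataVersion javadocs for the real contract and for how to pass a version through the options API. RowVersion assumes, for illustration, that the database exposes a numeric version column per row.

```java
// Hypothetical stand-in for the DataVersion idea: a version that can be
// compared with another version of the same kind.
interface ItemVersion {
    boolean newerThan(ItemVersion other);
}

// Wraps a version number read from an external source, e.g. an
// assumed numeric version column on a database row.
final class RowVersion implements ItemVersion {
    private final long rowVersion;

    RowVersion(long rowVersion) { this.rowVersion = rowVersion; }

    @Override
    public boolean newerThan(ItemVersion other) {
        if (!(other instanceof RowVersion)) {
            throw new IllegalArgumentException("cannot compare with " + other);
        }
        return rowVersion > ((RowVersion) other).rowVersion;
    }

    @Override
    public String toString() { return "RowVersion(" + rowVersion + ")"; }
}

// Illustrative check: only apply an incoming value if its external
// version is newer than the one already cached.
final class VersionGate {
    private VersionGate() {}

    static boolean shouldApply(ItemVersion incoming, ItemVersion cached) {
        return cached == null || incoming.newerThan(cached);
    }
}
```

Reusing the database's own version number means that a stale row loaded into the cache can be recognised as stale by a plain comparison, without the cache inventing a separate counter.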

Optimistic locking is enabled by using the NodeLockingScheme XML attribute, and setting it to "OPTIMISTIC":

...
<!--
   Node locking scheme:
      OPTIMISTIC
      PESSIMISTIC (default)
-->
<attribute name="NodeLockingScheme">OPTIMISTIC</attribute>
...

If you have an eviction policy defined along with optimistic locking, it is generally advisable to set the eviction policy's minTimeToLiveSeconds parameter slightly greater than the transaction timeout value set in your transaction manager. This ensures that data versions in the cache are not evicted while transactions are in progress [8].
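The sizing rule above reduces to simple arithmetic. This hypothetical helper derives a minTimeToLiveSeconds value from the transaction timeout plus a safety margin. The margin is an assumption you would choose yourself, and the helper is not part of any JBoss Cache API.

```java
// Hypothetical helper for sizing an eviction policy's minTimeToLiveSeconds
// relative to the transaction manager's timeout.
final class EvictionTimeoutCheck {
    private EvictionTimeoutCheck() {}

    /** Suggests a minTimeToLiveSeconds slightly above the transaction timeout. */
    static int suggestMinTimeToLive(int txTimeoutSeconds, int marginSeconds) {
        if (txTimeoutSeconds <= 0 || marginSeconds <= 0) {
            throw new IllegalArgumentException("timeout and margin must be positive");
        }
        return txTimeoutSeconds + marginSeconds;
    }

    /** True if data versions outlive any transaction that could still be using them. */
    static boolean isSafe(int minTimeToLiveSeconds, int txTimeoutSeconds) {
        return minTimeToLiveSeconds > txTimeoutSeconds;
    }
}
```

For a 300-second transaction timeout and a 10-second margin, the helper suggests 310 seconds. A value equal to the timeout is flagged as unsafe, because a node could be evicted just as a long-running transaction tries to validate it.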

JBoss Cache can be configured to use and participate in JTA-compliant transactions. Alternatively, if transaction support is disabled, it is equivalent to setting AutoCommit to on, where modifications are potentially [9] replicated after every change (if replication is enabled).

What JBoss Cache does on every incoming call is:

1. Retrieve the current javax.transaction.Transaction associated with the thread.
2. If not already done, register a javax.transaction.Synchronization with the transaction manager to be notified when a transaction commits or is rolled back.
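The two per-call steps above can be modelled as follows, as a sketch under stated assumptions. TxSync and FakeTransaction are hypothetical stand-ins for javax.transaction.Synchronization and javax.transaction.Transaction, used so the example runs without a JTA implementation. TxAwareCache is likewise illustrative, not JBoss Cache's interceptor code.

```java
import java.util.ArrayList;
import java.util.IdentityHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for javax.transaction.Synchronization.
interface TxSync {
    void beforeCompletion();
    void afterCompletion(boolean committed);
}

// Hypothetical stand-in for javax.transaction.Transaction.
final class FakeTransaction {
    private final List<TxSync> syncs = new ArrayList<>();

    void registerSynchronization(TxSync s) { syncs.add(s); }

    void commit() {
        for (TxSync s : syncs) s.beforeCompletion();
        for (TxSync s : syncs) s.afterCompletion(true);
    }

    int syncCount() { return syncs.size(); }
}

// Models the per-call steps: (1) look up the thread's transaction and
// (2) register a synchronization the first time this transaction is seen.
final class TxAwareCache {
    private final ThreadLocal<FakeTransaction> currentTx = new ThreadLocal<>();
    private final Map<FakeTransaction, Boolean> registered = new IdentityHashMap<>();
    final List<String> log = new ArrayList<>();

    void begin(FakeTransaction tx) { currentTx.set(tx); }

    void put(String fqn, String value) {
        FakeTransaction tx = currentTx.get();                           // step 1
        if (tx != null && registered.put(tx, Boolean.TRUE) == null) {   // step 2, once per tx
            tx.registerSynchronization(new TxSync() {
                public void beforeCompletion() { log.add("prepare"); }
                public void afterCompletion(boolean committed) {
                    log.add(committed ? "commit" : "rollback");
                    registered.remove(tx);
                }
            });
        }
        log.add("put " + fqn + "=" + value);
    }
}
```

Two calls within the same transaction register only one synchronization, and its callbacks fire once when the transaction completes.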

In order to do this, the cache has to be provided with a reference to the environment's javax.transaction.TransactionManager. This is usually done by configuring the cache with the class name of an implementation of the TransactionManagerLookup interface. When the cache starts, it will create an instance of this class and invoke its getTransactionManager() method, which returns a reference to the TransactionManager.
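The start-up step just described (instantiate a configured class by name, then ask it for the transaction manager) is a plain reflection pattern. In this sketch, TmHandle and TmLookup are hypothetical stand-ins for TransactionManager and TransactionManagerLookup, and InMemoryTmLookup is an illustrative implementation. A real lookup would typically locate the manager in the application server, for example via JNDI.

```java
// Hypothetical stand-in for a TransactionManager.
interface TmHandle { String name(); }

// Hypothetical stand-in for the TransactionManagerLookup interface.
interface TmLookup {
    TmHandle getTransactionManager();
}

// Illustrative lookup implementation returning an in-memory handle.
class InMemoryTmLookup implements TmLookup {
    public TmHandle getTransactionManager() { return () -> "in-memory TM"; }
}

final class CacheStarter {
    private CacheStarter() {}

    /** Instantiates the configured lookup class by name and asks it for the TM. */
    static TmHandle resolveTransactionManager(String lookupClassName) {
        try {
            Class<?> cls = Class.forName(lookupClassName);
            TmLookup lookup = (TmLookup) cls.getDeclaredConstructor().newInstance();
            return lookup.getTransactionManager();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("cannot instantiate " + lookupClassName, e);
        }
    }
}
```

Configuring a class name rather than an object keeps the cache configuration declarative (it can live in XML), at the cost of requiring a public-style no-argument constructor on the lookup class.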

JBoss Cache ships with JBossTransactionManagerLookup and GenericTransactionManagerLookup. The JBossTransactionManagerLookup is able to bind to a running JBoss AS instance and retrieve a TransactionManager, while the GenericTransactionManagerLookup is able to bind to most popular Java EE application servers and provide the same functionality. A dummy implementation, DummyTransactionManagerLookup, is also provided, primarily for unit tests. Being a dummy, it is intended only for demo and testing purposes and is not recommended for production use.

An alternative to configuring a TransactionManagerLookup is to programmatically inject a reference to the TransactionManager into the Configuration object's RuntimeConfig element:

TransactionManager tm = getTransactionManager(); // placeholder: obtain the TM however your environment exposes it

cache.getConfiguration().getRuntimeConfig().setTransactionManager(tm);

Injecting the TransactionManager is the recommended approach when the Configuration is built by some sort of IOC container that already has a reference to the TM.

When the transaction commits, we initiate either a one-phase or a two-phase commit protocol. See replicated caches and transactions for details.
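For readers unfamiliar with the two protocols named above, here is a generic textbook sketch of one-phase versus two-phase commit. It is not JBoss Cache's replication code, and when the cache chooses one protocol over the other is covered in the section referenced above. Participant, RecordingParticipant and CommitCoordinator are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical participant in a commit protocol, e.g. a remote cache instance.
interface Participant {
    boolean prepare(); // vote: true means "ready to commit"
    void commit();
    void rollback();
}

// Records the calls it receives, for demonstration purposes.
class RecordingParticipant implements Participant {
    final boolean vote;
    final List<String> events = new ArrayList<>();

    RecordingParticipant(boolean vote) { this.vote = vote; }

    public boolean prepare() { events.add("prepare"); return vote; }
    public void commit() { events.add("commit"); }
    public void rollback() { events.add("rollback"); }
}

final class CommitCoordinator {
    private CommitCoordinator() {}

    /** One-phase: tell every participant to commit, with no voting round. */
    static void onePhase(List<? extends Participant> ps) {
        for (Participant p : ps) p.commit();
    }

    /** Two-phase: commit only if every participant votes yes during prepare. */
    static boolean twoPhase(List<? extends Participant> ps) {
        for (Participant p : ps) {
            if (!p.prepare()) {
                for (Participant q : ps) q.rollback(); // any "no" vote aborts everyone
                return false;
            }
        }
        for (Participant p : ps) p.commit();
        return true;
    }
}
```

The extra prepare round costs one more message exchange per participant, but it lets every participant refuse before anything is made permanent.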

[7] Because of this requirement, you must always have a transaction manager configured when using optimistic locking.

[8] See JBCACHE-1155

[9] Depending on whether interval-based asynchronous replication is used