Preface

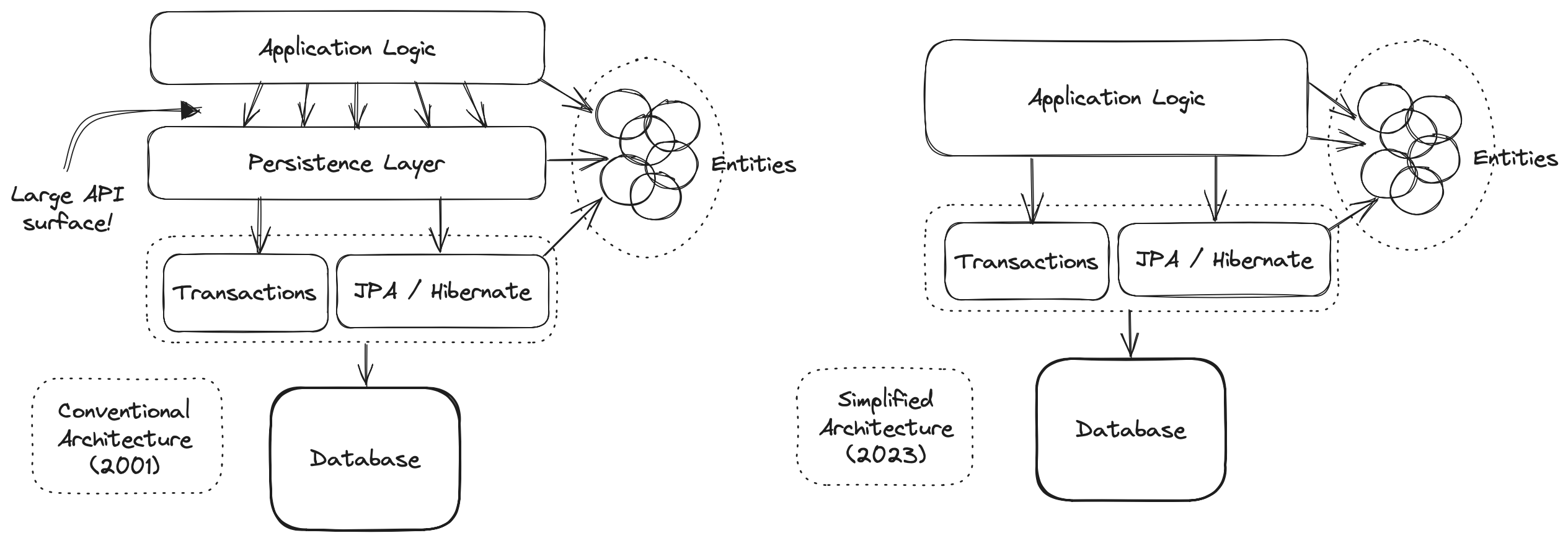

Hibernate 6 was a major redesign of the world’s most popular and feature-rich ORM solution. The redesign touched almost every subsystem of Hibernate, including the APIs, mapping annotations, and the query language. This new Hibernate was suddenly more powerful, more robust, more portable, and more type safe.

Hibernate 7 builds on this foundation, adds support for JPA 3.2, and introduces Hibernate Data Repositories, an implementation of the Jakarta Data specification. Taken together, these enhancements yield a level of compile-time type safety—and resulting developer productivity—which was previously impossible. Hibernate Data Repositories offers truly seamless integration of the ORM solution with the persistence layer, obsoleting older add-on repository frameworks.

Hibernate ORM and Hibernate Reactive are core components of Quarkus 3, the most exciting new environment for cloud-native development in Java, and Hibernate remains the persistence solution of choice for almost every major Java framework or server.

Unfortunately, the changes in Hibernate 6 also obsoleted much of the information about Hibernate that’s available in books, in blog posts, and on stackoverflow.

This guide is an up-to-date, high-level discussion of the current feature set and recommended usage. It does not attempt to cover every feature and should be used in conjunction with other documentation:

-

Hibernate’s extensive Javadoc,

-

the Hibernate User Guide.

|

The Hibernate User Guide includes detailed discussions of most aspects of Hibernate. But with so much information to cover, readability is difficult to achieve, and so it’s most useful as a reference. Where necessary, we’ll provide links to relevant sections of the User Guide. |

1. Introduction

Hibernate is usually described as a library that makes it easy to map Java classes to relational database tables. But this formulation does no justice to the central role played by the relational data itself. So a better description might be:

Here the relational data is the focus, along with the importance of type safety. The goal of object/relational mapping (ORM) is to eliminate fragile and untypesafe code, and make large programs easier to maintain in the long run.

ORM takes the pain out of persistence by relieving the developer of the need to hand-write tedious, repetitive, and fragile code for flattening graphs of objects to database tables and rebuilding graphs of objects from flat SQL query result sets. Even better, ORM makes it much easier to tune performance later, after the basic persistence logic has already been written.

|

A perennial question is: should I use ORM, or plain SQL? The answer is usually: use both. JPA and Hibernate were designed to work in conjunction with handwritten SQL. You see, most programs with nontrivial data access logic will benefit from the use of ORM at least somewhere. But if Hibernate is making things more difficult, for some particularly tricky piece of data access logic, the only sensible thing to do is to use something better suited to the problem! Just because you’re using Hibernate for persistence doesn’t mean you have to use it for everything. |

Let’s underline the important point here: the goal of ORM is not to hide SQL or the relational model. After all, Hibernate’s query language is nothing more than an object-oriented dialect of ANSI SQL.

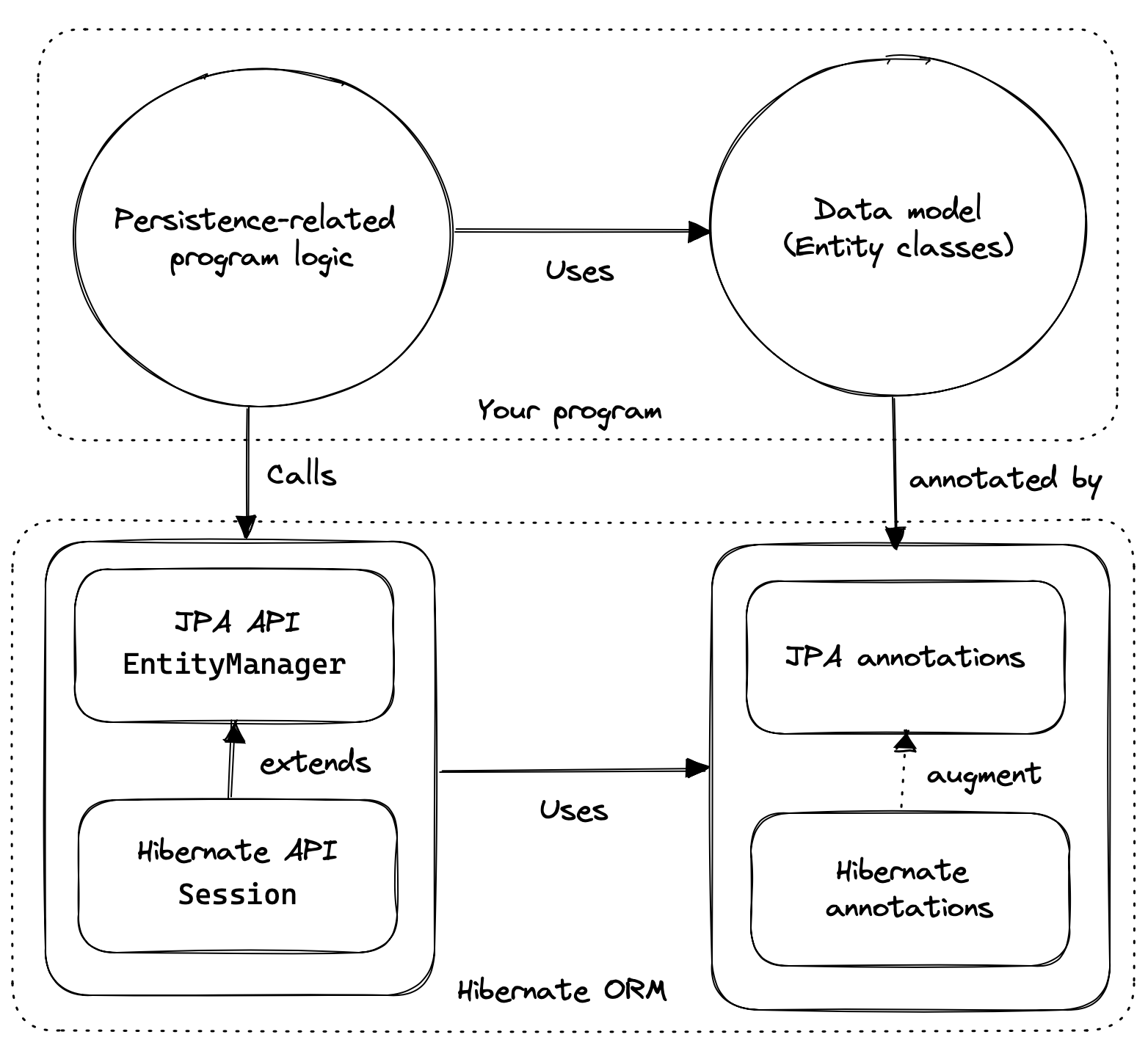

Developers often ask about the relationship between Hibernate and JPA, so let’s take a short detour into some history.

1.1. Hibernate and JPA

Hibernate was the inspiration behind the Java (now Jakarta) Persistence API, or JPA, and includes a complete implementation of the latest revision of this specification.

We can think of the API of Hibernate in terms of three basic elements:

-

an implementation of the JPA-defined APIs, most importantly, of the interfaces

EntityManagerFactoryandEntityManager, and of the JPA-defined O/R mapping annotations, -

a native API exposing the full set of available functionality, centered around the interfaces

SessionFactory, which extendsEntityManagerFactory, andSession, which extendsEntityManager, and -

a set of mapping annotations which augment the O/R mapping annotations defined by JPA, and which may be used with the JPA-defined interfaces, or with the native API.

Hibernate also offers a range of SPIs for frameworks and libraries which extend or integrate with Hibernate, but we’re not interested in any of that stuff here.

As an application developer, you must decide whether to:

-

write your program in terms of

SessionandSessionFactory, or -

maximize portability to other implementations of JPA by, wherever reasonable, writing code in terms of

EntityManagerandEntityManagerFactory, falling back to the native APIs only where necessary.

Whichever path you take, you will use the JPA-defined mapping annotations most of the time, and the Hibernate-defined annotations for more advanced mapping problems.

|

You might wonder if it’s possible to develop an application using only JPA-defined APIs, and, indeed, that’s possible in principle. JPA is a great baseline that really nails the basics of the object/relational mapping problem. But without the native APIs, and extended mapping annotations, you miss out on much of the power of Hibernate. |

Since Hibernate existed before JPA, and since JPA was modelled on Hibernate, we unfortunately have some competition and duplication in naming between the standard and native APIs. For example:

| Hibernate | JPA |

|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Typically, the Hibernate-native APIs offer something a little extra that’s missing in JPA, so this isn’t exactly a flaw. But it’s something to watch out for.

1.2. Writing Java code with Hibernate

If you’re completely new to Hibernate and JPA, you might already be wondering how the persistence-related code is structured.

Well, typically, our persistence-related code comes in two layers:

-

a representation of our data model in Java, which takes the form of a set of annotated entity classes, and

-

a larger number of functions which interact with Hibernate’s APIs to perform the persistence operations associated with our various transactions.

The first part, the data or "domain" model, is usually easier to write, but doing a great and very clean job of it will strongly affect your success in the second part.

Most people implement the domain model as a set of what we used to call "Plain Old Java Objects", that is, as simple Java classes with no direct dependencies on technical infrastructure, nor on application logic which deals with request processing, transaction management, communications, or interaction with the database.

|

Take your time with this code, and try to produce a Java model that’s as close as reasonable to the relational data model. Avoid using exotic or advanced mapping features when they’re not really needed.

When in the slightest doubt, map a foreign key relationship using |

The second part of the code is much trickier to get right. This code must:

-

manage transactions and sessions,

-

interact with the database via the Hibernate session,

-

publish CDI events and send JMS messages,

-

fetch and prepare data needed by the UI, and

-

handle failures.

|

Responsibility for transaction and session management, and for recovery from certain kinds of failure, is best handled in some sort of framework code. |

We’re going to come back soon to the thorny question of how this persistence logic should be organized, and how it should fit into the rest of the system.

1.3. Hello, Hibernate

Before we get deeper into the weeds, we’ll quickly present a basic example program that will help you get started if you don’t already have Hibernate integrated into your project.

We begin with a simple Gradle build file:

build.gradleplugins {

id 'java'

}

group = 'org.example'

version = '1.0-SNAPSHOT'

repositories {

mavenCentral()

}

dependencies {

// the GOAT ORM

implementation 'org.hibernate.orm:hibernate-core:7.0.10.Final'

// Hibernate Processor

annotationProcessor 'org.hibernate.orm:hibernate-processor:7.0.10.Final'

// Hibernate Validator

implementation 'org.hibernate.validator:hibernate-validator:8.0.1.Final'

implementation 'org.glassfish:jakarta.el:4.0.2'

// Agroal connection pool

runtimeOnly 'org.hibernate.orm:hibernate-agroal:7.0.10.Final'

runtimeOnly 'io.agroal:agroal-pool:2.5'

// logging via Log4j

runtimeOnly 'org.apache.logging.log4j:log4j-core:2.24.1'

// H2 database

runtimeOnly 'com.h2database:h2:2.3.232'

}Only the first of these dependencies is absolutely required to run Hibernate.

Next, we’ll add a logging configuration file for log4j:

log4j2.propertiesrootLogger.level = info

rootLogger.appenderRefs = console

rootLogger.appenderRef.console.ref = console

# SQL statements (set level=debug to enable)

logger.hibernate.name = org.hibernate.SQL

logger.hibernate.level = info

# JDBC parameter binding (set level=trace to enable)

logger.jdbc-bind.name=org.hibernate.orm.jdbc.bind

logger.jdbc-bind.level=info

# JDBC result set extraction (set level=trace to enable)

logger.jdbc-extract.name=org.hibernate.orm.jdbc.extract

logger.jdbc-extract.level=info

# JDBC batching (set level=trace to enable)

logger.batch.name=org.hibernate.orm.jdbc.batch

logger.batch.level=info

# direct log output to the console

appender.console.name = console

appender.console.type = Console

appender.console.layout.type = PatternLayout

appender.console.layout.pattern = %highlight{[%p]} %m%nNow we need some Java code. We begin with our entity class:

Book.javapackage org.hibernate.example;

import jakarta.persistence.Entity;

import jakarta.persistence.Id;

import jakarta.validation.constraints.NotNull;

@Entity

class Book {

@Id

String isbn;

@NotNull

String title;

Book() {}

Book(String isbn, String title) {

this.isbn = isbn;

this.title = title;

}

}Finally, let’s see code which configures and instantiates Hibernate and asks it to persist and query the entity. Don’t worry if this makes no sense at all right now. It’s the job of the rest of this Short Guide to make all this crystal clear.

Main.javapackage org.hibernate.example;

import org.hibernate.jpa.HibernatePersistenceConfiguration;

import static java.lang.System.out;

public class Main {

public static void main(String[] args) {

var sessionFactory =

new HibernatePersistenceConfiguration("Bookshelf")

.managedClass(Book.class)

// use H2 in-memory database

.jdbcUrl("jdbc:h2:mem:db1")

.jdbcCredentials("sa", "")

// set the Agroal connection pool size

.jdbcPoolSize(16)

// display SQL in console

.showSql(true, true, true)

.createEntityManagerFactory();

// export the inferred database schema

sessionFactory.getSchemaManager().create(true);

// persist an entity

sessionFactory.inTransaction(session -> {

session.persist(new Book("9781932394153", "Hibernate in Action"));

});

// query data using HQL

sessionFactory.inSession(session -> {

out.println(session.createSelectionQuery("select isbn||': '||title from Book").getSingleResult());

});

// query data using criteria API

sessionFactory.inSession(session -> {

var builder = sessionFactory.getCriteriaBuilder();

var query = builder.createQuery(String.class);

var book = query.from(Book.class);

query.select(builder.concat(builder.concat(book.get(Book_.isbn), builder.literal(": ")),

book.get(Book_.title)));

out.println(session.createSelectionQuery(query).getSingleResult());

});

}

}In practice, we never access the database directly from a main() method.

So now let’s talk about how to organize persistence logic in a real system.

The rest of this chapter is not compulsory.

If you’re itching for more details about Hibernate itself, you’re quite welcome to skip straight to the next chapter, and come back later.

1.4. Entities

A class in the domain model which directly represents a relational database table is called an entity. Entity classes are central to object persistence and to object/relational mapping. They’re also, typically, central players in the business logic of our application program. Entities represent the things in our business domain. This makes them very important objects indeed!

Given how much weight an entity already bears due to its very nature, we need to think carefully before weighing it down with too many additional responsibilities.

In keeping with our commitment to anti-dogmatism, we would like to add the following important caveat to the discussion in the previous callout.

For now, we’re going to assume that entities are implemented as plain Java classes.

1.5. Stateful and stateless sessions

It should be very clear from the example code above, that the session is also a very important object.

It exposes basic operations like persist() and createQuery(), and so it’s our first port of call when we want to interact with the database via Hibernate.

In the code we just saw, we’ve used a stateful session.

Later, we’ll learn about the idea of a persistence context.

Oversimplifying for now, you can think of it as a cache of data which has been read in the current transaction.

Thus, in the architecture of Hibernate, it’s sometimes called the first-level cache.

Each stateful session — that is, every Hibernate Session, and every JPA EntityManager — has its own persistence context.

But stateful sessions have never been the only possibility.

The StatelessSession interface offers a way to interact with Hibernate without going through a persistence context.

However, the programming model is somewhat different.

As of Hibernate 7, a key decision for any new project is which of these programming models to take as a baseline.

Fortunately, the two models aren’t mutually exclusive.

This is a friendly competition, where the two APIs are designed to complement each other.

Even if we decide to use StatefulSession most of the time, we can still use a StatelessSession wherever it’s more convenient.

On the other hand, if you decide to adopt Jakarta Data, the decision is made for you: repositories in Jakarta Data 1.0 are always stateless, and in Hibernate Data Repositories a repository is backed by a StatelessSession.

|

But now we’ve got just a little bit ahead of ourselves. In the next section taking we’re taking a journey which might — but definitely doesn’t necessarily — end at the idea of a "repository".

1.6. Organizing persistence logic

In a real program, persistence logic like the code shown above is usually interleaved with other sorts of code, including logic:

-

implementing the rules of the business domain, or

-

for interacting with the user.

Therefore, many developers quickly—even too quickly, in our opinion—reach for ways to isolate the persistence logic into some sort of separate architectural layer. We’re going to ask you to suppress this urge for now.

We prefer a bottom-up approach to organizing our code. We like to start thinking about methods and functions, not about architectural layers and container-managed objects.

To illustrate the sort of approach to code organization that we advocate, let’s consider a service which queries the database using HQL or SQL. We might start with something like this, a mix of UI and persistence logic:

@Path("/")

@Produces("application/json")

public class BookResource {

private final SessionFactory sessionfactory = .... ;

@GET

@Path("book/{isbn}")

public Book getBook(String isbn) {

var book = sessionFactory.fromTransaction(session -> session.find(Book.class, isbn));

return book == null ? Response.status(404).build() : book;

}

}Indeed, we might also finish with something like that—it’s quite hard to identify anything concretely wrong with the code above, and for such a simple case it seems really difficult to justify making this code more complicated by introducing additional objects.

One very nice aspect of this code, which we wish to draw your attention to, is that session and transaction management is handled by generic "framework" code, just as we already recommended above.

In this case, we’re using the fromTransaction() method, which happens to come built in to Hibernate.

But you might prefer to use something else, for example:

-

in a container environment like Jakarta EE or Quarkus, container-managed transactions and container-managed persistence contexts, or

-

something you write yourself.

The important thing is that calls like createEntityManager() and getTransaction().begin() don’t belong in regular program logic, because it’s tricky and tedious to get the error handling correct.

Let’s now consider a slightly more complicated case.

@Path("/")

@Produces("application/json")

public class BookResource {

private static final int RESULTS_PER_PAGE = 20;

private final SessionFactory sessionfactory = .... ;

@GET

@Path("books/{titlePattern}/{pageNumber:\\d+}")

public List<Book> findBooks(String titlePattern, int pageNumber) {

var page = Page.page(RESULTS_PER_PAGE, pageNumber);

var books =

sessionFactory.fromTransaction(session -> {

var findBooksByTitle = "from Book where title like ?1 order by title";

return session.createSelectionQuery(findBooksByTitle, Book.class)

.setParameter(1, titlePattern)

.setPage(page)

.getResultList();

});

return books.isEmpty() ? Response.status(404).build() : books;

}

}This is fine, and we won’t complain if you prefer to leave the code exactly as it appears above. But there’s one thing we could perhaps improve. We love super-short methods with single responsibilities, and there looks to be an opportunity to introduce one here. Let’s hit the code with our favorite thing, the Extract Method refactoring. We obtain:

static List<Book> findBooksTitled(Session session, String titlePattern, Page page) {

var findBooksByTitle = "from Book where title like ?1 order by title";

return session.createSelectionQuery(findBooksByTitle, Book.class)

.setParameter(1, titlePattern)

.setPage(page)

.getResultList();

}This is an example of a query method, a function which accepts arguments to the parameters of a HQL or SQL query, and executes the query, returning its results to the caller. And that’s all it does; it doesn’t orchestrate additional program logic, and it doesn’t perform transaction or session management.

It’s even better to specify the query string using the @NamedQuery annotation, so that Hibernate can validate the query at startup time, that is, when the SessionFactory is created, instead of when the query is first executed.

Indeed, since we included Hibernate Processor in our Gradle build, the query can even be validated at compile time.

We need a place to put the annotation, so let’s move our query method to a new class:

@CheckHQL // validate named queries at compile time

@NamedQuery(name = "findBooksByTitle",

query = "from Book where title like :title order by title")

class Queries {

static List<Book> findBooksTitled(Session session, String titlePattern, Page page) {

return session.createQuery(Queries_._findBooksByTitle_) //type safe reference to the named query

.setParameter("title", titlePattern)

.setPage(page)

.getResultList();

}

}Notice that our query method doesn’t attempt to hide the EntityManager from its clients.

Indeed, the client code is responsible for providing the EntityManager or Session to the query method.

The client code may:

-

obtain an

EntityManagerorSessionby callinginTransaction()orfromTransaction(), as we saw above, or, -

in an environment with container-managed transactions, it might obtain it via dependency injection.

Whatever the case, the code which orchestrates a unit of work usually just calls the Session or EntityManager directly, passing it along to helper methods like our query method if necessary.

@GET

@Path("books/{titlePattern}/{pageNumber:\\d+}")

public List<Book> findBooks(String titlePattern, int pageNumber) {

var page = Page.page(RESULTS_PER_PAGE, pageNumber);

var books =

sessionFactory.fromTransaction(session ->

// call handwritten query method

Queries.findBooksTitled(session, titlePattern, page));

return books.isEmpty() ? Response.status(404).build() : books;

}You might be thinking that our query method looks a bit boilerplatey.

That’s true, perhaps, but we’re much more concerned that it’s still not perfectly typesafe.

Indeed, for many years, the lack of compile-time checking for HQL queries and code which binds arguments to query parameters was our number one source of discomfort with Hibernate.

Here, the @CheckHQL annotation takes care of checking the query itself, but the call to setParameter() is still not type safe.

Fortunately, there’s now a great solution to both problems. Hibernate Processor is able to fill in the implementation of such query methods for us. This facility is the topic of a whole chapter of this introduction, so for now we’ll just leave you with one simple example.

Suppose we simplify Queries to just the following:

// a sort of proto-repository, this interface is never implemented

interface Queries {

// a HQL query method with a generated static "implementation"

@HQL("where title like :title order by title")

List<Book> findBooksTitled(String title, Page page);

}Then Hibernate Processor automatically produces an implementation of the method annotated @HQL in a class named Queries_.

We can call it just like we were previously calling our handwritten version:

@GET

@Path("books/{titlePattern}/{pageNumber:\\d+}")

public List<Book> findBooks(String titlePattern, int pageNumber) {

var page = Page.page(RESULTS_PER_PAGE, pageNumber);

var books =

sessionFactory.fromTransaction(session ->

// call the generated query method "implementation"

Queries_.findBooksTitled(session, titlePattern, page));

return books.isEmpty() ? Response.status(404).build() : books;

}In this case, the quantity of code eliminated is pretty trivial. The real value is in improved type safety. We now find out about errors in assignments of arguments to query parameters at compile time.

This is all quite nice so far, but at this point you’re probably wondering whether we could use dependency injection to obtain an instance of the Queries interface, and have this object take care of obtaining its own Session.

Well, indeed we can.

What we need to do is indicate the kind of session the Queries interface depends on, by adding a method to retrieve the session.

Observe, again, that we’re still not attempting to hide the Session from the client code.

// a true repository interface with generated implementation

interface Queries {

// declare the kind of session backing this repository

Session session();

// a HQL query method with a generated implementation

@HQL("where title like :title order by title")

List<Book> findBooksTitled(String title, Page page);

}The Queries interface is now considered a repository, and we may use CDI to inject the repository implementation generated by Hibernate Processor.

Also, since I guess we’re now working in some sort of container environment, we’ll let the container manage transactions for us.

@Inject Queries queries; // inject the repository

@GET

@Path("books/{titlePattern}/{pageNumber:\\d+}")

@Transactional

public List<Book> findBooks(String titlePattern, int pageNumber) {

var page = Page.page(RESULTS_PER_PAGE, pageNumber);

var books = queries.findBooksTitled(session, titlePattern, page); // call the repository method

return books.isEmpty() ? Response.status(404).build() : books;

}Alternatively, if CDI isn’t available, we may directly instantiate the generated repository implementation class using new Queries_(entityManager).

|

The Jakarta Data specification now formalizes this approach using standard annotations, and our implementation of this specification, Hibernate Data Repositories, is built into Hibernate Processor. You probably already have it available in your program. Unlike other repository frameworks, Hibernate Data Repositories offers something that plain JPA simply doesn’t have: full compile-time type safety for your queries. To learn more, please refer to Introducing Hibernate Data Repositories. |

Now that we have a rough picture of what our persistence logic might look like, it’s natural to ask how we should test our code.

1.7. Testing persistence logic

When we write tests for our persistence logic, we’re going to need:

-

a database, with

-

an instance of the schema mapped by our persistent entities, and

-

a set of test data, in a well-defined state at the beginning of each test.

It might seem obvious that we should test against the same database system that we’re going to use in production, and, indeed, we should certainly have at least some tests for this configuration. But on the other hand, tests which perform I/O are much slower than tests which don’t, and most databases can’t be set up to run in-process.

So, since most persistence logic written using Hibernate 6 is extremely portable between databases, it often makes good sense to test against an in-memory Java database. (H2 is the one we recommend.)

|

We do need to be careful here if our persistence code uses native SQL, or if it uses concurrency-management features like pessimistic locks. |

Whether we’re testing against our real database, or against an in-memory Java database, we’ll need to export the schema at the beginning of a test suite.

We usually do this when we create the Hibernate SessionFactory or JPA EntityManagerFactory, and so traditionally we’ve used a configuration property for this.

The JPA-standard property is jakarta.persistence.schema-generation.database.action.

For example, if we’re using PersistenceConfiguration to configure Hibernate, we could write:

configuration.property(PersistenceConfiguration.SCHEMAGEN_DATABASE_ACTION,

Action.SPEC_ACTION_DROP_AND_CREATE);Alternatively, we may use the new SchemaManager API to export the schema, just as we did above.

This option is especially convenient when writing tests.

sessionFactory.getSchemaManager().create(true);Since executing DDL statements is very slow on many databases, we don’t want to do this before every test. Instead, to ensure that each test begins with the test data in a well-defined state, we need to do two things before each test:

-

clean up any mess left behind by the previous test, and then

-

reinitialize the test data.

We may truncate all the tables, leaving an empty database schema, using the SchemaManager.

sessionFactory.getSchemaManager().truncate();After truncating tables, we might need to initialize our test data. We may specify test data in a SQL script, for example:

insert into Books (isbn, title) values ('9781932394153', 'Hibernate in Action')

insert into Books (isbn, title) values ('9781932394887', 'Java Persistence with Hibernate')

insert into Books (isbn, title) values ('9781617290459', 'Java Persistence with Hibernate, Second Edition')If we name this file import.sql, and place it in the root classpath, that’s all we need to do.

Otherwise, we need to specify the file in the configuration property jakarta.persistence.sql-load-script-source.

If we’re using PersistenceConfiguration to configure Hibernate, we could write:

configuration.property(AvailableSettings.JAKARTA_HBM2DDL_LOAD_SCRIPT_SOURCE,

"/org/example/test-data.sql");The SQL script will be executed every time export() or truncate() is called.

|

There’s another sort of mess a test can leave behind: cached data in the second-level cache. We recommend disabling Hibernate’s second-level cache for most sorts of testing. Alternatively, if the second-level cache is not disabled, then before each test we should call: |

Now, suppose you’ve followed our advice, and written your entities and query methods to minimize dependencies on "infrastructure", that is, on libraries other than JPA and Hibernate, on frameworks, on container-managed objects, and even on bits of your own system which are hard to instantiate from scratch. Then testing persistence logic is now straightforward!

You’ll need to:

-

bootstrap Hibernate and create a

SessionFactoryorEntityManagerFactoryat the beginning of your test suite (we’ve already seen how to do that), and -

create a new

SessionorEntityManagerinside each@Testmethod, usinginTransaction(), for example.

Actually, some tests might require multiple sessions. But be careful not to leak a session between different tests.

|

Another important test we’ll need is one which validates our O/R mapping annotations against the actual database schema. This is again the job of the schema management tooling, either: Or: This "test" is one which many people like to run even in production, when the system starts up. |

1.8. Overview

It’s now time to begin our journey toward actually understanding the code we saw earlier.

This introduction will guide you through the basic tasks involved in developing a program that uses Hibernate for persistence:

-

configuring and bootstrapping Hibernate, and obtaining an instance of

SessionFactoryorEntityManagerFactory, -

writing a domain model, that is, a set of entity classes which represent the persistent types in your program, and which map to tables of your database,

-

customizing these mappings when the model maps to a pre-existing relational schema,

-

using the

SessionorEntityManagerto perform operations which query the database and return entity instances, or which update the data held in the database, -

using Hibernate Processor to improve compile-time type-safety,

-

writing complex queries using the Hibernate Query Language (HQL) or native SQL, and, finally

-

tuning performance of the data access logic.

Naturally, we’ll start at the top of this list, with the least-interesting topic: configuration.

2. Configuration and bootstrap

We would love to make this section short. Unfortunately, there are several distinct ways to configure and bootstrap Hibernate, and we’re going to have to describe at least two of them in detail.

The five basic ways to obtain an instance of Hibernate are shown in the following table:

Using the standard JPA-defined XML, and the operation |

Usually chosen when portability between JPA implementations is important. |

Using the standard JPA-defined |

Usually chosen when portability between JPA implementations is important, but programmatic control is desired. |

Using |

When portability between JPA implementations is not important, this option adds some convenience and saves a typecast. |

Using the more complex APIs defined in |

Used primarily by framework integrators, this option is outside the scope of this document. |

By letting the container take care of the bootstrap process and of injecting the |

Used in a container environment like WildFly or Quarkus. |

Here we’ll focus on the first two options.

If you’re using Hibernate outside of a container environment, you’ll need to:

-

include Hibernate ORM itself, along with the appropriate JDBC driver, as dependencies of your project, and

-

configure Hibernate with information about your database, by specifying configuration properties.

2.1. Including Hibernate in your project build

First, add the following dependency to your project:

org.hibernate.orm:hibernate-core:{version}

Where {version} is the version of Hibernate you’re using, 7.0.10.Final, for example.

You’ll also need to add a dependency for the JDBC driver for your database.

| Database | Driver dependency |

|---|---|

PostgreSQL or CockroachDB |

|

MySQL or TiDB |

|

MariaDB |

|

DB2 |

|

SQL Server |

|

Oracle |

|

H2 |

|

HSQLDB |

|

Where {version} is the latest version of the JDBC driver for your database.

2.2. Optional dependencies

Optionally, you might also add any of the following additional features:

| Optional feature | Dependencies |

|---|---|

An SLF4J logging implementation |

|

A JDBC connection pool, for example, Agroal |

|

The Hibernate Processor, especially if you’re using Jakarta Data or the JPA criteria query API |

|

The Query Validator, for compile-time checking of HQL |

|

Hibernate Validator, an implementation of Bean Validation |

|

Local second-level cache support via JCache and EHCache |

|

Local second-level cache support via JCache and Caffeine |

|

Distributed second-level cache support via Infinispan |

|

A JSON serialization library for working with JSON datatypes, for example, Jackson or Yasson |

|

|

|

Envers, for auditing historical data |

|

Hibernate JFR, for monitoring via Java Flight Recorder |

|

You might also add the Hibernate bytecode enhancer to your Gradle build if you want to use field-level lazy fetching.

2.3. Configuration using JPA XML

Sticking to the JPA-standard approach, we would provide a file named persistence.xml, which we usually place in the META-INF directory of a persistence archive, that is, of the .jar file or directory which contains our entity classes.

<persistence xmlns="http://java.sun.com/xml/ns/persistence"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://java.sun.com/xml/ns/persistence https://jakarta.ee/xml/ns/persistence/persistence_3_0.xsd"

version="2.0">

<persistence-unit name="org.hibernate.example">

<class>org.hibernate.example.Book</class>

<class>org.hibernate.example.Author</class>

<properties>

<!-- PostgreSQL -->

<property name="jakarta.persistence.jdbc.url"

value="jdbc:postgresql://localhost/example"/>

<!-- Credentials -->

<property name="jakarta.persistence.jdbc.user"

value="gavin"/>

<property name="jakarta.persistence.jdbc.password"

value="hibernate"/>

<!-- Automatic schema export -->

<property name="jakarta.persistence.schema-generation.database.action"

value="drop-and-create"/>

<!-- SQL statement logging -->

<property name="hibernate.show_sql" value="true"/>

<property name="hibernate.format_sql" value="true"/>

<property name="hibernate.highlight_sql" value="true"/>

</properties>

</persistence-unit>

</persistence>The <persistence-unit> element defines a named persistence unit, that is:

-

a collection of associated entity types, along with

-

a set of default configuration settings, which may be augmented or overridden at runtime.

Each <class> element specifies the fully-qualified name of an entity class.

Each <property> element specifies a configuration property and its value.

Note that:

-

the configuration properties in the

jakarta.persistencenamespace are standard properties defined by the JPA spec, and -

properties in the

hibernatenamespace are specific to Hibernate.

We may obtain an EntityManagerFactory by calling Persistence.createEntityManagerFactory():

EntityManagerFactory entityManagerFactory =

Persistence.createEntityManagerFactory("org.hibernate.example");If necessary, we may override configuration properties specified in persistence.xml:

EntityManagerFactory entityManagerFactory =

Persistence.createEntityManagerFactory("org.hibernate.example",

Map.of(AvailableSettings.JAKARTA_JDBC_PASSWORD, password));2.4. Programmatic configuration using JPA API

The new PersistenceConfiguration class allows full programmatic control over creation of the EntityManagerFactory.

EntityManagerFactory entityManagerFactory =

new PersistenceConfiguration("Bookshop")

.managedClass(Book.class)

.managedClass(Author.class)

// PostgreSQL

.property(PersistenceConfiguration.JDBC_URL, "jdbc:postgresql://localhost/example")

// Credentials

.property(PersistenceConfiguration.JDBC_USER, user)

.property(PersistenceConfiguration.JDBC_PASSWORD, password)

// Automatic schema export

.property(PersistenceConfiguration.SCHEMAGEN_DATABASE_ACTION,

Action.SPEC_ACTION_DROP_AND_CREATE)

// SQL statement logging

.property(JdbcSettings.SHOW_SQL, true)

.property(JdbcSettings.FORMAT_SQL, true)

.property(JdbcSettings.HIGHLIGHT_SQL, true)

// Create a new EntityManagerFactory

.createEntityManagerFactory();The specification gives JPA implementors like Hibernate explicit permission to extend this class, and so Hibernate offers the HibernatePersistenceConfiguration, which lets us obtain a SessionFactory without any need for a cast.

SessionFactory sessionFactory =

new HibernatePersistenceConfiguration("Bookshop")

.managedClass(Book.class)

.managedClass(Author.class)

// PostgreSQL

.jdbcUrl("jdbc:postgresql://localhost/example")

// Credentials

.jdbcCredentials(user, password)

// Automatic schema export

.schemaToolingAction(Action.SPEC_ACTION_DROP_AND_CREATE)

// SQL statement logging

.showSql(true, true, true)

// Create a new SessionFactory

.createEntityManagerFactory();Alternatively, the venerable class Configuration offers similar functionality.

2.5. Configuration using Hibernate properties file

If we’re using programmatic configuration, but we don’t want to put certain configuration properties directly in the Java code, we can specify them in a file named hibernate.properties, and place the file in the root classpath.

# PostgreSQL

jakarta.persistence.jdbc.url=jdbc:postgresql://localhost/example

# Credentials

jakarta.persistence.jdbc.user=hibernate

jakarta.persistence.jdbc.password=zAh7mY$2MNshzAQ5

# SQL statement logging

hibernate.show_sql=true

hibernate.format_sql=true

hibernate.highlight_sql=true2.6. Basic configuration settings

The PersistenceConfiguration class declares static final constants holding the names of all configuration properties defined by the specification itself, for example, JDBC_URL holds the property name "jakarta.persistence.jdbc.driver".

Similarly, the class AvailableSettings enumerates all the configuration properties understood by Hibernate.

Of course, we’re not going to cover every useful configuration setting in this chapter. Instead, we’ll mention the ones you need to get started, and come back to some other important settings later, especially when we talk about performance tuning.

|

Hibernate has many—too many—switches and toggles. Please don’t go crazy messing about with these settings; most of them are rarely needed, and many only exist to provide backward compatibility with older versions of Hibernate. With rare exception, the default behavior of every one of these settings was carefully chosen to be the behavior we recommend. |

The properties you really do need to get started are these three:

| Configuration property name | Purpose |

|---|---|

|

JDBC URL of your database |

|

Your database credentials |

|

Since Hibernate 6, you don’t need to specify Similarly, neither |

In some environments it’s useful to be able to start Hibernate without accessing the database. In this case, we must explicitly specify not only the database platform, but also the version of the database, using the standard JPA configuration properties.

# disable use of JDBC database metadata

hibernate.boot.allow_jdbc_metadata_access=false

# explicitly specify database and version

jakarta.persistence.database-product-name=PostgreSQL

jakarta.persistence.database-major-version=15

jakarta.persistence.database-minor-version=7The product name is the value returned by java.sql.DatabaseMetaData.getDatabaseProductName(), for example, PostgreSQL, MySQL, H2, Oracle, EnterpriseDB, MariaDB, or Microsoft SQL Server.

| Configuration property name | Purpose |

|---|---|

|

Set to |

|

The database product name, according to the JDBC driver |

|

The major and minor versions of the database |

Pooling JDBC connections is an extremely important performance optimization. You can set the size of Hibernate’s built-in connection pool using this property:

| Configuration property name | Purpose |

|---|---|

|

The size of the connection pool |

This configuration property is also respected when you use Agroal, HikariCP, or c3p0 for connection pooling.

|

By default, Hibernate uses a simplistic built-in connection pool. This pool is not meant for use in production, and later, when we discuss performance, we’ll see how to select a more robust implementation. |

Alternatively, in a container environment, you’ll need at least one of these properties:

| Configuration property name | Purpose |

|---|---|

|

(Optional, defaults to |

|

JNDI name of a JTA datasource |

|

JNDI name of a non-JTA datasource |

In this case, Hibernate obtains pooled JDBC database connections from a container-managed DataSource.

2.7. Automatic schema export

You can have Hibernate infer your database schema from the mapping annotations you’ve specified in your Java code, and export the schema at initialization time by specifying one or more of the following configuration properties:

| Configuration property name | Purpose |

|---|---|

|

|

|

(Optional) If |

|

(Optional) If |

|

(Optional) The name of a SQL DDL script to be executed |

|

(Optional) The name of a SQL DML script to be executed |

This feature is extremely useful for testing.

|

The easiest way to pre-initialize a database with test or "reference" data is to place a list of SQL |

As we mentioned earlier, it can also be useful to control schema export programmatically.

|

The |

2.8. Logging the generated SQL

To see the generated SQL as it’s sent to the database, you have two options.

One way is to set the property hibernate.show_sql to true, and Hibernate will log SQL directly to the console.

You can make the output much more readable by enabling formatting or highlighting.

These settings really help when troubleshooting the generated SQL statements.

| Configuration property name | Purpose |

|---|---|

|

If |

|

If |

|

If |

Alternatively, you can enable debug-level logging for the category org.hibernate.SQL using your preferred SLF4J logging implementation.

For example, if you’re using Log4J 2 (as above in Optional dependencies), add these lines to your log4j2.properties file:

# SQL execution

logger.hibernate.name = org.hibernate.SQL

logger.hibernate.level = debug

# JDBC parameter binding

logger.jdbc-bind.name=org.hibernate.orm.jdbc.bind

logger.jdbc-bind.level=trace

# JDBC result set extraction

logger.jdbc-extract.name=org.hibernate.orm.jdbc.extract

logger.jdbc-extract.level=traceSQL logging respects the settings hibernate.format_sql and hibernate.highlight_sql, so we don’t miss out on the pretty formatting and highlighting.

2.9. Minimizing repetitive mapping information

The following properties are very useful for minimizing the amount of information you’ll need to explicitly specify in @Table and @Column annotations, which we’ll discuss below in Object/relational mapping:

| Configuration property name | Purpose |

|---|---|

A default schema name for entities which do not explicitly declare one |

|

A default catalog name for entities which do not explicitly declare one |

|

A |

|

An |

|

Writing your own |

2.10. Quoting SQL identifiers

By default, Hibernate never quotes a SQL table or column name in generated SQL when the name contains only alphanumeric characters.

This behavior is usually much more convenient, especially when working with a legacy schema, since unquoted identifiers aren’t case-sensitive, and so Hibernate doesn’t need to know or care whether a column is named NAME, name, or Name on the database side.

On the other hand, any table or column name containing a punctuation character like $ is automatically quoted by default.

The following settings enable additional automatic quoting:

| Configuration property name | Purpose |

|---|---|

Automatically quote any identifier which is a SQL keyword |

|

Automatically quote every identifier |

Note that hibernate.globally_quoted_identifiers is a synonym for <delimited-identifiers/> in persistence.xml.

We don’t recommend the use of global identifier quoting, and in fact these settings are rarely used.

|

A better alternative is to explicitly quote table and column names where necessary, by writing |

2.11. Nationalized character data in SQL Server

By default, SQL Server’s char and varchar types don’t accommodate Unicode data.

But a Java string may contain any Unicode character.

So, if you’re working with SQL Server, you might need to force Hibernate to use the nchar and nvarchar column types.

| Configuration property name | Purpose |

|---|---|

Use |

On the other hand, if only some columns store nationalized data, use the @Nationalized annotation to indicate fields of your entities which map these columns.

|

Alternatively, you can configure SQL Server to use the UTF-8 enabled collation |

2.12. Date and time types and JDBC

By default, Hibernate handles date and time types defined by java.time by:

-

converting

java.timetypes to JDBC date/time types defined injava.sqlwhen sending data to the database, and -

reading

java.sqltypes from JDBC and then converting them tojava.timetypes when retrieving data from the database.

This works best when the database server time zone agrees with JVM system time zone.

| We therefore recommend setting things up so that the database server and the JVM agree on the same time zone. Hint: when in doubt, UTC is quite a nice time zone. |

There are two system configuration properties which influence this behavior:

| Configuration property name | Purpose |

|---|---|

Use an explicit time zone when interacting with JDBC |

|

Read and write |

You may set hibernate.jdbc.time_zone to the time zone of the database server if for some reason the JVM needs to operate in a different time zone.

We do not recommend this approach.

On the other hand, we would love to recommend the use of hibernate.type.java_time_use_direct_jdbc, but this option is still experimental for now, and does result in some subtle differences in behavior which might affect legacy programs using Hibernate.

3. Entities

An entity is a Java class which represents data in a relational database table. We say that the entity maps or maps to the table. Much less commonly, an entity might aggregate data from multiple tables, but we’ll get to that later.

An entity has attributes—properties or fields—which map to columns of the table. In particular, every entity must have an identifier or id, which maps to the primary key of the table. The id allows us to uniquely associate a row of the table with an instance of the Java class, at least within a given persistence context.

We’ll explore the idea of a persistence context later. For now, think of it as a one-to-one mapping between ids and entity instances.

An instance of a Java class cannot outlive the virtual machine to which it belongs.

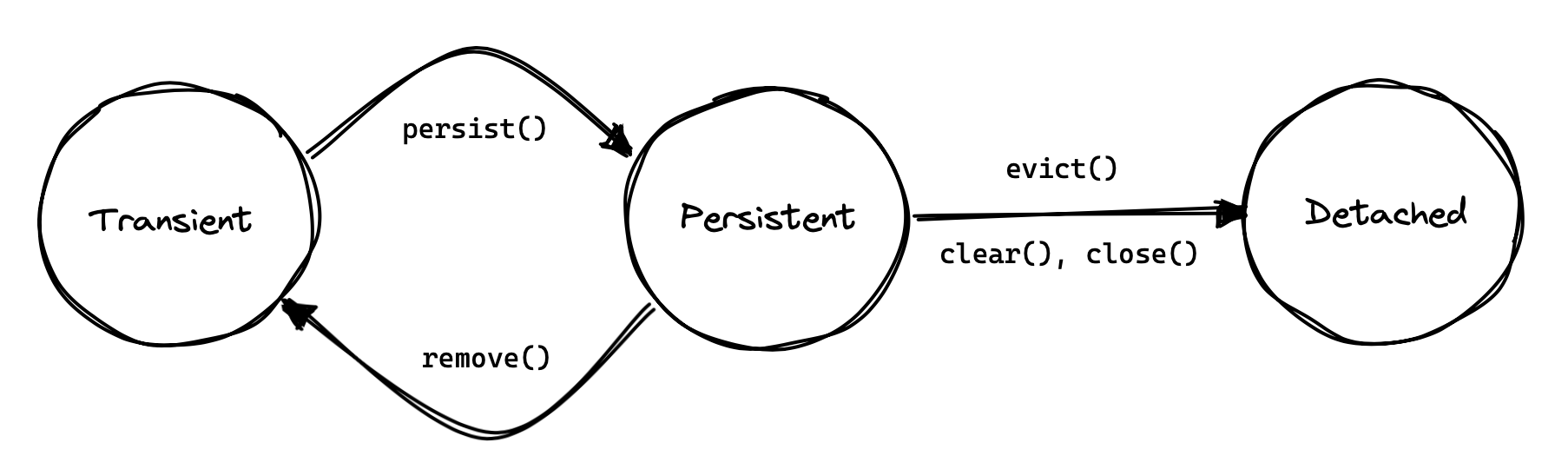

But we may think of an entity instance having a lifecycle which transcends a particular instantiation in memory.

By providing its id to Hibernate, we may re-materialize the instance in a new persistence context, as long as the associated row is present in the database.

Therefore, the operations persist() and remove() may be thought of as demarcating the beginning and end of the lifecycle of an entity, at least with respect to persistence.

Thus, an id represents the persistent identity of an entity, an identity that outlives a particular instantiation in memory.

And this is an important difference between entity class itself and the values of its attributes—the entity has a persistent identity, and a well-defined lifecycle with respect to persistence, whereas a String or List representing one of its attribute values doesn’t.

An entity usually has associations to other entities. Typically, an association between two entities maps to a foreign key in one of the database tables. A group of mutually associated entities is often called a domain model, though data model is also a perfectly good term.

3.1. Entity classes

An entity must:

-

be a non-

finalclass, -

with a non-

privateconstructor with no parameters.

On the other hand, the entity class may be either concrete or abstract, and it may have any number of additional constructors.

|

An entity class may be a |

Every entity class must be annotated @Entity.

@Entity

class Book {

Book() {}

...

}Alternatively, the class may be identified as an entity type by providing an XML-based mapping for the class.

We won’t have much more to say about XML-based mappings in this Short Guide, since it’s not our preferred way to do things.

3.2. Access types

Each entity class has a default access type, either:

-

direct field access, or

-

property access.

Hibernate automatically determines the access type from the location of attribute-level annotations. Concretely:

-

if a field is annotated

@Id, field access is used, or -

if a getter method is annotated

@Id, property access is used.

Back when Hibernate was just a baby, property access was quite popular in the Hibernate community. Today, however, field access is much more common.

|

The default access type may be specified explicitly using the |

|

Mapping annotations should be placed consistently:

It is in principle possible to mix field and property access using explicit |

An entity class like Book, which does not extend any other entity class, is called a root entity.

Every root entity must declare an identifier attribute.

3.3. Entity class inheritance

An entity class may extend another entity class.

@Entity

class AudioBook extends Book {

AudioBook() {}

...

}A subclass entity inherits every persistent attribute of every entity it extends.

A root entity may also extend another class and inherit mapped attributes from the other class.

But in this case, the class which declares the mapped attributes must be annotated @MappedSuperclass.

@MappedSuperclass

class Versioned {

...

}

@Entity

class Book extends Versioned {

...

}A root entity class must declare an attribute annotated @Id, or inherit one from a @MappedSuperclass.

A subclass entity always inherits the identifier attribute of the root entity.

It may not declare its own @Id attribute.

3.4. Identifier attributes

An identifier attribute is usually a field:

@Entity

class Book {

Book() {}

@Id

Long id;

...

}But it may be a property:

@Entity

class Book {

Book() {}

private Long id;

@Id

Long getId() { return id; }

void setId(Long id) { this.id = id; }

...

}An identifier attribute must be annotated @Id or @EmbeddedId.

Identifier values may be:

-

assigned by the application, that is, by your Java code, or

-

generated and assigned by Hibernate.

We’ll discuss the second option first.

3.5. Generated identifiers

An identifier is often system-generated, in which case it should be annotated @GeneratedValue:

@Id @GeneratedValue

Long id;|

System-generated identifiers, or surrogate keys make it easier to evolve or refactor the relational data model. If you have the freedom to define the relational schema, we recommend the use of surrogate keys. On the other hand, if, as is more common, you’re working with a pre-existing database schema, you might not have the option. |

JPA defines the following strategies for generating ids, which are enumerated by GenerationType:

| Strategy | Java type | Implementation |

|---|---|---|

|

|

A Java |

|

|

An identity or autoincrement column |

|

|

A database sequence |

|

|

A database table |

|

|

Selects |

For example, this UUID is generated in Java code:

@Id @GeneratedValue UUID id; // AUTO strategy selects UUID based on the field typeThis id maps to a SQL identity, auto_increment, or bigserial column:

@Id @GeneratedValue(strategy = IDENTITY) Long id;The @SequenceGenerator and @TableGenerator annotations allow further control over SEQUENCE and TABLE generation respectively.

Consider this sequence generator:

@SequenceGenerator(name = "bookSeq", sequenceName = "seq_book", initialValue = 5, allocationSize=10)Values are generated using a database sequence defined as follows:

create sequence seq_book start with 5 increment by 10Notice that Hibernate doesn’t have to go to the database every time a new identifier is needed.

Instead, a given process obtains a block of ids, of size allocationSize, and only needs to hit the database each time the block is exhausted.

Of course, the downside is that generated identifiers are not contiguous.

|

If you let Hibernate export your database schema, the sequence definition will have the right |

Any identifier attribute may now make use of the generator named bookSeq:

@Id

@GeneratedValue(generator = "bookSeq") // reference to generator defined elsewhere

Long id;Actually, it’s extremely common to place the @SequenceGenerator annotation on the @Id attribute that makes use of it:

@Id

@GeneratedValue // uses the generator defined below

@SequenceGenerator(sequenceName = "seq_book", initialValue = 5, allocationSize=10)

Long id;In this case, the name of the @SequenceGenerator should not be specified.

We may even place a @SequenceGenerator or @TableGenerator annotation at the package level:

@SequenceGenerator(sequenceName = "id_sequence", initialValue = 5, allocationSize=10)

@TableGenerator(table = "id_table", initialValue = 5, allocationSize=10)

package org.example.entities;Then any entity in this package which specifies strategy=SEQUENCE or strategy=TABLE without also explicitly specifying a generator name will be assigned a generator based on the package-level annotation.

@Id

@GeneratedValue(strategy=SEQUENCE) // uses the sequence generator defined at the package level

Long id;As you can see, JPA provides quite adequate support for the most common strategies for system-generated ids.

However, the annotations themselves are a bit more intrusive than they should be, and there’s no well-defined way to extend this framework to support custom strategies for id generation.

Nor may @GeneratedValue be used on a property not annotated @Id.

Since custom id generation is a rather common requirement, Hibernate provides a very carefully-designed framework for user-defined Generators, which we’ll discuss in User-defined generators.

3.6. Natural keys as identifiers

Not every identifier attribute maps to a (system-generated) surrogate key. Primary keys which are meaningful to the user of the system are called natural keys.

When the primary key of a table is a natural key, we don’t annotate the identifier attribute @GeneratedValue, and it’s the responsibility of the application code to assign a value to the identifier attribute.

@Entity

class Book {

@Id

String isbn;

...

}Of particular interest are natural keys which comprise more than one database column, and such natural keys are called composite keys.

3.7. Composite identifiers

If your database uses composite keys, you’ll need more than one identifier attribute. There are two ways to map composite keys in JPA:

-

using an

@IdClass, or -

using an

@EmbeddedId.

Perhaps the most immediately-natural way to represent this in an entity class is with multiple fields annotated @Id, for example:

@Entity

@IdClass(BookId.class)

class Book {

Book() {}

@Id

String isbn;

@Id

int printing;

...

}But this approach comes with a problem: what object can we use to identify a Book and pass to methods like find() which accept an identifier?

The solution is to write a separate class with fields that match the identifier attributes of the entity.

Every such id class must override equals() and hashCode().

Of course, the easiest way to satisfy these requirements is to declare the id class as a record.

record BookId(String isbn, int printing) {}The @IdClass annotation of the Book entity identifies BookId as the id class to use for that entity.

This is not our preferred approach.

Instead, we recommend that the BookId class be declared as an @Embeddable type:

@Embeddable

record BookId(String isbn, int printing) {}We’ll learn more about Embeddable objects below.

Now the entity class may reuse this definition using @EmbeddedId, and the @IdClass annotation is no longer required:

@Entity

class Book {

Book() {}

@EmbeddedId

BookId bookId;

...

}This second approach eliminates some duplicated code.

Either way, we may now use BookId to obtain instances of Book:

Book book = session.find(Book.class, new BookId(isbn, printing));3.8. Version attributes

An entity may have an attribute which is used by Hibernate for optimistic lock verification.

A version attribute is usually of type Integer, Short, Long, LocalDateTime, OffsetDateTime, ZonedDateTime, or Instant.

@Version

int version;@Version

LocalDateTime lastUpdated;A version attribute is automatically assigned by Hibernate when an entity is made persistent, and automatically incremented or updated each time the entity is updated.

If the version attribute is numeric, then an entity is, by default, assigned the version number 0 when it’s first made persistent.

It’s easy to specify that the initial version should be assigned the number 1 instead:

@Version

int version = 1; // the initial version numberAlmost every entity which is frequently updated should have a version attribute.

|

If an entity doesn’t have a version number, which often happens when mapping legacy data, we can still do optimistic locking.

The |

The @Id and @Version attributes we’ve already seen are just specialized examples of basic attributes.

3.9. Natural id attributes

Even when an entity has a surrogate key, it should always be possible to write down a combination of fields which uniquely identifies an instance of the entity, from the point of view of the user of the system. This combination of fields is its natural key. Above, we considered the case where the natural key coincides with the primary key. Here, the natural key is a second unique key of the entity, distinct from its surrogate primary key.

|

If you can’t identify a natural key, it might be a sign that you need to think more carefully about some aspect of your data model. If an entity doesn’t have a meaningful unique key, then it’s impossible to say what event or object it represents in the "real world" outside your program. |

Since it’s extremely common to retrieve an entity based on its natural key, Hibernate has a way to mark the attributes of the entity which make up its natural key.

Each attribute must be annotated @NaturalId.

@Entity

class Book {

Book() {}

@Id @GeneratedValue

Long id; // the system-generated surrogate key

@NaturalId

String isbn; // belongs to the natural key

@NaturalId

int printing; // also belongs to the natural key

...

}Hibernate automatically generates a UNIQUE constraint on the columns mapped by the annotated fields.

|

Consider using the natural id attributes to implement |

The payoff for doing this extra work, as we will see much later, is that we can take advantage of optimized natural id lookups that make use of the second-level cache.

Note that even when you’ve identified a natural key, we still recommend the use of a generated surrogate key in foreign keys, since this makes your data model much easier to change.

3.10. Basic attributes

A basic attribute of an entity is a field or property which maps to a single column of the associated database table. The JPA specification defines a quite limited set of basic types:

| Classification | Package | Types |

|---|---|---|

Primitive types |

|

|

Primitive wrappers |

|

|

Strings |

|

|

Arbitrary-precision numeric types |

|

|

UUIDs |

|

|

Date/time types |

|

|

Deprecated date/time types 💀 |

|

|

Deprecated JDBC date/time types 💀 |

|

|

Binary and character arrays |

|

|

Binary and character wrapper arrays 💀 |

|

|

Enumerated types |

Any |

|

Serializable types |

Any type which implements |

|

We’re begging you to use types from the |

|

The use of |

|

Serializing a Java object and storing its binary representation in the database is usually wrong. As we’ll soon see in Embeddable objects, Hibernate has much better ways to handle complex Java objects. |

Hibernate slightly extends this list with the following types:

| Classification | Package | Types |

|---|---|---|

Additional date/time types |

|

|

JDBC LOB types |

|

|

Java class object |

|

|

Miscellaneous types |

|

|

The @Basic annotation explicitly specifies that an attribute is basic, but it’s often not needed, since attributes are assumed basic by default.

On the other hand, if a non-primitively-typed attribute cannot be null, use of @Basic(optional=false) is highly recommended.

@Basic(optional=false) String firstName;

@Basic(optional=false) String lastName;

String middleName; // may be nullNote that primitively-typed attributes are inferred NOT NULL by default.

3.11. Enumerated types

We included Java enums on the list above.

An enumerated type is considered a sort of basic type, but since most databases don’t have a native ENUM type, JPA provides a special @Enumerated annotation to specify how the enumerated values should be represented in the database:

-

by default, an enum is stored as an integer, the value of its

ordinal()member, but -

if the attribute is annotated

@Enumerated(STRING), it will be stored as a string, the value of itsname()member.

//here, an ORDINAL encoding makes sense

@Enumerated

@Basic(optional=false)

DayOfWeek dayOfWeek;

//but usually, a STRING encoding is better

@Enumerated(EnumType.STRING)

@Basic(optional=false)

Status status;The @EnumeratedValue annotation allows the column value to be customized:

enum Resolution {

UNRESOLVED(0), FIXED(1), REJECTED(-1);

@EnumeratedValue // store the code, not the enum ordinal() value

final int code;

Resolution(int code) {

this.code = code;

}

}Since Hibernate 6, an enum annotated @Enumerated(STRING) is mapped to:

-

a

VARCHARcolumn type with aCHECKconstraint on most databases, or -

an

ENUMcolumn type on MySQL.

Any other enum is mapped to a TINYINT column with a CHECK constraint.

|

JPA picks the wrong default here.

In most cases, storing an integer encoding of the Even considering So we prefer |

An interesting special case arises on PostgreSQL and Oracle.

The limited set of pre-defined basic attribute types can be stretched a bit further by supplying a converter.

3.12. Converters

A JPA AttributeConverter is responsible for:

-

converting a given Java type to one of the types listed above, and/or

-

perform any other sort of pre- and post-processing you might need to perform on a basic attribute value before writing and reading it to or from the database.

Converters substantially widen the set of attribute types that can be handled by JPA.

There are two ways to apply a converter:

-

the

@Convertannotation applies anAttributeConverterto a particular entity attribute, or -

the

@Converterannotation (or, alternatively, the@ConverterRegistrationannotation) registers anAttributeConverterfor automatic application to all attributes of a given type.

For example, the following converter will be automatically applied to any attribute of type EnumSet<DayOfWeek>, and takes care of persisting the EnumSet<DayOfWeek> to a column of type INTEGER:

@Converter(autoApply = true)

public static class EnumSetConverter

// converts Java values of type EnumSet<DayOfWeek> to integers for storage in an INT column

implements AttributeConverter<EnumSet<DayOfWeek>,Integer> {

@Override

public Integer convertToDatabaseColumn(EnumSet<DayOfWeek> enumSet) {

int encoded = 0;

var values = DayOfWeek.values();

for (int i = 0; i<values.length; i++) {

if (enumSet.contains(values[i])) {

encoded |= 1<<i;

}

}

return encoded;

}

@Override

public EnumSet<DayOfWeek> convertToEntityAttribute(Integer encoded) {

var set = EnumSet.noneOf(DayOfWeek.class);

var values = DayOfWeek.values();

for (int i = 0; i<values.length; i++) {

if (((1<<i) & encoded) != 0) {

set.add(values[i]);

}

}

return set;

}

}On the other hand, if we don’t set autoapply=true, then we must explicitly apply the converter using the @Convert annotation:

@Convert(converter = EnumSetConverter.class)

@Basic(optional = false)

EnumSet<DayOfWeek> daysOfWeek;All this is nice, but it probably won’t surprise you that Hibernate goes beyond what is required by JPA.

3.13. Compositional basic types

Hibernate considers a "basic type" to be formed by the marriage of two objects:

-

a

JavaType, which models the semantics of a certain Java class, and -

a

JdbcType, representing a SQL type which is understood by JDBC.

When mapping a basic attribute, we may explicitly specify a JavaType, a JdbcType, or both.

JavaType

An instance of org.hibernate.type.descriptor.java.JavaType represents a particular Java class.

It’s able to:

-

compare instances of the class to determine if an attribute of that class type is dirty (modified),

-

produce a useful hash code for an instance of the class,

-

coerce values to other types, and, in particular,

-

convert an instance of the class to one of several other equivalent Java representations at the request of its partner

JdbcType.

For example, IntegerJavaType knows how to convert an Integer or int value to the types Long, BigInteger, and String, among others.

We may explicitly specify a Java type using the @JavaType annotation, but for the built-in JavaTypes this is never necessary.

@JavaType(LongJavaType.class) // not needed, this is the default JavaType for long

long currentTimeMillis;For a user-written JavaType, the annotation is more useful:

@JavaType(BitSetJavaType.class)

BitSet bitSet;Alternatively, the @JavaTypeRegistration annotation may be used to register BitSetJavaType as the default JavaType for BitSet.

JdbcType

An org.hibernate.type.descriptor.jdbc.JdbcType is able to read and write a single Java type from and to JDBC.

For example, VarcharJdbcType takes care of:

-

writing Java strings to JDBC

PreparedStatements by callingsetString(), and -

reading Java strings from JDBC

ResultSets usinggetString().

By pairing LongJavaType with VarcharJdbcType in holy matrimony, we produce a basic type which maps Longs and primitive longss to the SQL type VARCHAR.

We may explicitly specify a JDBC type using the @JdbcType annotation.

@JdbcType(VarcharJdbcType.class)

long currentTimeMillis;Alternatively, we may specify a JDBC type code:

@JdbcTypeCode(Types.VARCHAR)

long currentTimeMillis;The @JdbcTypeRegistration annotation may be used to register a user-written JdbcType as the default for a given SQL type code.

AttributeConverter

If a given JavaType doesn’t know how to convert its instances to the type required by its partner JdbcType, we must help it out by providing a JPA AttributeConverter to perform the conversion.

For example, to form a basic type using LongJavaType and TimestampJdbcType, we would provide an AttributeConverter<Long,Timestamp>.

@JdbcType(TimestampJdbcType.class)

@Convert(converter = LongToTimestampConverter.class)

long currentTimeMillis;Let’s abandon our analogy right here, before we start calling this basic type a "throuple".

3.14. Date and time types, and time zones

Dates and times should always be represented using the types defined in java.time.

|

Never use the legacy types |

Some of the types in java.time map naturally to an ANSI SQL column type.

A source of confusion is that some databases still don’t follow the ANSI standard naming here.

Also, as you’re probably aware, the DATE type on Oracle is not an ANSI SQL DATE.

In fact, Oracle doesn’t have DATE or TIME types—every date or time must be stored as a timestamp.

java.time class |

ANSI SQL type | MySQL | SQL Server | Oracle |

|---|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

On the other hand, there are no perfectly natural mappings for Instant and Duration on must databases.

By default:

-

Durationis mapped to a column of typeNUMERIC(21)holding the length of the duration in nanoseconds, and -

Instantis mapped to a column of typeTIMESTAMP(DATETIMEon MySQL).

Fortunately, these mappings can be modified by specifying the JdbcType.

For example, if we wanted to store an Instant using TIMESTAMP WITH TIME ZONE (TIMESTAMP on MySQL) instead of TIMESTAMP, then we could annotate the field:

// store the Instant as a TIMESTAMP WITH TIME ZONE, instead of as a TIMESTAMP

@JdbcTypeCode(SqlTypes.TIMESTAMP_WITH_TIMEZONE)

Instant instant;Alternatively, we could set the configuration property hibernate.type.preferred_instant_jdbc_type:

// store field of type Instant as TIMESTAMP WITH TIME ZONE, instead of as a TIMESTAMP

config.setProperty(MappingSettings.PREFERRED_INSTANT_JDBC_TYPE, SqlTypes.TIMESTAMP_WITH_TIMEZONE);We have worked very hard to make sure that Java date and time types work with consistent and correct semantics across all databases supported by Hibernate. In particular, Hibernate is very careful in how it handles time zones.

|

Unfortunately, with the notable exception of Oracle, most SQL databases feature embarrassingly poor support for timezones.

Even some databases which do supposedly support |

|

The still-experimental annotation |

3.15. Embeddable objects

An embeddable object is a Java class whose state maps to multiple columns of a table, but which doesn’t have its own persistent identity.

That is, it’s a class with mapped attributes, but no @Id attribute.

An embeddable object can only be made persistent by assigning it to the attribute of an entity. Since the embeddable object does not have its own persistent identity, its lifecycle with respect to persistence is completely determined by the lifecycle of the entity to which it belongs.

An embeddable class must be annotated @Embeddable instead of @Entity.

@Embeddable

class Name {

@Basic(optional=false)

String firstName;

@Basic(optional=false)

String lastName;

String middleName;

Name() {}

Name(String firstName, String middleName, String lastName) {

this.firstName = firstName;

this.middleName = middleName;

this.lastName = lastName;

}

...

}An embeddable class must satisfy the same requirements that entity classes satisfy, with the exception that an embeddable class has no @Id attribute.

In particular, it must have a constructor with no parameters.

Alternatively, an embeddable type may be defined as a Java record type:

@Embeddable

record Name(String firstName, String middleName, String lastName) {}In this case, the requirement for a constructor with no parameters is relaxed.

We may now use our Name class (or record) as the type of an entity attribute:

@Entity

class Author {

@Id @GeneratedValue

Long id;

Name name;

...

}Embeddable types can be nested.

That is, an @Embeddable class may have an attribute whose type is itself a different @Embeddable class.

|

JPA provides an |

On the other hand a reference to an embeddable type is never polymorphic.

One @Embeddable class F may inherit a second @Embeddable class E, but an attribute of type E will always refer to an instance of that concrete class E, never to an instance of F.

Usually, embeddable types are stored in a "flattened" format. Their attributes map columns of the table of their parent entity. Later, in Mapping embeddable types to UDTs or to JSON, we’ll see a couple of different options.

An attribute of embeddable type represents a relationship between a Java object with a persistent identity, and a Java object with no persistent identity. We can think of it as a whole/part relationship. The embeddable object belongs to the entity, and can’t be shared with other entity instances. And it exists for only as long as its parent entity exists.

Next we’ll discuss a different kind of relationship: a relationship between Java objects which each have their own distinct persistent identity and persistence lifecycle.

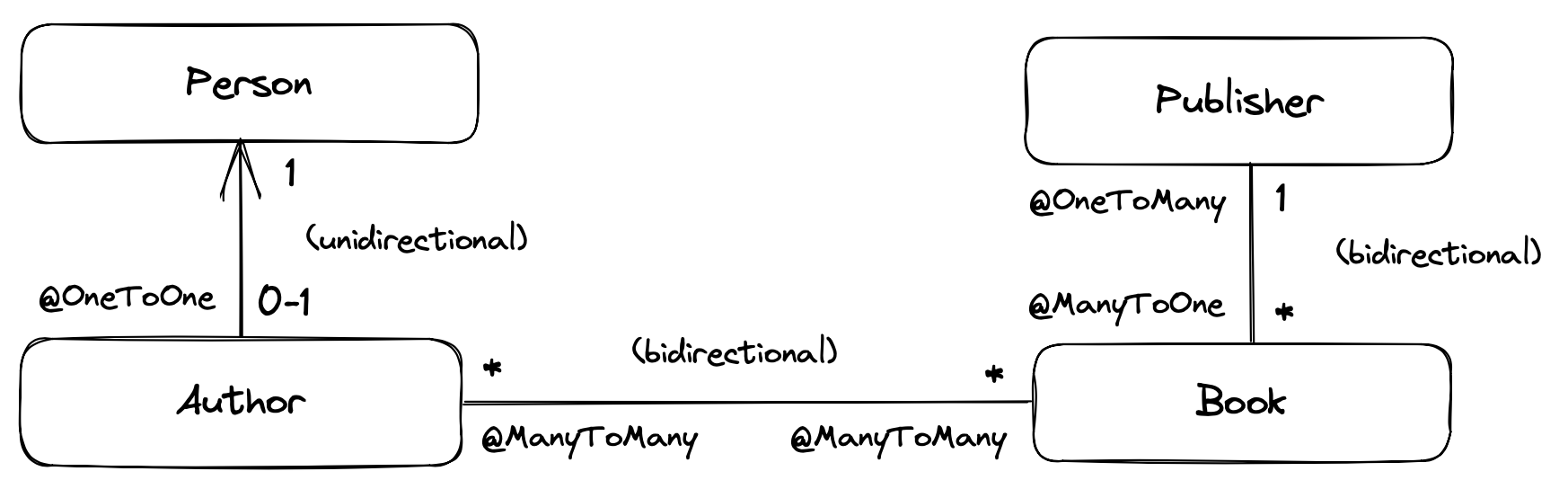

3.16. Associations

An association is a relationship between entities.

We usually classify associations based on their multiplicity.

If E and F are both entity classes, then:

-

a one-to-one association relates at most one unique instance

Ewith at most one unique instance ofF, -

a many-to-one association relates zero or more instances of

Ewith a unique instance ofF, and -

a many-to-many association relates zero or more instances of

Ewith zero or more instance ofF.

An association between entity classes may be either:

-

unidirectional, navigable from

EtoFbut not fromFtoE, or -

bidirectional, and navigable in either direction.

In this example data model, we can see the sorts of associations which are possible:

|

An astute observer of the diagram above might notice that the relationship we’ve presented as a unidirectional one-to-one association could reasonably be represented in Java using subtyping.

This is quite normal.

A one-to-one association is the usual way we implement subtyping in a fully-normalized relational model.

It’s related to the |

There are three annotations for mapping associations: @ManyToOne, @OneToMany, and @ManyToMany.

They share some common annotation members:

| Member | Interpretation | Default value |

|---|---|---|

|

Persistence operations which should cascade to the associated entity; a list of |

|

|

Whether the association is eagerly fetched or may be proxied |

|

|

The associated entity class |

Determined from the attribute type declaration |

|

For a |

|

|

For a bidirectional association, an attribute of the associated entity which maps the association |

By default, the association is assumed unidirectional |

We’ll explain the effect of these members as we consider the various types of association mapping.

It’s not a requirement to represent every foreign key relationship as an association at the Java level.

It’s perfectly acceptable to replace a @ManyToOne mapping with a basic-typed attribute holding an identifier, if it’s inconvenient to think of this relationship as an association at the Java level.