- 3.1. Directory configuration

- 3.2. Sharding indexes

- 3.3. Sharing indexes (two entities into the same directory)

- 3.4. Worker configuration

- 3.5. JMS Master/Slave configuration

- 3.6. JGroups Master/Slave configuration

- 3.7. Reader strategy configuration

- 3.8. Enabling Hibernate Search and automatic indexing

- 3.9. Tuning Lucene indexing performance

- 3.10. LockFactory configuration

- 3.11. Exception Handling Configuration

Apache Lucene has a notion of Directory to store

the index files. The Directory implementation can

be customized, but Lucene comes bundled with a file system

(FSDirectoryProvider) and an in memory

(RAMDirectoryProvider) implementation.

DirectoryProviders are the Hibernate Search abstraction

around a Lucene Directory and handle the

configuration and the initialization of the underlying Lucene resources.

Table 3.1, “List of built-in Directory Providers” shows the list of the

directory providers bundled with Hibernate Search.

Table 3.1. List of built-in Directory Providers

| Class | Description | Properties |
|---|---|---|
| org.hibernate.search.store.RAMDirectoryProvider | Memory based directory. The directory will be uniquely identified (in the same deployment unit) by the @Indexed.index element. | none |
| org.hibernate.search.store.FSDirectoryProvider | File system based directory. The directory used will be <indexBase>/<indexName>. | |
| org.hibernate.search.store.FSMasterDirectoryProvider | File system based directory. Like FSDirectoryProvider. It also copies the index to a source directory (aka copy directory) on a regular basis. The recommended value for the refresh period is (at least) 50% higher than the time to copy the information (default 3600 seconds - 60 minutes). Note that the copy is based on an incremental copy mechanism reducing the average copy time. DirectoryProvider typically used on the master node in a JMS back end cluster. The | |
| org.hibernate.search.store.FSSlaveDirectoryProvider | File system based directory. Like FSDirectoryProvider, but retrieves a master version (source) on a regular basis. To avoid locking and inconsistent search results, 2 local copies are kept. The recommended value for the refresh period is (at least) 50% higher than the time to copy the information (default 3600 seconds - 60 minutes). Note that the copy is based on an incremental copy mechanism reducing the average copy time. DirectoryProvider typically used on slave nodes using a JMS back end. The | |

If the built-in directory providers do not fit your needs, you can

write your own directory provider by implementing the

org.hibernate.search.store.DirectoryProvider

interface.

Each indexed entity is associated with a Lucene index (an index can be shared by several entities, but this is not usually the case). You can configure the index through properties prefixed by hibernate.search.indexname. Default properties inherited by all indexes can be defined using the prefix hibernate.search.default.

To define the directory provider of a given index, use the hibernate.search.indexname.directory_provider property.

Example 3.1. Configuring directory providers

hibernate.search.default.directory_provider org.hibernate.search.store.FSDirectoryProvider
hibernate.search.default.indexBase=/usr/lucene/indexes
hibernate.search.Rules.directory_provider org.hibernate.search.store.RAMDirectoryProvider

applied on

Example 3.2. Specifying the index name using the index

parameter of @Indexed

@Indexed(index="Status")

public class Status { ... }

@Indexed(index="Rules")

public class Rule { ... }

will create a file system directory in /usr/lucene/indexes/Status where the Status entities will be indexed, and use an in memory directory named Rules where Rule entities will be indexed.

You can easily define common rules like the directory provider and base directory, and override those defaults later on a per index basis.

If you write your own DirectoryProvider, you can use this configuration mechanism as well.

In some cases, it is necessary to split (shard) the indexing data of a given entity type into several Lucene indexes. This solution is not recommended unless there is a pressing need because by default, searches will be slower as all shards have to be opened for a single search. In other words don't do it until you have problems :)

For example, sharding may be desirable if:

- A single index is so huge that index update times are slowing the application down.
- A typical search will only hit a sub-set of the index, such as when data is naturally segmented by customer, region or application.

Hibernate Search allows you to index a given entity type into

several sub indexes. Data is sharded into the different sub indexes thanks

to an IndexShardingStrategy. By default, no

sharding strategy is enabled, unless the number of shards is configured.

To configure the number of shards use the following property

Example 3.3. Enabling index sharding by specifying nbr_of_shards for a specific index

hibernate.search.<indexName>.sharding_strategy.nbr_of_shards 5

This will use 5 different shards.

The default sharding strategy, when shards are set up, splits the

data according to the hash value of the id string representation

(generated by the Field Bridge). This ensures a fairly balanced sharding.
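As a rough illustration of hash-based shard selection, the sketch below maps the string form of an id to one of N shards. It shows the general technique only, not Hibernate Search's actual implementation: ShardSelectionSketch and shardFor are hypothetical names, and String.hashCode() merely stands in for whatever hash function the library applies.

```java
public class ShardSelectionSketch {

    // Hypothetical helper: picks a shard in [0, nbrOfShards) from the id's string form.
    public static int shardFor(String idInString, int nbrOfShards) {
        if (nbrOfShards < 1) {
            throw new IllegalArgumentException("nbrOfShards must be >= 1");
        }
        // floorMod keeps the result non-negative even when hashCode() is negative.
        return Math.floorMod(idInString.hashCode(), nbrOfShards);
    }

    public static void main(String[] args) {
        for (String id : new String[] {"1", "2", "42", "1000"}) {
            System.out.println("id " + id + " -> shard " + shardFor(id, 5));
        }
    }
}
```

Because the shard depends only on the id string, the same entity always lands in the same shard as long as the number of shards does not change.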

You can replace the strategy by implementing

IndexShardingStrategy and by setting the following

property

Example 3.4. Specifying a custom sharding strategy

hibernate.search.<indexName>.sharding_strategy my.shardingstrategy.Implementation

Using a custom IndexShardingStrategy implementation, it's possible to define which shard a given entity is indexed to.

It also allows for optimizing searches by selecting which shards to run the query on. By activating a filter (see Section 5.3.1, “Using filters in a sharded environment”), a sharding strategy can select a subset of the shards used to answer a query (IndexShardingStrategy.getDirectoryProvidersForQuery) and thus speed up the query execution.

Each shard has an independent directory provider configuration as described in Section 3.1, “Directory configuration”. The default DirectoryProvider names for the previous example are <indexName>.0 to <indexName>.4. In other words, each shard has the name of its owning index followed by . (dot) and its index number.

Example 3.5. Configuring the sharding configuration for an example entity

Animal

hibernate.search.default.indexBase /usr/lucene/indexes
hibernate.search.Animal.sharding_strategy.nbr_of_shards 5
hibernate.search.Animal.directory_provider org.hibernate.search.store.FSDirectoryProvider
hibernate.search.Animal.0.indexName Animal00
hibernate.search.Animal.3.indexBase /usr/lucene/sharded
hibernate.search.Animal.3.indexName Animal03

This configuration uses the default id string hashing strategy and shards the Animal index into 5 subindexes. All subindexes are FSDirectoryProvider instances and the directory where each subindex is stored is as follows:

- for subindex 0: /usr/lucene/indexes/Animal00 (shared indexBase but overridden indexName)
- for subindex 1: /usr/lucene/indexes/Animal.1 (shared indexBase, default indexName)
- for subindex 2: /usr/lucene/indexes/Animal.2 (shared indexBase, default indexName)
- for subindex 3: /usr/lucene/sharded/Animal03 (overridden indexBase, overridden indexName)
- for subindex 4: /usr/lucene/indexes/Animal.4 (shared indexBase, default indexName)
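The resolution rules behind this listing can be sketched in plain Java. This is only an illustration under stated assumptions: ShardDirectorySketch, lookup and directoryFor are hypothetical names rather than Hibernate Search API, the shard, index, default lookup order for indexBase is inferred from the example, and only a shard-level indexName override is considered.

```java
import java.util.Properties;

public class ShardDirectorySketch {

    static final String PREFIX = "hibernate.search.";

    // Looks a setting up at shard level, then index level, then in the default section.
    static String lookup(Properties p, String index, int shard, String key) {
        String value = p.getProperty(PREFIX + index + "." + shard + "." + key);
        if (value == null) {
            value = p.getProperty(PREFIX + index + "." + key);
        }
        if (value == null) {
            value = p.getProperty(PREFIX + "default." + key);
        }
        return value;
    }

    // Directory of a shard: <indexBase>/<indexName>; indexName defaults to <index>.<shard>.
    public static String directoryFor(Properties p, String index, int shard) {
        String base = lookup(p, index, shard, "indexBase");
        String name = p.getProperty(PREFIX + index + "." + shard + ".indexName",
                index + "." + shard);
        return base + "/" + name;
    }

    // The settings of the Animal example, loaded programmatically.
    public static Properties exampleConfig() {
        Properties p = new Properties();
        p.setProperty("hibernate.search.default.indexBase", "/usr/lucene/indexes");
        p.setProperty("hibernate.search.Animal.sharding_strategy.nbr_of_shards", "5");
        p.setProperty("hibernate.search.Animal.directory_provider",
                "org.hibernate.search.store.FSDirectoryProvider");
        p.setProperty("hibernate.search.Animal.0.indexName", "Animal00");
        p.setProperty("hibernate.search.Animal.3.indexBase", "/usr/lucene/sharded");
        p.setProperty("hibernate.search.Animal.3.indexName", "Animal03");
        return p;
    }

    public static void main(String[] args) {
        Properties p = exampleConfig();
        for (int shard = 0; shard < 5; shard++) {
            System.out.println("subindex " + shard + ": " + directoryFor(p, "Animal", shard));
        }
    }
}
```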

Note

This is only presented here so that you know the option is available. There is really not much benefit in sharing indexes.

It is technically possible to store the information of more than one entity into a single Lucene index. There are two ways to accomplish this:

- Configuring the underlying directory providers to point to the same physical index directory. In practice, you set the property hibernate.search.[fully qualified entity name].indexName to the same value. As an example, let's use the same index (directory) for the Furniture and Animal entities. We just set indexName for both entities to, for example, “Animal”. Both entities will then be stored in the Animal directory:

  hibernate.search.org.hibernate.search.test.shards.Furniture.indexName = Animal
  hibernate.search.org.hibernate.search.test.shards.Animal.indexName = Animal

- Setting the @Indexed annotation's index attribute of the entities you want to merge to the same value. If we again wanted all Furniture instances to be indexed in the Animal index along with all instances of Animal, we would specify @Indexed(index="Animal") on both the Animal and Furniture classes.

It is possible to refine how Hibernate Search interacts with Lucene through the worker configuration. The work can be applied directly to the Lucene directory or sent to a JMS queue for later processing. When applied to the Lucene directory, the work can be processed synchronously or asynchronously with respect to the transaction commit.

You can define the worker configuration using the following properties

Table 3.2. worker configuration

| Property | Description |
|---|---|
| hibernate.search.worker.backend | Out of the box support for the Apache Lucene back end and the JMS back end. Defaults to lucene. Also supports jms, blackhole, jgroupsMaster and jgroupsSlave. |
| hibernate.search.worker.execution | Supports synchronous and asynchronous execution. Defaults to sync. |
| hibernate.search.worker.thread_pool.size | Defines the number of threads in the pool. Useful only for asynchronous execution. Defaults to 1. |
| hibernate.search.worker.buffer_queue.max | Defines the maximum size of the work queue if the thread pool is starved. Useful only for asynchronous execution. Defaults to infinite. If the limit is reached, the work is done by the main thread. |
| hibernate.search.worker.jndi.* | Defines the JNDI properties to initiate the InitialContext (if needed). JNDI is only used by the JMS back end. |
| hibernate.search.worker.jms.connection_factory | Mandatory for the JMS back end. Defines the JNDI name to look up the JMS connection factory from (/ConnectionFactory by default in JBoss AS). |
| hibernate.search.worker.jms.queue | Mandatory for the JMS back end. Defines the JNDI name to look up the JMS queue from. The queue will be used to post work messages. |
| hibernate.search.worker.jgroups.clusterName | Optional for the JGroups back end. Defines the name of the JGroups channel. |
| hibernate.search.worker.jgroups.configurationFile | Optional JGroups network stack configuration. Defines the name of a JGroups configuration file, which must exist on the classpath. |
| hibernate.search.worker.jgroups.configurationXml | Optional JGroups network stack configuration. Defines a String representing the JGroups configuration as XML. |
| hibernate.search.worker.jgroups.configurationString | Optional JGroups network stack configuration. Provides the JGroups configuration in plain text. |
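The overflow rule described for buffer_queue.max (work falls back to the submitting thread once the queue is full) resembles the standard java.util.concurrent pattern of a bounded queue combined with ThreadPoolExecutor.CallerRunsPolicy. The sketch below illustrates that pattern only; it is not taken from Hibernate Search, and BoundedWorkQueueSketch and runDemo are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class BoundedWorkQueueSketch {

    // Submits three tasks to a one-thread pool with a one-slot queue and
    // records which thread ran each task.
    public static List<String> runDemo() {
        List<String> ranOn = Collections.synchronizedList(new ArrayList<>());
        CountDownLatch workerBusy = new CountDownLatch(1);
        CountDownLatch release = new CountDownLatch(1);

        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1,                          // comparable to thread_pool.size = 1
                0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(1),   // comparable to buffer_queue.max = 1
                new ThreadPoolExecutor.CallerRunsPolicy()); // overflow runs on the submitter
        try {
            // Task 1 occupies the single worker thread until it is released.
            pool.execute(() -> {
                ranOn.add("task1:" + Thread.currentThread().getName());
                workerBusy.countDown();
                try {
                    release.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            workerBusy.await();

            // Task 2 fits in the queue; task 3 finds it full and runs on the caller.
            pool.execute(() -> ranOn.add("task2:" + Thread.currentThread().getName()));
            pool.execute(() -> ranOn.add("task3:" + Thread.currentThread().getName()));

            release.countDown();
            pool.shutdown();
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            release.countDown(); // never leave the worker blocked
            pool.shutdown();
        }
        return ranOn;
    }

    public static void main(String[] args) {
        runDemo().forEach(System.out::println);
    }
}
```

The trade-off of this design is that a saturated pool slows the submitting thread down instead of dropping work or growing the queue without bound.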

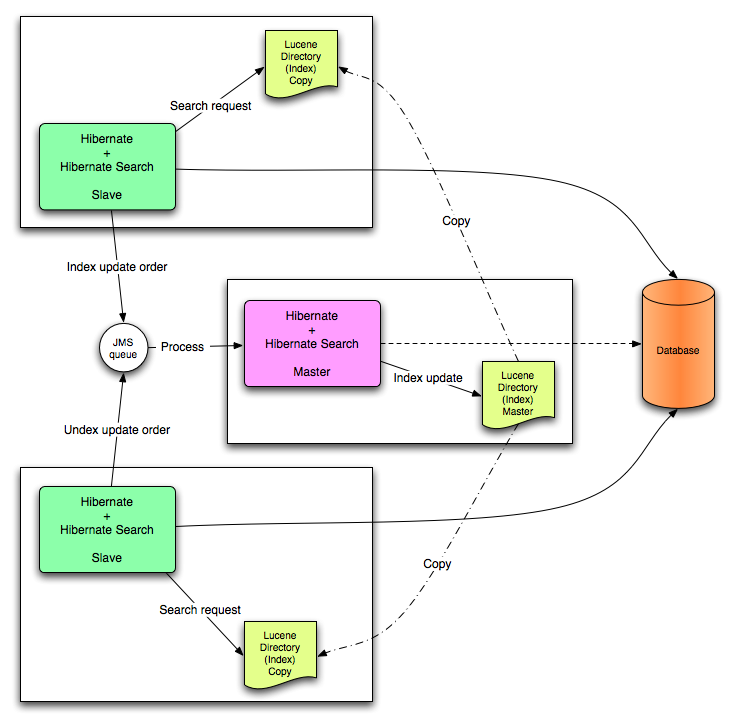

This section describes in greater detail how to configure the Master / Slaves Hibernate Search architecture.

JMS back end configuration.

Every index update operation is sent to a JMS queue. Index querying operations are executed on a local index copy.

Example 3.6. JMS Slave configuration

### slave configuration

## DirectoryProvider
# (remote) master location
hibernate.search.default.sourceBase = /mnt/mastervolume/lucenedirs/mastercopy

# local copy location
hibernate.search.default.indexBase = /Users/prod/lucenedirs

# refresh every half hour
hibernate.search.default.refresh = 1800

# appropriate directory provider
hibernate.search.default.directory_provider = org.hibernate.search.store.FSSlaveDirectoryProvider

## Backend configuration
hibernate.search.worker.backend = jms
hibernate.search.worker.jms.connection_factory = /ConnectionFactory
hibernate.search.worker.jms.queue = queue/hibernatesearch
#optional jndi configuration (check your JMS provider for more information)

## Optional asynchronous execution strategy
# hibernate.search.worker.execution = async
# hibernate.search.worker.thread_pool.size = 2
# hibernate.search.worker.buffer_queue.max = 50

A file system local copy is recommended for faster search results.

The refresh period should be higher than the expected copy time.

Every index update operation is taken from a JMS queue and executed. The master index is copied on a regular basis.

Example 3.7. JMS Master configuration

### master configuration

## DirectoryProvider
# (remote) master location where information is copied to
hibernate.search.default.sourceBase = /mnt/mastervolume/lucenedirs/mastercopy

# local master location
hibernate.search.default.indexBase = /Users/prod/lucenedirs

# refresh every half hour
hibernate.search.default.refresh = 1800

# appropriate directory provider
hibernate.search.default.directory_provider = org.hibernate.search.store.FSMasterDirectoryProvider

## Backend configuration
#Backend is the default lucene one

The refresh period should be higher than the expected copy time.

In addition to the Hibernate Search framework configuration, a Message Driven Bean should be written and set up to process the index works queue through JMS.

Example 3.8. Message Driven Bean processing the indexing queue

@MessageDriven(activationConfig = {
    @ActivationConfigProperty(propertyName="destinationType", propertyValue="javax.jms.Queue"),
    @ActivationConfigProperty(propertyName="destination", propertyValue="queue/hibernatesearch"),
    @ActivationConfigProperty(propertyName="DLQMaxResent", propertyValue="1")
} )
public class MDBSearchController extends AbstractJMSHibernateSearchController implements MessageListener {
    @PersistenceContext EntityManager em;

    //method retrieving the appropriate session
    protected Session getSession() {
        return (Session) em.getDelegate();
    }

    //potentially close the session opened in #getSession(), not needed here
    protected void cleanSessionIfNeeded(Session session) {
    }
}

This example inherits from the abstract JMS controller class available in the Hibernate Search source code and implements a Java EE 5 MDB. This implementation is given as an example and can be adjusted to make use of non Java EE Message Driven Beans, although the result will most likely be more complex. For more information about getSession() and cleanSessionIfNeeded(), please check the javadoc of AbstractJMSHibernateSearchController.

This section describes how to configure the JGroups Master/Slave back end. The configuration examples in the JMS Master/Slave configuration section (Section 3.5, “JMS Master/Slave configuration”) also apply here; only a different backend needs to be set.

Every index update operation is sent through a JGroups channel to the master node. Index querying operations are executed on a local index copy.

Example 3.9. JGroups Slave configuration

### slave configuration

## Backend configuration

hibernate.search.worker.backend = jgroupsSlave

Every index update operation is taken from a JGroups channel and executed. The master index is copied on a regular basis.

Example 3.10. JGroups Master configuration

### master configuration

## Backend configuration

hibernate.search.worker.backend = jgroupsMaster

Optionally, the configuration for the JGroups transport protocols (UDP, TCP) and the channel name can be defined. It can be applied to both master and slave nodes. There are several ways to configure the JGroups transport details. If it is not defined explicitly, the configuration found in the flush-udp.xml file is used.

Example 3.11. JGroups transport protocols configuration

## configuration

#udp.xml file needs to be located in the classpath

hibernate.search.worker.backend.jgroups.configurationFile = udp.xml

#protocol stack configuration provided in XML format

hibernate.search.worker.backend.jgroups.configurationXml =

<config xmlns="urn:org:jgroups"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="urn:org:jgroups file:schema/JGroups-2.8.xsd">

<UDP

mcast_addr="${jgroups.udp.mcast_addr:228.10.10.10}"

mcast_port="${jgroups.udp.mcast_port:45588}"

tos="8"

thread_naming_pattern="pl"

thread_pool.enabled="true"

thread_pool.min_threads="2"

thread_pool.max_threads="8"

thread_pool.keep_alive_time="5000"

thread_pool.queue_enabled="false"

thread_pool.queue_max_size="100"

thread_pool.rejection_policy="Run"/>

<PING timeout="1000" num_initial_members="3"/>

<MERGE2 max_interval="30000" min_interval="10000"/>

<FD_SOCK/>

<FD timeout="3000" max_tries="3"/>

<VERIFY_SUSPECT timeout="1500"/>

<pbcast.STREAMING_STATE_TRANSFER/>

<pbcast.FLUSH timeout="0"/>

</config>

#protocol stack configuration provided in "old style" jgroups format

hibernate.search.worker.backend.jgroups.configurationString =

UDP(mcast_addr=228.1.2.3;mcast_port=45566;ip_ttl=32):PING(timeout=3000;

num_initial_members=6):FD(timeout=5000):VERIFY_SUSPECT(timeout=1500):

pbcast.NAKACK(gc_lag=10;retransmit_timeout=3000):UNICAST(timeout=5000):

FRAG:pbcast.GMS(join_timeout=3000;shun=false;print_local_addr=true)

Master and slave nodes communicate over a JGroups channel that is identified by the same name. The name of the channel can be defined explicitly; if it is not, the default HSearchCluster is used.

Example 3.12. JGroups channel name configuration

## Backend configuration

hibernate.search.worker.backend.jgroups.clusterName = Hibernate-Search-Cluster

The different reader strategies are described in Reader strategy. Out of the box strategies are:

- shared: share index readers across several queries. This strategy is the most efficient.
- not-shared: create an index reader for each individual query

The default reader strategy is shared. This can

be adjusted:

hibernate.search.reader.strategy = not-shared

Adding this property switches to the not-shared

strategy.

Or if you have a custom reader strategy:

hibernate.search.reader.strategy = my.corp.myapp.CustomReaderProvider

where my.corp.myapp.CustomReaderProvider is

the custom strategy implementation.

Hibernate Search is enabled out of the box when using Hibernate

Annotations or Hibernate EntityManager. If, for some reason you need to

disable it, set

hibernate.search.autoregister_listeners to false.

Note that there is no performance penalty when the listeners are enabled

but no entities are annotated as indexed.

To enable Hibernate Search in Hibernate Core (i.e. if you don't use Hibernate Annotations), add the FullTextIndexEventListener for the following six Hibernate events and also add it after the default DefaultFlushEventListener, as in the following example.

Example 3.13. Explicitly enabling Hibernate Search by configuring the

FullTextIndexEventListener

<hibernate-configuration>

<session-factory>

...

<event type="post-update">

<listener class="org.hibernate.search.event.FullTextIndexEventListener"/>

</event>

<event type="post-insert">

<listener class="org.hibernate.search.event.FullTextIndexEventListener"/>

</event>

<event type="post-delete">

<listener class="org.hibernate.search.event.FullTextIndexEventListener"/>

</event>

<event type="post-collection-recreate">

<listener class="org.hibernate.search.event.FullTextIndexEventListener"/>

</event>

<event type="post-collection-remove">

<listener class="org.hibernate.search.event.FullTextIndexEventListener"/>

</event>

<event type="post-collection-update">

<listener class="org.hibernate.search.event.FullTextIndexEventListener"/>

</event>

<event type="flush">

<listener class="org.hibernate.event.def.DefaultFlushEventListener"/>

<listener class="org.hibernate.search.event.FullTextIndexEventListener"/>

</event>

</session-factory>

</hibernate-configuration>

By default, every time an object is inserted, updated or deleted through Hibernate, Hibernate Search updates the corresponding Lucene index. It is sometimes desirable to disable this feature, either because your index is read-only or because index updates are done in batches (see Section 6.3, “Rebuilding the whole Index”).

To disable event based indexing, set

hibernate.search.indexing_strategy manual

Note

In most cases, the JMS backend provides the best of both worlds: a lightweight event based system keeps track of all changes in the system, and the heavyweight indexing process is done by a separate process or machine.

Hibernate Search allows you to tune the Lucene indexing performance

by specifying a set of parameters which are passed through to underlying

Lucene IndexWriter such as

mergeFactor, maxMergeDocs and

maxBufferedDocs. You can specify these parameters

either as default values applying for all indexes, on a per index basis,

or even per shard.

There are two sets of parameters allowing for different performance

settings depending on the use case. During indexing operations triggered

by database modifications, the parameters are grouped by the

transaction keyword:

hibernate.search.[default|<indexname>].indexwriter.transaction.<parameter_name>

When indexing occurs via FullTextSession.index() or

via a MassIndexer (see

Section 6.3, “Rebuilding the whole Index”), the used properties are those

grouped under the batch keyword:

hibernate.search.[default|<indexname>].indexwriter.batch.<parameter_name>

If no value is set for a

.batch value in a specific shard configuration,

Hibernate Search will look at the index section, then at the default

section:

hibernate.search.Animals.2.indexwriter.transaction.max_merge_docs 10
hibernate.search.Animals.2.indexwriter.transaction.merge_factor 20
hibernate.search.default.indexwriter.batch.max_merge_docs 100

This configuration will result in these settings being applied to shard 2 of the Animals index:

- transaction.max_merge_docs = 10
- batch.max_merge_docs = 100
- transaction.merge_factor = 20
- batch.merge_factor = Lucene default

All other values will use the defaults defined in Lucene.
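The lookup order described above (shard section, then index section, then default section) can be sketched as follows. This is a hedged illustration rather than the library's code: IndexWriterSettingSketch, resolve and exampleConfig are hypothetical names, and a null result simply stands for "leave the value at Lucene's default".

```java
import java.util.Properties;

public class IndexWriterSettingSketch {

    // Resolves hibernate.search.<scope>.indexwriter.<mode>.<param>, trying the
    // shard, then the index, then the default section. Returns null when unset.
    public static String resolve(Properties p, String index, int shard,
                                 String mode, String param) {
        String suffix = ".indexwriter." + mode + "." + param;
        String[] scopes = { index + "." + shard, index, "default" };
        for (String scope : scopes) {
            String value = p.getProperty("hibernate.search." + scope + suffix);
            if (value != null) {
                return value;
            }
        }
        return null; // nothing configured: Lucene's own default applies
    }

    // The three example settings, loaded programmatically.
    public static Properties exampleConfig() {
        Properties p = new Properties();
        p.setProperty("hibernate.search.Animals.2.indexwriter.transaction.max_merge_docs", "10");
        p.setProperty("hibernate.search.Animals.2.indexwriter.transaction.merge_factor", "20");
        p.setProperty("hibernate.search.default.indexwriter.batch.max_merge_docs", "100");
        return p;
    }

    public static void main(String[] args) {
        Properties p = exampleConfig();
        for (String mode : new String[] {"transaction", "batch"}) {
            for (String param : new String[] {"max_merge_docs", "merge_factor"}) {
                System.out.println(mode + "." + param + " = "
                        + resolve(p, "Animals", 2, mode, param));
            }
        }
    }
}
```

Note that the sketch never falls back from batch to transaction values, in line with the two parameter groups being configured separately.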

The default for all values is to leave them at Lucene's own default,

so the listed values in the following table actually depend on the version

of Lucene you are using; values shown are relative to version

2.4. For more information about Lucene indexing

performances, please refer to the Lucene documentation.

Warning

In previous versions, the batch parameters inherited from the transaction properties. They now need to be set explicitly.

Table 3.3. List of indexing performance and behavior properties

| Property | Description | Default Value |
|---|---|---|
| hibernate.search.[default\|<indexname>].exclusive_index_use | Set to | false (releases locks as soon as possible) |
| hibernate.search.[default\|<indexname>].indexwriter.[transaction\|batch].max_buffered_delete_terms | Determines the minimal number of delete terms required before the buffered in-memory delete terms are applied and flushed. If there are documents buffered in memory at the time, they are merged and a new segment is created. | Disabled (flushes by RAM usage) |
| hibernate.search.[default\|<indexname>].indexwriter.[transaction\|batch].max_buffered_docs | Controls the number of documents buffered in memory during indexing. The bigger the value, the more RAM is consumed. | Disabled (flushes by RAM usage) |
| hibernate.search.[default\|<indexname>].indexwriter.[transaction\|batch].max_field_length | The maximum number of terms that will be indexed for a single field. This limits the amount of memory required for indexing so that very large data will not crash the indexing process by running out of memory. This setting refers to the number of running terms, not to the number of different terms. This silently truncates large documents, excluding from the index all terms that occur further in the document. If you know your source documents are large, be sure to set this value high enough to accommodate the expected size. If you set it to Integer.MAX_VALUE, then the only limit is your memory, but you should anticipate an OutOfMemoryError. If setting this value in | 10000 |
| hibernate.search.[default\|<indexname>].indexwriter.[transaction\|batch].max_merge_docs | Defines the largest number of documents allowed in a segment. Larger values are best for batched indexing and speedier searches. Small values are best for transaction indexing. | Unlimited (Integer.MAX_VALUE) |
| hibernate.search.[default\|<indexname>].indexwriter.[transaction\|batch].merge_factor | Controls segment merge frequency and size. Determines how often segment indexes are merged when insertion occurs. With smaller values, less RAM is used while indexing, and searches on unoptimized indexes are faster, but indexing speed is slower. With larger values, more RAM is used during indexing, and while searches on unoptimized indexes are slower, indexing is faster. Thus larger values (> 10) are best for batch index creation, and smaller values (< 10) for indexes that are interactively maintained. The value must not be lower than 2. | 10 |
| hibernate.search.[default\|<indexname>].indexwriter.[transaction\|batch].ram_buffer_size | Controls the amount of RAM in MB dedicated to document buffers. When used together with max_buffered_docs, a flush occurs for whichever event happens first. Generally, for faster indexing performance it's best to flush by RAM usage instead of document count and use as large a RAM buffer as you can. | 16 MB |
| hibernate.search.[default\|<indexname>].indexwriter.[transaction\|batch].term_index_interval | Expert: Set the interval between indexed terms. Large values cause less memory to be used by IndexReader, but slow random-access to terms. Small values cause more memory to be used by an IndexReader, and speed random-access to terms. See Lucene documentation for more details. | 128 |
| hibernate.search.[default\|<indexname>].indexwriter.[transaction\|batch].use_compound_file | The advantage of using the compound file format is that fewer file descriptors are used. The disadvantage is that indexing takes more time and temporary disk space. You can set this parameter to false in an attempt to improve the indexing time, but you could run out of file descriptors if mergeFactor is also large. Boolean parameter, use " | true |

Tip

When your architecture permits it, always set

hibernate.search.default.exclusive_index_use=true

as it greatly improves efficiency in index writing.

To tune the indexing speed, it might be useful to time the object loading from the database in isolation from the writes to the index. To achieve this, set blackhole as the worker backend and start your indexing routines. This backend does not disable Hibernate Search: it will still generate the needed changesets to the index, but will discard them instead of flushing them to the index. As opposed to setting hibernate.search.indexing_strategy to manual, using blackhole will possibly load more data to rebuild the index from associated entities.

hibernate.search.worker.backend blackhole

The recommended approach is to focus first on optimizing the object loading, and then use the timings you achieve as a baseline to tune the indexing process.

The blackhole backend is not meant to be used in

production, only as a tool to identify indexing bottlenecks.

Lucene Directories have default locking strategies which work well for most cases, but it's possible to specify which LockFactory you want to use for each index managed by Hibernate Search.

Some of these locking strategies require a filesystem level lock and may be used even on RAM based indexes, but this is not recommended and of no practical use.

To select a locking factory, set the hibernate.search.<index>.locking_strategy option to one of simple, native, single or none, or set it to the fully qualified name of an implementation of org.hibernate.search.store.LockFactoryFactory. By implementing this interface, you can provide a custom org.apache.lucene.store.LockFactory.

Table 3.4. List of available LockFactory implementations

| name | Class | Description |
|---|---|---|
| simple | org.apache.lucene.store.SimpleFSLockFactory | Safe implementation based on Java's File API; it marks the usage of the index by creating a marker file. If for some reason you had to kill your application, you will need to remove this file before restarting it. This is the default implementation for |
| native | org.apache.lucene.store.NativeFSLockFactory | As does This implementation has known problems on NFS. |
| single | org.apache.lucene.store.SingleInstanceLockFactory | This LockFactory doesn't use a file marker but is a Java object lock held in memory; therefore it's possible to use it only when you are sure the index is not going to be shared by any other process. This is the default implementation for |
| none | org.apache.lucene.store.NoLockFactory | All changes to this index are not coordinated by any lock; test your application carefully and make sure you know what it means. |

hibernate.search.default.locking_strategy simple
hibernate.search.Animals.locking_strategy native
hibernate.search.Books.locking_strategy org.custom.components.MyLockingFactory

Hibernate Search allows you to configure how exceptions are handled during the indexing process. If no configuration is provided then exceptions are logged to the log output by default. It is possible to explicitly declare the exception logging mechanism as seen below:

hibernate.search.error_handler log

The default exception handling occurs for both synchronous and asynchronous indexing. Hibernate Search provides an easy mechanism to override the default error handling implementation.

In order to provide your own implementation, you must implement the ErrorHandler interface, which provides the handle(ErrorContext context) method. The ErrorContext provides a reference to the primary LuceneWork that failed, the underlying exception and any subsequent LuceneWork that could not be processed due to the primary exception.

public interface ErrorContext {
    List<LuceneWork> getFailingOperations();
    LuceneWork getOperationAtFault();
    Throwable getThrowable();
    boolean hasErrors();
}

The following provides an example implementation of ErrorHandler:

public class CustomErrorHandler implements ErrorHandler {
    public void handle ( ErrorContext context ) {
        ...
        //publish error context to some internal error handling system
        ...
    }
}

To register this error handler with Hibernate Search, you must declare the fully qualified class name of CustomErrorHandler in the configuration properties:

hibernate.search.error_handler CustomErrorHandler