We define high availability as the ability for the system to continue functioning after failure of one or more of the servers.

Part of high availability is failover, which we define as the ability for client connections to migrate from one server to another in the event of server failure so that client applications can continue to operate.

HornetQ allows servers to be linked together as live-backup groups, where each live server can have one or more backup servers. A backup server is owned by only one live server. Backup servers are not operational until failover occurs; however, one chosen backup, which will be in passive mode, announces its status and waits to take over the live server's work.

Before failover, only the live server serves HornetQ clients, while each backup server is either passive or waiting to become the passive backup. When a live server crashes or is brought down in the correct mode, the backup server currently in passive mode becomes live and another backup server becomes passive. If the original live server restarts after a failover, it has priority and will be the next server to become live when the current live server goes down. If the current live server is configured to allow automatic failback, it will detect the original live server coming back up and automatically stop.

HornetQ provides only shared store in this release. Replication will be available in the next release.

Note

Only persistent message data will survive failover. Any non persistent message data will not be available after failover.

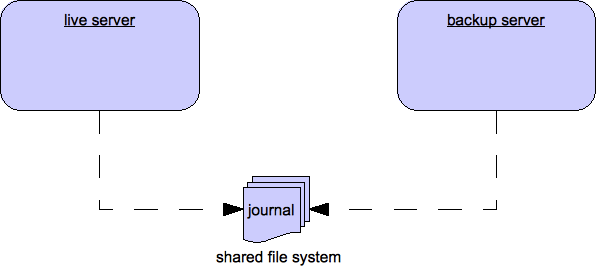

When using a shared store, both live and backup servers share the same entire data directory using a shared file system. This includes the paging directory, journal directory, large messages and bindings journal.

When failover occurs and a backup server takes over, it will load the persistent storage from the shared file system and clients can connect to it.

This style of high availability differs from data replication in that it requires a shared file system which is accessible by both the live and backup nodes. Typically this will be some kind of high performance Storage Area Network (SAN). We do not recommend using Network Attached Storage (NAS), e.g. NFS mounts, to store any shared journal (NFS is slow).

The advantage of shared-store high availability is that no replication occurs between the live and backup nodes, so it does not suffer any performance penalty from replication overhead during normal operation.

The disadvantage of shared-store high availability is that it requires a shared file system, and when the backup server activates it needs to load the journal from the shared store, which can take some time depending on the amount of data in the store.

If you require the highest performance during normal operation, have access to a fast SAN, and can live with a slightly slower failover (depending on amount of data), we recommend shared-store high availability.

To configure the live and backup servers to share their store, set the following in the hornetq-configuration.xml of every server:

<shared-store>true</shared-store>

Additionally, each backup server must be flagged explicitly as a backup:

<backup>true</backup>

In order for live-backup groups to operate properly with a shared store, both servers must have the location of the journal directory configured to point to the same shared location (as explained in Section 15.3, “Configuring the message journal”).

Each node, live or backup, also needs a cluster connection defined, even if it is not part of a cluster. The cluster connection defines how backup servers announce their presence to their live server or to any other nodes in the cluster. Refer to Chapter 38, HornetQ and Application Server Cluster Configuration for details on how this is done.

After a live server has failed and a backup has taken over its duties, you may want to restart the live server and have clients fail back. To do this, simply restart the original live server and kill the new live server. You can do this by killing the process itself or just waiting for the server to crash naturally.

It is also possible to cause failover to occur on normal server shutdown. To enable this, set the following property to true in the hornetq-configuration.xml configuration file:

<failover-on-shutdown>true</failover-on-shutdown>

By default this is set to false. If you have left it set to false but still want to stop the server normally and cause failover, you can do so by using the management API, as explained in Section 30.1.1.1, “Core Server Management”.

You can also force the new live server to shut down when the old live server comes back up, allowing the original live server to take over automatically, by setting the following property in the hornetq-configuration.xml configuration file:

<allow-failback>true</allow-failback>

HornetQ defines two types of client failover:

Automatic client failover

Application-level client failover

HornetQ also provides 100% transparent automatic reattachment of connections to the same server (e.g. in case of transient network problems). This is similar to failover, except that it reconnects to the same server. It is discussed in Chapter 34, Client Reconnection and Session Reattachment.

During failover, if the client has consumers on any non persistent or temporary queues, those queues will be automatically recreated during failover on the backup node, since the backup node will not have any knowledge of non persistent queues.

HornetQ clients can be configured to receive knowledge of all live and backup servers, so that if the connection between the client and the live server fails, the client will detect this and reconnect to the backup server. The backup server will then automatically recreate any sessions and consumers that existed on each connection before failover, thus saving the user from having to hand-code manual reconnection logic.

HornetQ clients detect connection failure when they have not received packets from the server within the time given by client-failure-check-period, as explained in Chapter 17, Detecting Dead Connections. If the client does not receive data in good time, it will assume the connection has failed and attempt failover. Also, if the socket is closed by the OS, which usually happens when the server process is killed rather than the machine itself crashing, the client will fail over straight away.
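The timeout rule described above can be pictured with a minimal sketch. This is not HornetQ's implementation: the class `FailureCheckSketch` and its methods are hypothetical names, and timestamps are passed in explicitly so the behaviour is easy to follow.

```java
// Hypothetical sketch of timeout-based failure detection; not HornetQ code.
class FailureCheckSketch {
    private final long checkPeriodMillis; // plays the role of client-failure-check-period
    private long lastPacketMillis;

    FailureCheckSketch(long checkPeriodMillis, long nowMillis) {
        this.checkPeriodMillis = checkPeriodMillis;
        this.lastPacketMillis = nowMillis;
    }

    /** Records that a packet arrived from the server at the given time. */
    void packetReceived(long nowMillis) {
        lastPacketMillis = nowMillis;
    }

    /** Returns true if no packet has arrived within the check period. */
    boolean connectionFailed(long nowMillis) {
        return nowMillis - lastPacketMillis > checkPeriodMillis;
    }

    public static void main(String[] args) {
        FailureCheckSketch check = new FailureCheckSketch(30_000, 0);
        check.packetReceived(10_000);
        System.out.println(check.connectionFailed(35_000)); // false: 25 s since the last packet
        System.out.println(check.connectionFailed(45_000)); // true: 35 s since the last packet
    }
}
```

A closed socket is a separate, faster signal: in that case the client does not need to wait for the period to elapse.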

HornetQ clients can be configured to discover the list of live-backup server groups in a number of different ways. They can be configured explicitly or, probably most commonly, they can use server discovery to obtain the list automatically. For full details on how to configure server discovery, please see Chapter 38, HornetQ and Application Server Cluster Configuration. Alternatively, the clients can explicitly connect to a specific server and download the current servers and backups; see Chapter 38, HornetQ and Application Server Cluster Configuration.

To enable automatic client failover, the client must be configured to allow non-zero reconnection attempts (as explained in Chapter 34, Client Reconnection and Session Reattachment).

By default failover will only occur after at least one connection has been made to the live server. In other words, by default, failover will not occur if the client fails to make an initial connection to the live server - in this case it will simply retry connecting to the live server according to the reconnect-attempts property and fail after this number of attempts.

Since the client does not learn about the full topology until after the first connection is made, there is a window in which it does not know about the backup. If a failure happens at this point, the client can only try reconnecting to the original live server. To configure how many attempts the client will make, set the property initialConnectAttempts on the ClientSessionFactoryImpl or HornetQConnectionFactory, or initial-connect-attempts in XML. The default is 0, which means try only once. Once the number of attempts has been made, an exception will be thrown.

For examples of automatic failover with transacted and non-transacted JMS sessions, please see Section 11.1.65, “Transaction Failover” and Section 11.1.40, “Non-Transaction Failover With Server Data Replication”.

HornetQ does not replicate full server state between live and backup servers. When the new session is automatically recreated on the backup it won't have any knowledge of messages already sent or acknowledged in that session. Any in-flight sends or acknowledgements at the time of failover might also be lost.

By replicating full server state, theoretically we could provide a 100% transparent seamless failover, which would avoid any lost messages or acknowledgements. However, this comes at a great cost: replicating the full server state (including the queues, sessions, etc.). This would require replication of the entire server state machine; every operation on the live server would have to be replicated on the replica server(s) in the exact same global order to ensure a consistent replica state. This is extremely hard to do in a performant and scalable way, especially when one considers that multiple threads are changing the live server state concurrently.

It is possible to provide full state machine replication using techniques such as virtual synchrony, but this does not scale well and effectively serializes all operations to a single thread, dramatically reducing concurrency.

Other techniques for multi-threaded active replication exist such as replicating lock states or replicating thread scheduling but this is very hard to achieve at a Java level.

Consequently, it was decided that it was not worth massively reducing performance and concurrency for the sake of 100% transparent failover. Even without 100% transparent failover, it is simple to guarantee once and only once delivery, even in the case of failure, by using a combination of duplicate detection and retrying of transactions. However, this is not 100% transparent to the client code.

If the client code is in a blocking call to the server, waiting for a response to continue its execution, when failover occurs, the new session will not have any knowledge of the call that was in progress. This call might otherwise hang forever, waiting for a response that will never come.

To prevent this, HornetQ will unblock any blocking calls that were in progress at the time of failover by making them throw a javax.jms.JMSException (if using JMS), or a HornetQException with error code HornetQException.UNBLOCKED. It is up to the client code to catch this exception and retry any operations if desired.
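The catch-and-retry pattern described above might look roughly like the following sketch. It deliberately avoids the real JMS and HornetQ classes so that it compiles on its own: `UnblockedException` and `BlockingCall` are hypothetical stand-ins for the unblock exception and for any blocking client call, and the retry limit is an arbitrary illustration.

```java
// Hypothetical sketch of retrying a call that failover has unblocked; not HornetQ code.
class UnblockRetrySketch {
    /** Stand-in (assumption) for the exception thrown when failover unblocks a call. */
    static class UnblockedException extends Exception {
        UnblockedException(String message) {
            super(message);
        }
    }

    /** Stand-in (assumption) for any blocking client call, such as a blocking send. */
    interface BlockingCall<T> {
        T invoke() throws UnblockedException;
    }

    /** Runs the call, retrying up to maxAttempts times if failover unblocks it. */
    static <T> T callWithRetry(BlockingCall<T> call, int maxAttempts) throws UnblockedException {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        UnblockedException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return call.invoke();
            } catch (UnblockedException e) {
                last = e; // the call was unblocked by failover; try again
            }
        }
        throw last; // every attempt was unblocked; let the caller decide what to do
    }
}
```

Retrying a send blindly can deliver a message twice if the original send did reach the server, so in practice this pattern is paired with duplicate detection.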

If the method being unblocked is a call to commit() or prepare(), then the transaction will be automatically rolled back and HornetQ will throw a javax.jms.TransactionRolledBackException (if using JMS), or a HornetQException with error code HornetQException.TRANSACTION_ROLLED_BACK if using the core API.

If the session is transactional and messages have already been sent or acknowledged in the current transaction, then the server cannot be sure that messages sent or acknowledgements have not been lost during the failover.

Consequently the transaction will be marked as rollback-only, and any subsequent attempt to commit it will throw a javax.jms.TransactionRolledBackException (if using JMS), or a HornetQException with error code HornetQException.TRANSACTION_ROLLED_BACK if using the core API.

2 phase commit

The caveat to this rule is when XA is used, either via JMS or through the core API. If 2 phase commit is used and prepare has already been called, then rolling back could cause a HeuristicMixedException. Because of this, the commit will throw an XAException.XA_RETRY exception. This informs the Transaction Manager that it should retry the commit at some later point in time. A side effect of this is that any non persistent messages will be lost. To avoid this, use persistent messages when using XA. With acknowledgements this is not an issue, since they are flushed to the server before prepare gets called.

It is up to the user to catch the exception and perform any client side local rollback code as necessary. There is no need to manually roll back the session, as it is already rolled back. The user can then just retry the transactional operations on the same session.

HornetQ ships with a fully functioning example demonstrating how to do this. Please see Section 11.1.65, “Transaction Failover”.

If failover occurs when a commit call is being executed, the server, as previously described, will unblock the call to prevent a hang, since no response will come back. In this case it is not easy for the client to determine whether the transaction commit was actually processed on the live server before failure occurred.

Note

If XA is being used, either via JMS or through the core API, then an XAException.XA_RETRY is thrown. This informs the Transaction Manager that a retry should occur at some point, and at some later point in time the Transaction Manager will retry the commit. If the original commit had not occurred, the transaction will still exist and will be committed; if it does not exist, it is assumed to have been committed, although the Transaction Manager may log a warning.

To remedy this, the client can simply enable duplicate detection (Chapter 37, Duplicate Message Detection) in the transaction, and retry the transaction operations again after the call is unblocked. If the transaction had indeed been committed on the live server successfully before failover, then when the transaction is retried, duplicate detection will ensure that any durable messages resent in the transaction will be ignored on the server to prevent them getting sent more than once.
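To make the retry-plus-duplicate-detection idea concrete, here is a toy model in plain Java. It is only a sketch under stated assumptions: `Msg`, `ToyServer` and the `duplicateId` field are hypothetical and do not correspond to HornetQ classes, and the single `ToyServer` object stands in for state that both the live server and its backup can see.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Toy model of server-side duplicate detection; not HornetQ code.
class DuplicateDetectionSketch {
    /** Hypothetical message carrying an application-chosen duplicate ID. */
    static final class Msg {
        final String duplicateId;
        final String body;

        Msg(String duplicateId, String body) {
            this.duplicateId = duplicateId;
            this.body = body;
        }
    }

    /** Toy server that remembers duplicate IDs and ignores messages it already accepted. */
    static final class ToyServer {
        private final Set<String> seenIds = new HashSet<>();
        final List<String> queue = new ArrayList<>();

        /** Applies the transaction and returns how many messages were actually enqueued. */
        int commit(List<Msg> transaction) {
            int accepted = 0;
            for (Msg m : transaction) {
                if (seenIds.add(m.duplicateId)) { // add() returns false for an ID seen before
                    queue.add(m.body);
                    accepted++;
                }
            }
            return accepted;
        }
    }

    public static void main(String[] args) {
        ToyServer server = new ToyServer();
        List<Msg> tx = Arrays.asList(new Msg("order-1", "create order"),
                                     new Msg("order-2", "bill order"));

        server.commit(tx);                // commit is processed, but imagine the response is lost
        int accepted = server.commit(tx); // the client retries the same transaction

        System.out.println(accepted);            // 0
        System.out.println(server.queue.size()); // 2
    }
}
```

Because the retried messages carry the same IDs as the first attempt, the second commit enqueues nothing, which is the property the client relies on when it cannot tell whether the original commit succeeded.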

Note

By catching the rollback exceptions and retrying, catching unblocked calls and enabling duplicate detection, once and only once delivery guarantees for messages can be provided in the case of failure, guaranteeing 100% no loss or duplication of messages.

If the session is non transactional, messages or acknowledgements can be lost in the event of failover.

If you wish to provide once and only once delivery guarantees for non transacted sessions too, enable duplicate detection and catch unblock exceptions as described in Section 39.2.1.3, “Handling Blocking Calls During Failover”.

JMS provides a standard mechanism for getting notified asynchronously of connection failure: javax.jms.ExceptionListener. Please consult the JMS javadoc or any good JMS tutorial for more information on how to use this.

The HornetQ core API also provides a similar feature in the form of the class org.hornetq.api.core.client.SessionFailureListener.

Any ExceptionListener or SessionFailureListener instance will always be called by HornetQ in the event of connection failure, irrespective of whether the connection was successfully failed over, reconnected or reattached. However, you can find out whether reconnect or reattach has happened either from the failedOver flag passed to the connectionFailed method of SessionFailureListener, or by inspecting the error code on the javax.jms.JMSException, which will be one of the following:

Table 39.1. JMSException error codes

| Error code | Description |
|---|---|
| FAILOVER | Failover has occurred and we have successfully reattached or reconnected. |
| DISCONNECT | No failover has occurred and we are disconnected. |
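As a small illustration of acting on these codes, the sketch below maps the error code string to an outcome. The class `FailureCodeSketch` and the `Outcome` enum are hypothetical names; in a real ExceptionListener the string would presumably come from `JMSException.getErrorCode()`, which is not used here so that the example compiles without the JMS API on the classpath.

```java
// Hypothetical helper for interpreting the error codes in Table 39.1; not HornetQ code.
class FailureCodeSketch {
    enum Outcome { FAILED_OVER, DISCONNECTED, OTHER }

    /** Maps an error code string from the table to an outcome; unknown or null codes map to OTHER. */
    static Outcome classify(String errorCode) {
        if ("FAILOVER".equals(errorCode)) {
            return Outcome.FAILED_OVER; // sessions were reattached or reconnected
        }
        if ("DISCONNECT".equals(errorCode)) {
            return Outcome.DISCONNECTED; // no failover happened; the connection is gone
        }
        return Outcome.OTHER;
    }
}
```

An application might, for example, only log a warning on `FAILED_OVER` but start its own recovery on `DISCONNECTED`.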

In some cases you may not want automatic client failover, and prefer to handle any connection failure yourself by coding your own manual reconnection logic in your own failure handler. We define this as application-level failover, since the failover is handled at the user application level.

To implement application-level failover using JMS, you need to set an ExceptionListener on the JMS connection. The ExceptionListener will be called by HornetQ when connection failure is detected. In your ExceptionListener, you would close your old JMS connections, potentially look up new connection factory instances from JNDI, and create new connections. In this case you may well be using HA-JNDI to ensure that the new connection factory is looked up from a different server.
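The steps above (close the old connection, then obtain a new one from another server) can be sketched without any JMS dependency as follows. `Conn` and `Connector` are hypothetical stand-ins for a JMS connection and for the lookup-and-connect step, and the list of candidate servers is an assumption about how the application tracks its alternatives.

```java
import java.util.List;

// Hypothetical sketch of application-level reconnection logic; not HornetQ code.
class AppLevelFailoverSketch {
    /** Stand-in (assumption) for a connection; a real application would hold a JMS connection. */
    interface Conn {
        void close();
    }

    /** Stand-in (assumption) for looking up a connection factory and connecting to one server. */
    interface Connector {
        Conn connect(String server) throws Exception;
    }

    /** Closes the failed connection, then tries each candidate server in order. */
    static Conn reconnect(Conn failed, List<String> servers, Connector connector) {
        if (failed != null) {
            try {
                failed.close();
            } catch (RuntimeException ignored) {
                // the old connection is already broken; nothing more to clean up
            }
        }
        for (String server : servers) {
            try {
                return connector.connect(server);
            } catch (Exception e) {
                // this server is unavailable; move on to the next candidate
            }
        }
        throw new IllegalStateException("no server in " + servers + " accepted a connection");
    }
}
```

In a JMS application this method would typically be called from the ExceptionListener, after which sessions, producers and consumers have to be recreated on the new connection by the application itself.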

For a working example of application-level failover, please see Section 11.1.2, “Application-Layer Failover”.

If you are using the core API, then the procedure is very similar: you would set a FailureListener on the core ClientSession instances.